Nvidia's high-profile announcement: the GH200 superchip is coming!


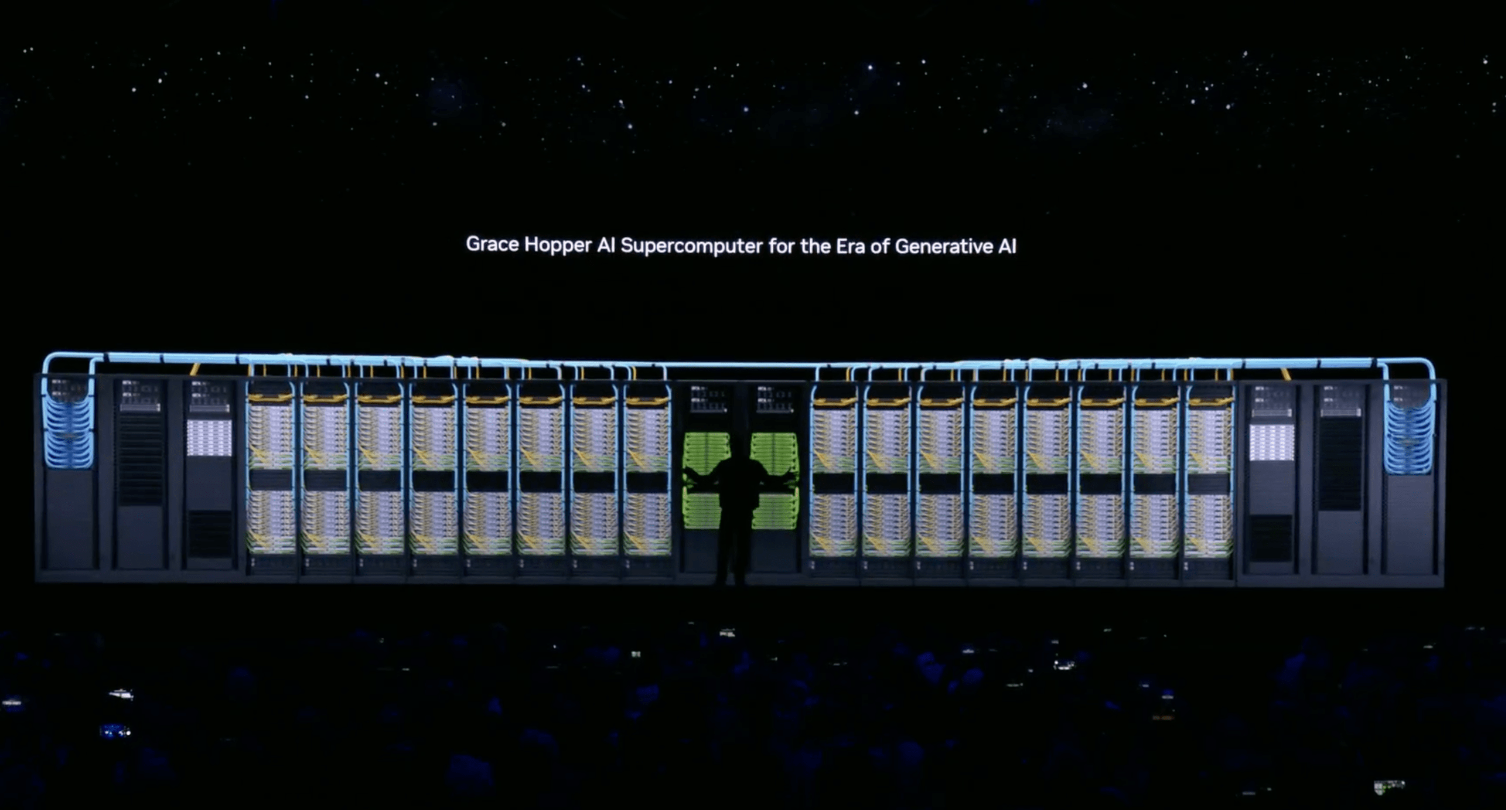

On August 8 (local time), Nvidia CEO Jensen Huang returned to SIGGRAPH, the professional computer graphics conference, and unveiled a series of products, headlined by the GH200 Grace Hopper Superchip platform, dubbed the "GPU King".

Nvidia's product releases have always reflected the era they arrive in. Five years ago, Nvidia redefined computer graphics by bringing artificial intelligence and real-time ray tracing to GPUs. That was later followed by the NVIDIA HGX H100, which integrates 8 GPUs and 1 trillion transistors.

Today, five years on, as the rapid development of artificial intelligence places ever higher computing demands on chipmakers, Nvidia has once again come out in force: the GH200 is built for the era of accelerated computing and generative AI, with the goal of handling the world's most complex generative AI workloads.

Beyond the compute-focused superchip itself, Nvidia also released a series of updates spanning the entire generative AI ecosystem, making a forceful case that its product lineup is currently the strongest engine for AI training.

GH200 & NVIDIA NVLink™ Servers

There is no doubt that the highlight of this release is the GH200. Nvidia has paired the Grace Hopper Superchip, as it is formally called, with HBM3e memory, making it the world's first HBM3e processor. The new HBM3e memory is reportedly 50% faster than current HBM3 and delivers a combined bandwidth of 10TB/sec, allowing the new platform to run models 3.5 times larger than the previous version.

And that's not all. As Nvidia's trump-card chip, the GH200 integrates the Arm-based NVIDIA Grace CPU with the Hopper GPU architecture, positioning it squarely against the H100, currently the top of the AI chip market.

The difference is that the H100 has 80GB of memory, while the new GH200 offers up to 141GB and is paired with a 72-core Arm CPU. With this optimized configuration, performance improves substantially, and the GH200 can also handle AI inference, effectively supporting generative AI applications such as ChatGPT.

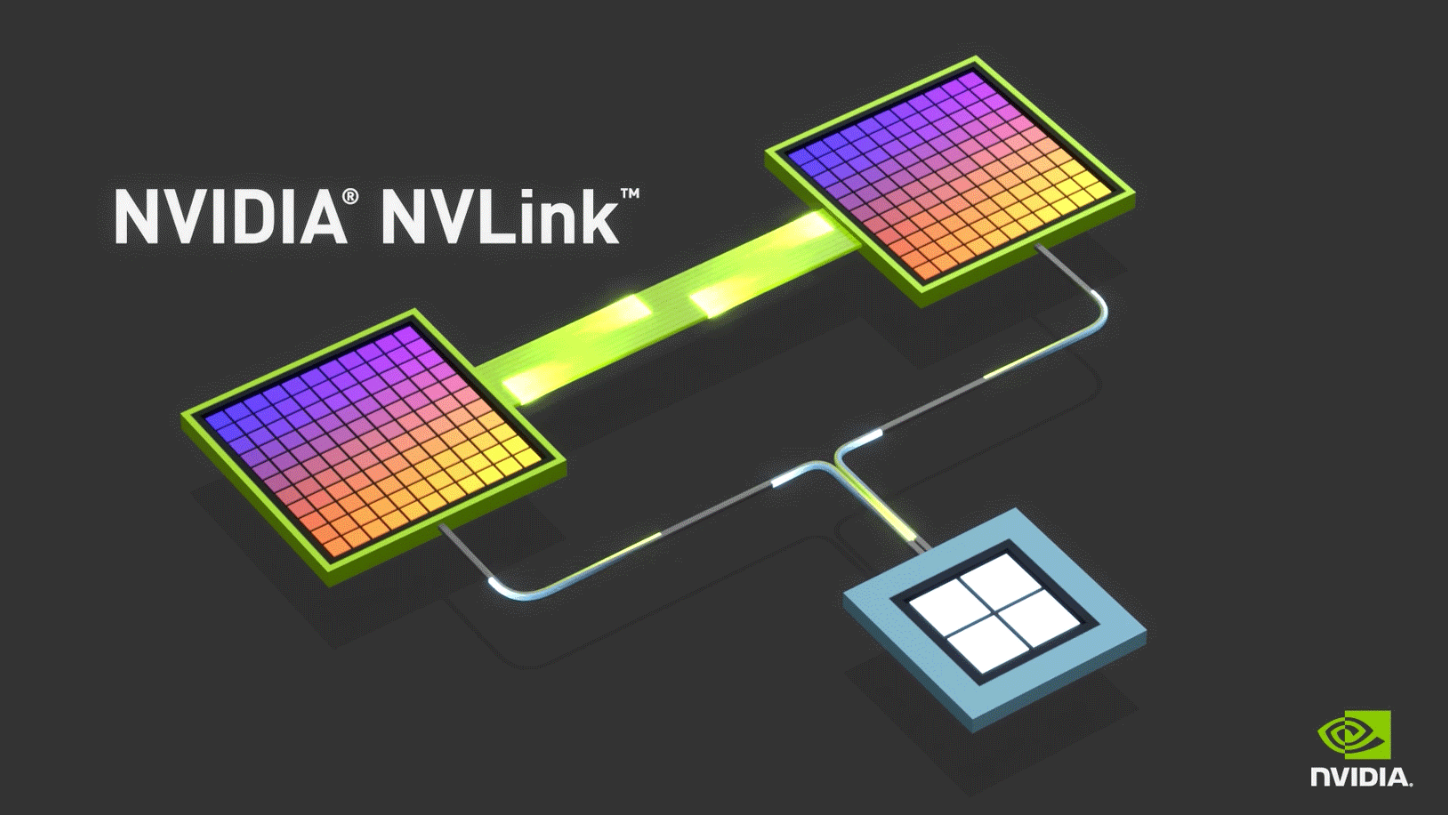

Building on this performance, Nvidia extended the GH200 with NVIDIA NVLink™-based servers. NVLink allows the Grace Hopper Superchip to connect to other superchips, a technology that gives the GPU full access to CPU memory.

Nvidia said in its Technical Blog that it is developing a new dual-GH200 server system based on NVIDIA MGX, which integrates two next-generation Grace Hopper Superchips. In the dual-GH200 server, the CPUs and GPUs are linked by a fully coherent memory interconnect, so the system can operate as a single super GPU, providing 144 Grace CPU cores, 8 petaflops of AI performance and 282GB of HBM3e memory, suitable for giant generative AI models.
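The dual-GH200 headline numbers follow from doubling the per-superchip figures given earlier (a 72-core Grace CPU and up to 141GB of HBM3e per GH200). Below is a minimal Python sketch of that arithmetic, assuming capacity simply adds across superchips; the constant and function names are illustrative only and not part of any Nvidia API.

```python
# Per-superchip figures as reported in the article (GH200 with HBM3e).
GRACE_CORES_PER_CHIP = 72   # 72-core Arm-based Grace CPU
HBM3E_GB_PER_CHIP = 141     # up to 141GB of HBM3e per GH200
H100_HBM_GB = 80            # H100 memory, for comparison

def dual_gh200_totals(chips: int = 2) -> dict:
    """Aggregate Grace CPU cores and HBM3e capacity across superchips.

    Assumes (illustratively) that cores and memory add linearly.
    """
    return {
        "grace_cores": chips * GRACE_CORES_PER_CHIP,
        "hbm3e_gb": chips * HBM3E_GB_PER_CHIP,
    }

totals = dual_gh200_totals()
print(totals)  # {'grace_cores': 144, 'hbm3e_gb': 282}
print(f"Per-chip memory vs. H100: {HBM3E_GB_PER_CHIP / H100_HBM_GB:.2f}x")  # 1.76x
```

Doubling reproduces the 144 cores and 282GB quoted for the dual system, and each superchip alone carries roughly 1.76 times the H100's memory.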

Nvidia currently plans to sell two versions of the GH200: one containing the two chips, which customers can integrate into their own systems, and a complete server system that combines two Grace Hopper designs.

RTX Lineup & RTX Workstation

In addition to the powerful Grace Hopper chip, Nvidia also aims to tie mainstream generative AI workflows to its own products, and has launched three new GPUs: the RTX 5000, RTX 4500 and RTX 4000.

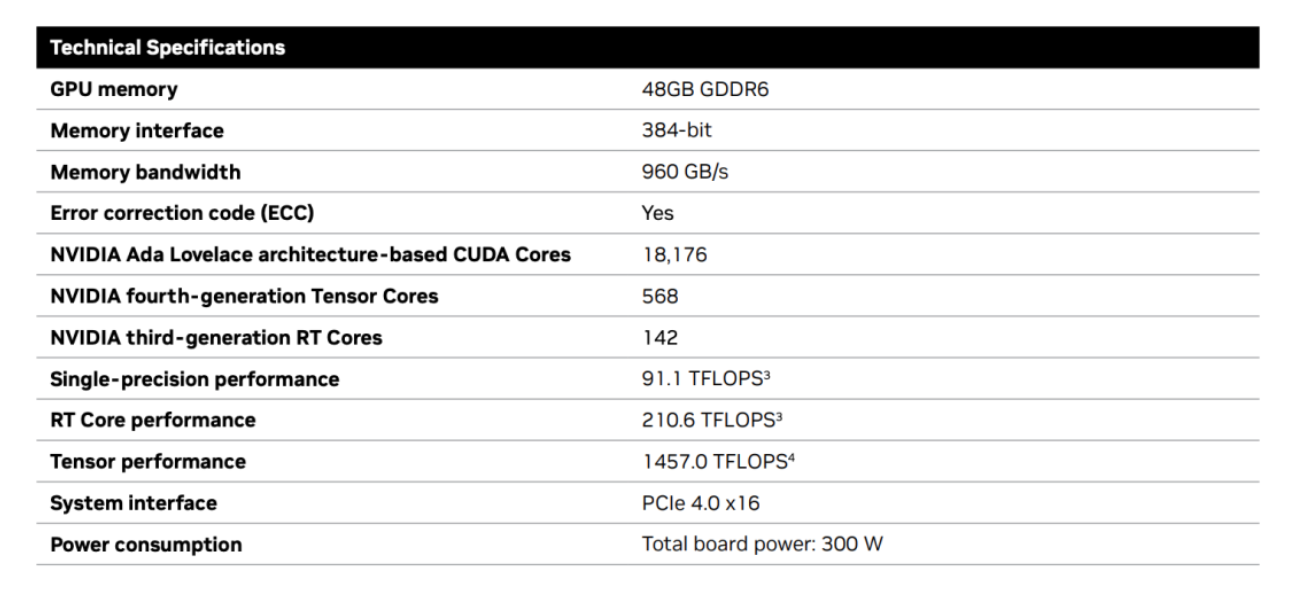

Last year, Nvidia's RTX 6000 set the industry benchmark as soon as it was released, dominating the field with 48GB of video memory, 18,176 CUDA cores, 568 Tensor cores, 142 RT cores and up to 960GB/s of memory bandwidth.

Following that flagship, the new cards did not disappoint: the RTX 5000 has 32GB of video memory, 12,800 CUDA cores, 400 Tensor cores and 100 RT cores; the RTX 4500 has 24GB of video memory, 7,680 CUDA cores, 240 Tensor cores and 60 RT cores; and the RTX 4000 has 20GB of video memory, 6,144 CUDA cores, 192 Tensor cores and 48 RT cores.
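The quoted core counts are internally consistent. Assuming the Ada Lovelace streaming multiprocessor (SM) layout of 128 CUDA cores, 4 Tensor cores and 1 RT core per SM (an architectural detail not stated in the article), each card's Tensor and RT core counts can be derived from its CUDA core count. A small Python sketch; the helper names are illustrative:

```python
# Assumption: each Ada Lovelace SM contains 128 CUDA cores,
# 4 Tensor cores and 1 RT core.
CUDA_PER_SM = 128
TENSOR_PER_SM = 4
RT_PER_SM = 1

# CUDA core counts quoted in the article.
CUDA_CORES = {
    "RTX 6000": 18_176,
    "RTX 5000": 12_800,
    "RTX 4500": 7_680,
    "RTX 4000": 6_144,
}

def derived_counts(cuda_cores: int) -> tuple:
    """Return (SMs, Tensor cores, RT cores) implied by a CUDA core count."""
    sms, remainder = divmod(cuda_cores, CUDA_PER_SM)
    if remainder:
        raise ValueError("CUDA core count is not a whole number of SMs")
    return sms, sms * TENSOR_PER_SM, sms * RT_PER_SM

for name, cores in CUDA_CORES.items():
    sms, tensor, rt = derived_counts(cores)
    print(f"{name}: {sms} SMs, {tensor} Tensor cores, {rt} RT cores")
```

For the RTX 6000, 5000 and 4500, the derived numbers match the quoted Tensor and RT core counts exactly, which suggests the four cards differ mainly in how many SMs are enabled.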

Built around these GPUs, Nvidia aims to bring enterprise customers the latest AI graphics and real-time rendering technology, and has launched RTX Workstation as a one-stop solution. RTX Workstation supports up to four RTX 6000 GPUs, enough to fine-tune GPT3-40B on 860 million tokens in 15 hours and to let Stable Diffusion XL generate 40 images per minute, five times faster than the original 4090.
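The workstation figures translate into simple throughput numbers. The following rough Python sketch assumes the fine-tuning run proceeds at a constant rate for the full 15 hours (ignoring setup and checkpointing) and reads "five times faster" as five times the images per minute; both readings are assumptions, not stated benchmarks.

```python
# Figures quoted for a workstation with four RTX 6000 GPUs.
FINETUNE_TOKENS = 860_000_000  # GPT3-40B fine-tuning run
FINETUNE_HOURS = 15
SDXL_IMAGES_PER_MIN = 40
SPEEDUP = 5                    # "five times faster" than the comparison GPU

# Assumes a constant processing rate over the whole run.
tokens_per_second = FINETUNE_TOKENS / (FINETUNE_HOURS * 3600)
# Average time to generate one Stable Diffusion XL image.
seconds_per_image = 60 / SDXL_IMAGES_PER_MIN
# Implied throughput of the comparison GPU, if speedup = ratio of images/min.
baseline_images_per_min = SDXL_IMAGES_PER_MIN / SPEEDUP

print(f"{tokens_per_second:,.0f} tokens/s")                          # 15,926 tokens/s
print(f"{seconds_per_image:.1f} s per image")                        # 1.5 s per image
print(f"{baseline_images_per_min:.0f} images/min on the baseline")   # 8 images/min
```

In other words, the quoted numbers correspond to roughly 16,000 tokens per second of fine-tuning and about 1.5 seconds per SDXL image.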

AI Workbench

Beyond hardware, Nvidia also put considerable effort into software this time.

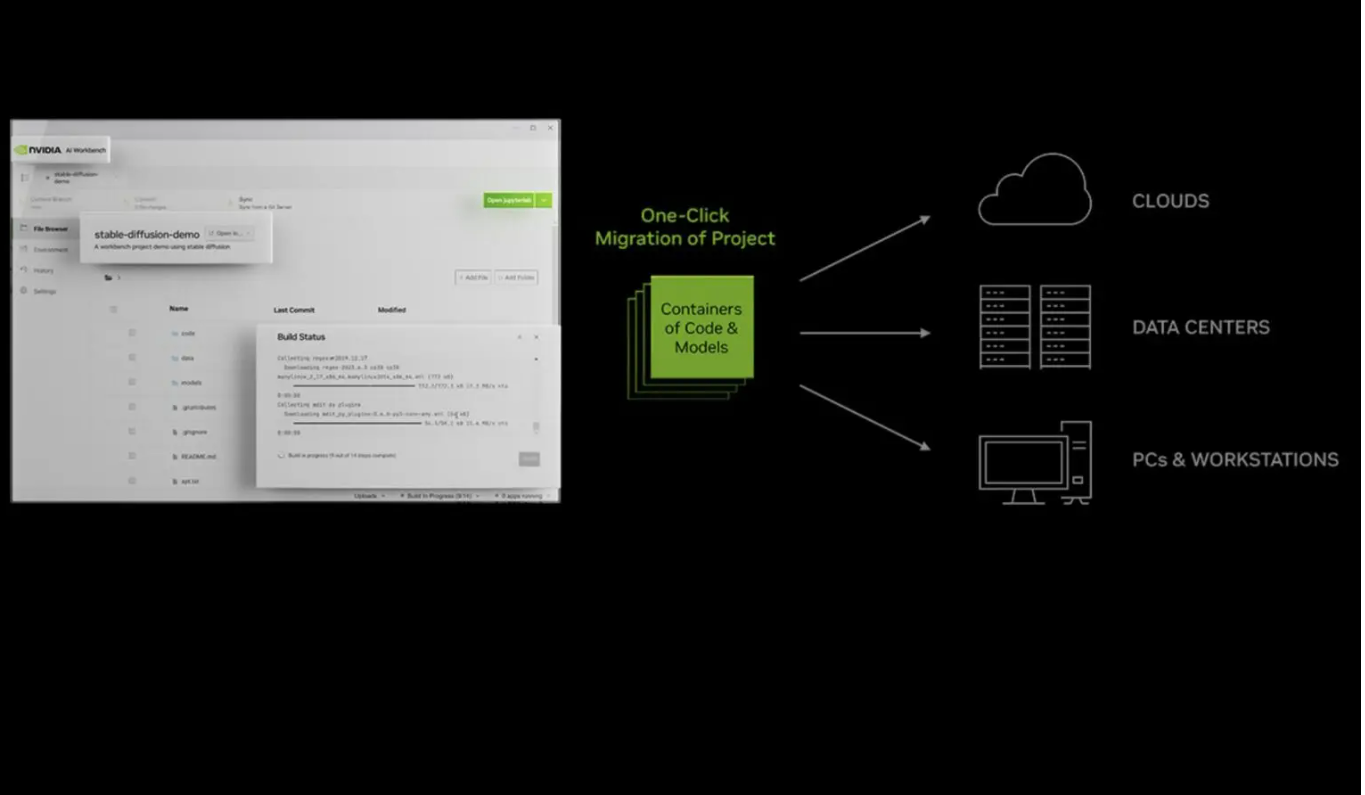

At SIGGRAPH, Huang also launched a new AI platform, NVIDIA AI Workbench, which helps users develop and deploy generative AI models and then scale them to almost any data center, public cloud or NVIDIA DGX™ Cloud.

According to Nvidia, enterprise AI development today is cumbersome and complex: developers must hunt for the right frameworks and tools across multiple libraries, and the process becomes even harder when a project has to be migrated from one infrastructure to another.

To address this, AI Workbench provides a simple user interface that lets developers pull models, frameworks, SDKs and libraries from open-source resources into a unified workspace that runs on a local computer and connects to Hugging Face, GitHub and other popular open-source or commercial AI code repositories. In other words, developers can reach most of the resources needed for AI development from a single interface instead of juggling multiple browser windows.

Nvidia says the benefits of AI Workbench include:

- Easy to use: AI Workbench simplifies development by providing a single platform to manage data, models and compute resources, enabling collaboration across machines and environments.

- Integrated AI development tools and repositories: AI Workbench integrates with GitHub, NVIDIA NGC, Hugging Face and other services, and developers can use tools such as JupyterLab and VS Code to develop across different platforms and infrastructures.

- Enhanced collaboration: The project structure helps automate complex tasks such as version control, container management and handling sensitive information, while also supporting cross-team collaboration.

- Access to accelerated computing resources: AI Workbench uses a client-server deployment model, so users can start developing on local compute resources in their workstations and move to data center or cloud resources as their training jobs scale up.

AI infrastructure providers such as Dell, HP, Lambda and Lenovo have adopted AI Workbench and see its potential to get more out of the latest multi-GPU systems. In practice, Workbench lets users move from development on a single PC to larger-scale environments and take projects into production while the software stays the same.


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.