Meta debuts next-generation AI chip with three times the performance of its predecessor!

Meta stated that early results show that, across the four key models it evaluated, the new-generation MTIA chip delivers three times the performance of the first-generation chip.

On April 10th, Meta announced the next generation of its Meta Training and Inference Accelerator (MTIA), a family of custom chips that Meta designs specifically for AI workloads.

Last May, Meta launched MTIA v1, the company's first-generation AI inference accelerator. MTIA v1 was designed specifically for Meta's recommendation models, and the chip family is meant to help improve training efficiency and make real-world inference tasks easier.

Meta stated that the new MTIA more than doubles the compute and memory bandwidth of its previous solution while remaining closely tailored to Meta's workloads.

According to Meta, the new MTIA consists of an 8x8 grid of processing elements (PEs). These PEs deliver substantially higher dense compute performance (3.5 times that of MTIA v1) and sparse compute performance (7 times that of MTIA v1). The new MTIA design also adopts an improved network-on-chip (NoC) architecture that doubles bandwidth and lets different PEs coordinate with low latency.

To support the new chip, Meta has also developed a large rack-mounted system that holds up to 72 accelerators: three chassis, each containing 12 boards, with two accelerators on each board.
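As a quick sanity check on the rack figures, the short Python sketch below simply multiplies out the configuration Meta described (3 chassis, 12 boards per chassis, 2 accelerators per board). The constant and function names are illustrative only and do not come from any Meta publication.

```python
# Rack configuration as described by Meta; the constant and function
# names here are illustrative, not from any Meta publication.
CHASSIS_PER_RACK = 3
BOARDS_PER_CHASSIS = 12
ACCELERATORS_PER_BOARD = 2


def accelerators_per_rack(chassis: int = CHASSIS_PER_RACK,
                          boards_per_chassis: int = BOARDS_PER_CHASSIS,
                          accelerators_per_board: int = ACCELERATORS_PER_BOARD) -> int:
    """Total accelerators in one rack: chassis x boards x accelerators per board."""
    return chassis * boards_per_chassis * accelerators_per_board


print(accelerators_per_rack())  # prints 72
```

The product matches the stated maximum of 72 accelerators per rack.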

In addition, Meta has upgraded the fabric between accelerators, and between the host and the accelerators, to PCIe Gen5 to increase system bandwidth and scalability.

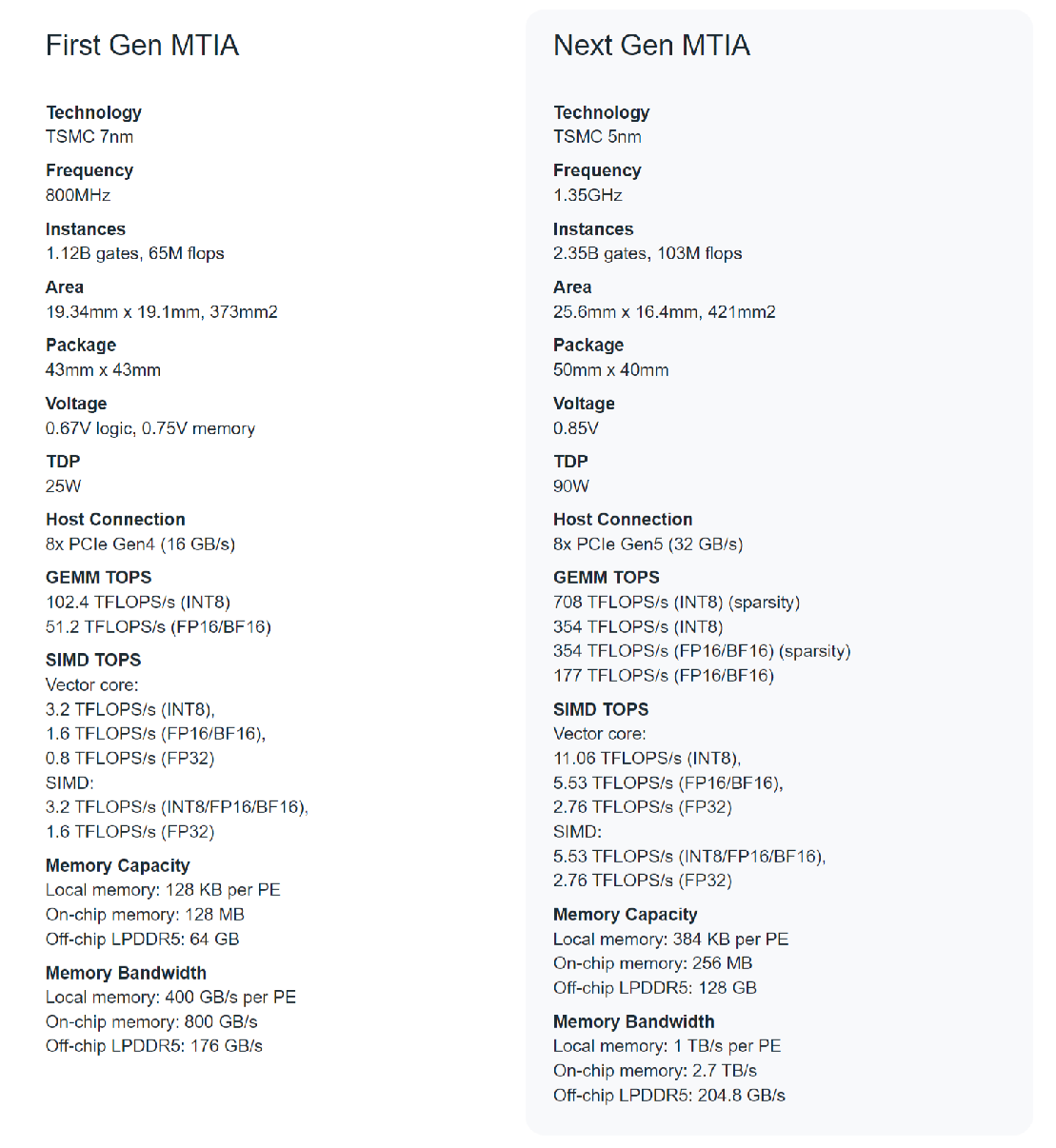

Comparing the two generations, the new MTIA chip is built on TSMC's 5nm process, with 256MB of on-chip memory and a clock frequency of 1.35GHz, while MTIA v1 is built on TSMC's 7nm process, with 128MB of on-chip memory and a clock frequency of 800MHz.

The new chip's 1.35GHz clock frequency is much higher than MTIA v1's 800MHz, but it also draws more than three times as much power (90W versus 25W).
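The generation-over-generation ratios implied by these published specifications can be worked out directly. This Python sketch uses only the figures quoted above (clock in MHz, on-chip memory in MB, power in watts); the dictionary keys and helper name are illustrative labels, not Meta's. Note that a clock or power ratio on its own says nothing about delivered performance, which depends on the workload.

```python
# Spec figures as quoted in the article; keys are illustrative labels.
MTIA_V1 = {"clock_mhz": 800, "on_chip_memory_mb": 128, "power_w": 25}
MTIA_NEXT = {"clock_mhz": 1350, "on_chip_memory_mb": 256, "power_w": 90}


def spec_ratios(new: dict, old: dict) -> dict:
    """Return new/old for every spec field present in both dictionaries."""
    return {key: new[key] / old[key] for key in old if key in new}


for key, ratio in spec_ratios(MTIA_NEXT, MTIA_V1).items():
    print(f"{key}: {ratio:.2f}x")
# clock_mhz: 1.69x
# on_chip_memory_mb: 2.00x
# power_w: 3.60x
```

The 3.6x power ratio is what "more than three times as much power" refers to, while the clock rises by about 1.7x and on-chip memory doubles.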

Meta stated that early results show that, across the four key models it evaluated, the new-generation MTIA chip delivers three times the performance of the first-generation chip. At the platform level, with twice as many devices and a powerful dual-socket CPU, Meta achieved six times the model serving throughput and 1.5 times the performance per watt of the first-generation MTIA system.
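Taken together, the platform-level figures allow a rough back-of-the-envelope reading. The Python sketch below assumes, purely as an illustration and not as anything Meta has stated, that performance per watt means serving throughput divided by system power on the same workloads. Under that assumption, 6x throughput at 1.5x performance per watt implies roughly 4x system power, and 6x throughput across twice as many devices works out to about 3x per device, in line with the chip-level result. The function name is hypothetical.

```python
# Back-of-the-envelope reading of Meta's platform-level figures.
# Assumption (not stated by Meta): performance per watt = serving
# throughput / system power, measured on the same workloads.


def implied_platform_ratios(throughput_gain: float,
                            perf_per_watt_gain: float,
                            device_count_ratio: float) -> tuple[float, float]:
    """Return (implied power ratio, implied per-device throughput gain)."""
    power_ratio = throughput_gain / perf_per_watt_gain
    per_device_gain = throughput_gain / device_count_ratio
    return power_ratio, per_device_gain


power_ratio, per_device_gain = implied_platform_ratios(6.0, 1.5, 2.0)
print(f"implied system power: {power_ratio:.1f}x")                # 4.0x
print(f"implied per-device throughput: {per_device_gain:.1f}x")   # 3.0x
```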

Meta stated that the next-generation MTIA "has proven to be highly complementary to commercial GPUs in providing the best combination of performance and efficiency for specific workloads."

Meta points out that the MTIA series of chips will become a long-term investment for the company, aimed at building and scaling the most powerful and efficient infrastructure for Meta's unique artificial intelligence workloads.

Meta also stated that the company is designing custom chips internally to work in conjunction with its existing infrastructure and new, more advanced hardware that may be utilized in the future, including next-generation GPUs. "Realizing our ambition for custom chips means investing not only in computing chips, but also in memory bandwidth, network and capacity, and other next-generation hardware systems."

However, Meta did not specify whether the company is currently using the next-generation MTIA to handle generative AI training workloads. Meta only stated that it is currently "working on multiple plans" to expand the scope of MTIA, including support for GenAI workloads.

Last October, Meta CEO Mark Zuckerberg said that "artificial intelligence will be our biggest investment area in 2024." The company plans to spend up to $35 billion building out its artificial intelligence infrastructure, including data centers and hardware.

Despite these ambitions, Meta faces considerable pressure from peers that are also developing their own chips.

Last November, Microsoft launched two in-house chips, the Azure Maia 100 AI accelerator and the Azure Cobalt 100 CPU, to power Azure AI and Microsoft Copilot services.

At the end of last year, alongside its new Gemini large language model (LLM), Google also released TPU v5p, its fifth-generation custom chip for training AI models. Google stated that TPU v5p can train LLMs such as GPT-3 175B. This week, Google began rolling out TPU v5p to its Google Cloud customers.


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.