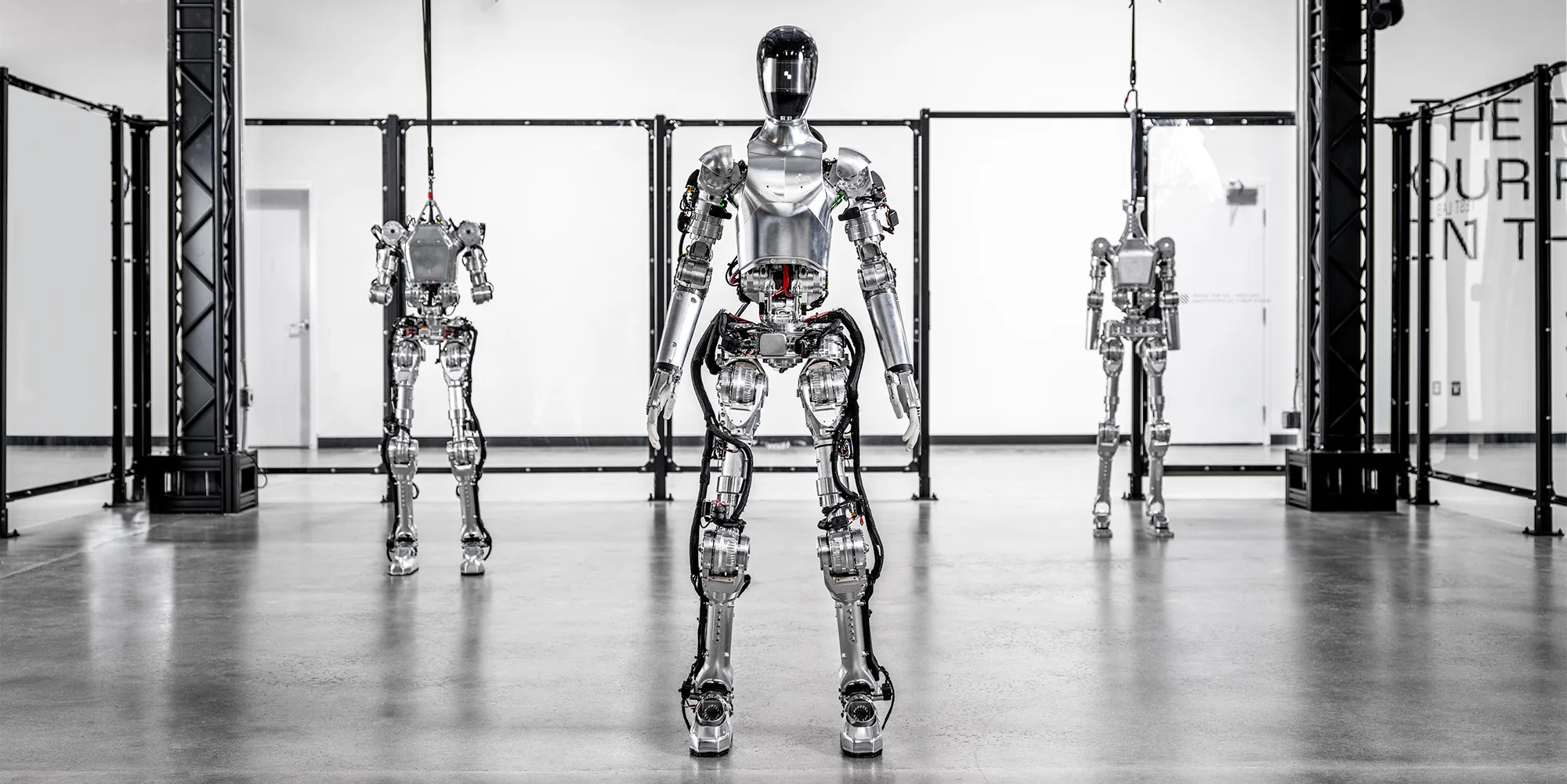

Figure's "Humanoid GPT" debuts: backed by OpenAI's large model and already being tested in a factory

On March 14, local time, high-profile start-up Figure released a demo of its robot, Figure 01. It is the first result of the company's collaboration with OpenAI to enhance the capabilities of humanoid robots.

Although it uses only a single neural network, a series of official videos shows Figure 01 interacting with humans and understanding and executing their instructions, with the whole sequence running smoothly.

Last month, Figure raised about $675 million from investors including OpenAI, Microsoft, and Nvidia, lifting its valuation to $2.6 billion. The funding is meant to develop humanoid robots that supplement the workforce in repetitive and dangerous warehouse and retail jobs.

At the same time, the company signed a cooperation agreement with OpenAI to extend the capabilities of multimodal large models (vision-language models, VLMs) to robot perception, reasoning, and interaction, that is, "embodied intelligence."

The Figure 01 demo arrives just 13 days after the company closed its Series B funding round.

Figure 01 Video Demo

In the video released by Figure, Figure 01 smoothly hands over an apple, collects trash into a basket, and places cups and dishes on a drying rack.

Importantly, most of Figure 01's actions and answers respond to open-ended questions and requests from the person speaking, and the robot works out its own solution. In other words, it can talk, reason, and learn, which makes it seem more "human" than the average robot.

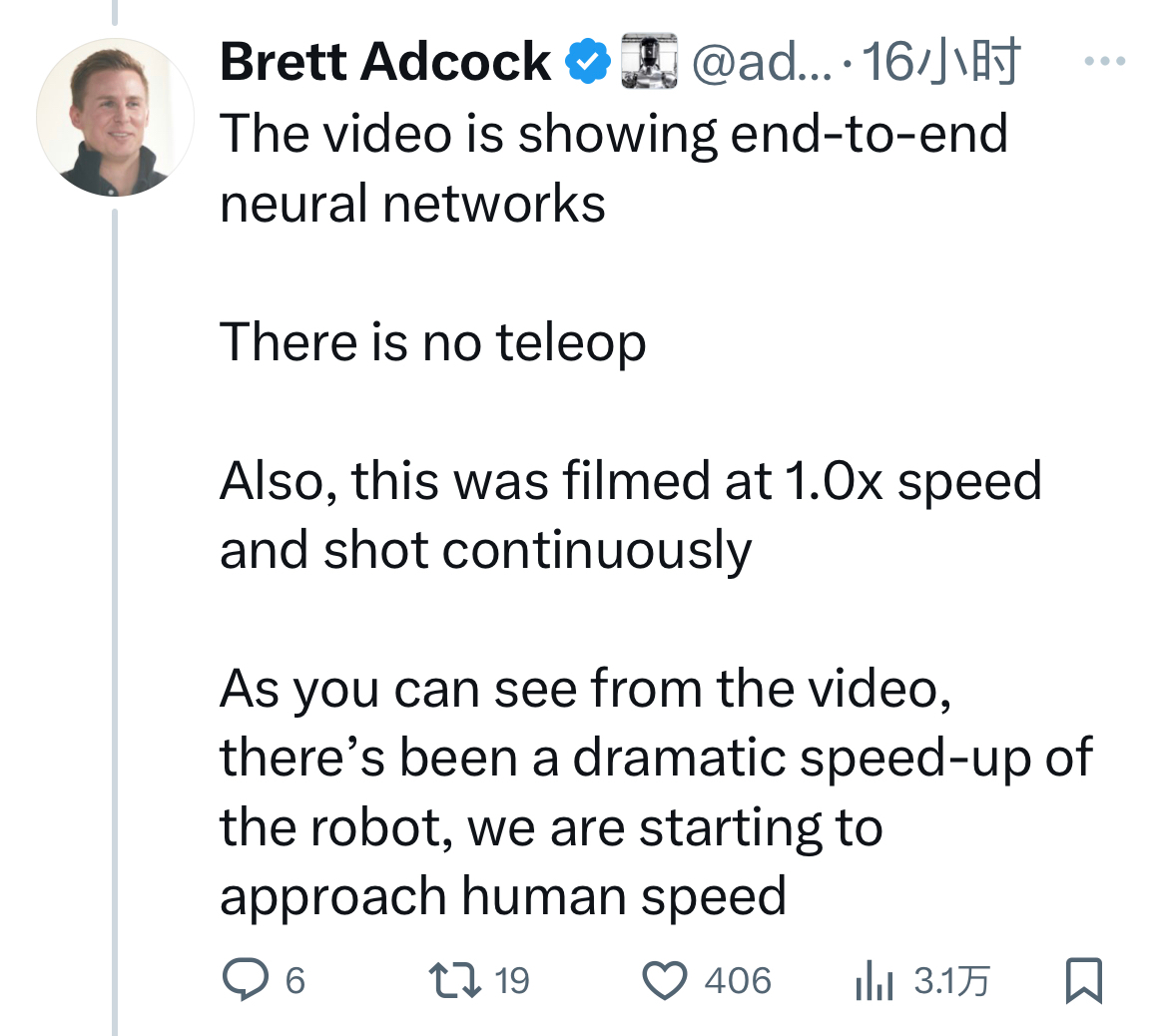

At the beginning of the video, Figure states that the robot's behavior is driven by speech-to-speech reasoning and end-to-end neural networks, and that the whole scene was shot in one continuous take with no speed-up or editing.

Figure founder Brett Adcock also stressed in a post on X that all of Figure 01's behaviors are learned rather than teleoperated, and that the robot is significantly faster than before and gradually approaching human speed.

Figure 01 "The Most Powerful Brain"

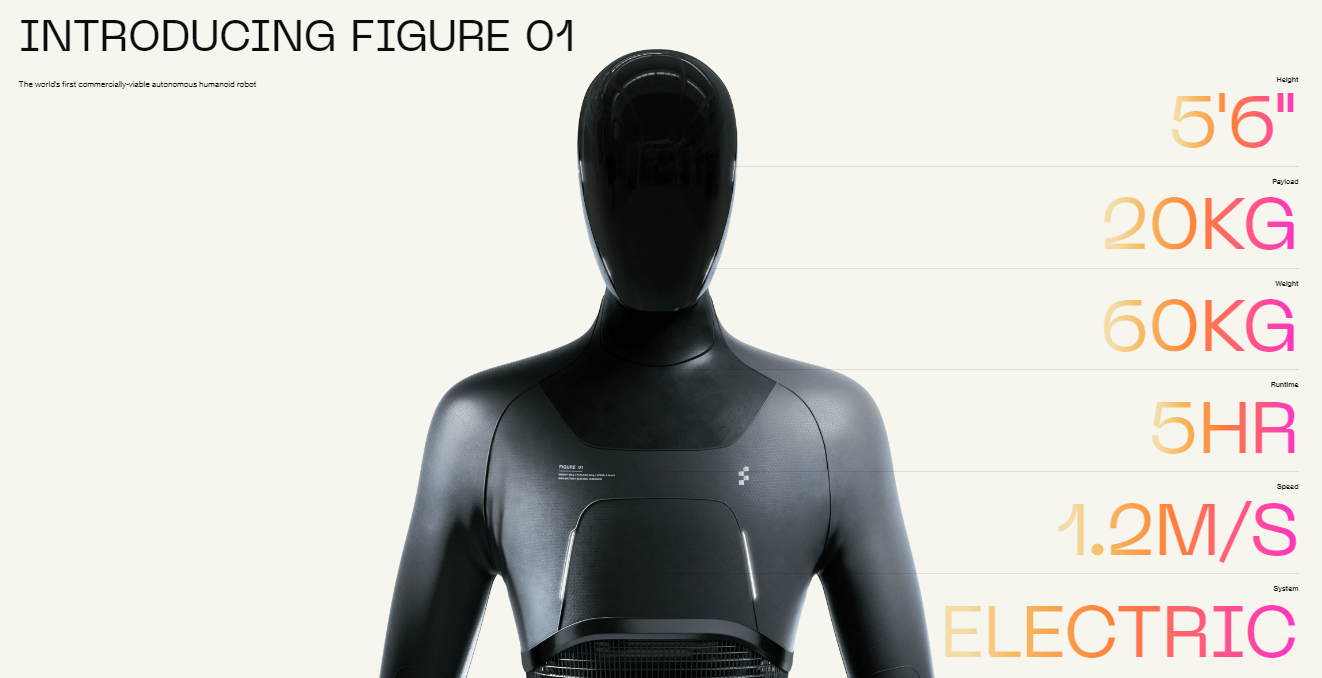

Figure describes Figure 01 as the world's first commercially viable general-purpose humanoid robot. The robot is 5 feet 6 inches tall (about 1.68 meters), weighs 60 kg, can carry a payload of 20 kg, has a battery life of 5 hours, and can move at 1.2 meters per second.

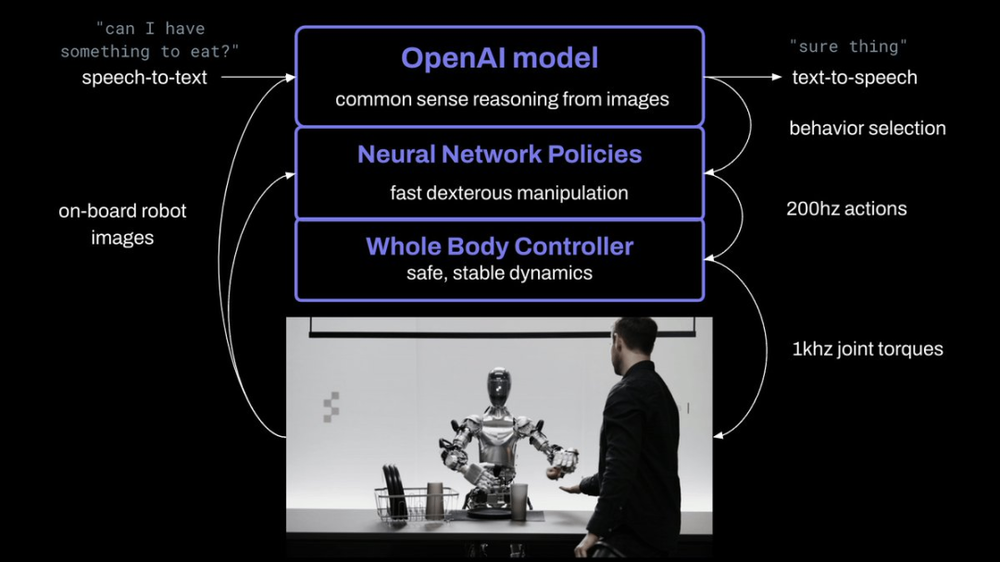

In Figure 01, the OpenAI large model provides high-level visual and language intelligence, while Figure's neural networks deliver fast, low-level, dexterous robot actions.

Earlier this month, Figure announced it would develop an AI model for the next generation of humanoid robots based on OpenAI's latest GPT model, trained specifically on robot motion data collected by Figure, so that its humanoid robots can talk to people, see their surroundings, and perform complex tasks.

After the video was released, Figure senior AI engineer Corey Lynch explained the technical principles behind it on X: Figure 01 can describe its visual experience, plan future actions, reflect on its memory, and explain its reasoning verbally.

Specifically, the robot speaks through a large text-to-speech model. Figure feeds images from the robot's cameras, together with text transcribed from speech captured by its microphones, into a multimodal model trained by OpenAI that understands both images and text. The model then processes all of this information and generates a language response as text.

At the execution stage, the same model decides which learned closed-loop behavior to run in response to a given instruction, loading the corresponding neural network weights onto the GPU (graphics processing unit) and executing the resulting policy.

Brett Adcock also mentioned on X that all of Figure 01's key components, including the motors, middleware, operating system, sensors, and mechanical structure, were designed and integrated by Figure's own engineers.
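The two stages described above (a model interprets the request, then selects and runs a learned behavior) can be illustrated with a toy sketch. This is not Figure's or OpenAI's code: the `Observation` type, the behavior names, the `KEYWORDS` table, and the keyword matching that stands in for the multimodal model are all assumptions made for illustration, and plain Python functions stand in for neural network policies whose weights would be loaded onto a GPU.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set, Tuple


@dataclass
class Observation:
    scene_text: str   # hypothetical stand-in for what the cameras see
    transcript: str   # microphone speech, already transcribed to text


# Hypothetical behavior library. In the system described in the article,
# each entry would be a learned closed-loop policy, not a plain function.
def hand_over_apple(obs: Observation) -> str:
    return "handing over the apple"


def collect_trash(obs: Observation) -> str:
    return "collecting trash into the basket"


def place_dishes(obs: Observation) -> str:
    return "placing cups and dishes on the drying rack"


def idle(obs: Observation) -> str:
    return "waiting"


POLICIES: Dict[str, Callable[[Observation], str]] = {
    "hand_over_apple": hand_over_apple,
    "collect_trash": collect_trash,
    "place_dishes": place_dishes,
    "idle": idle,
}

# Assumed keyword table; a real system would use the multimodal model here.
KEYWORDS: Dict[str, Set[str]] = {
    "hand_over_apple": {"eat", "apple", "hungry"},
    "collect_trash": {"trash", "garbage"},
    "place_dishes": {"dishes", "cup", "cups", "plate"},
}


def select_behavior(obs: Observation) -> str:
    """Toy replacement for the model's decision: match words in the request."""
    cleaned = obs.transcript.lower()
    for mark in "?.,!":
        cleaned = cleaned.replace(mark, " ")
    words = set(cleaned.split())
    for name, keys in KEYWORDS.items():
        if words & keys:
            return name
    return "idle"


def respond(obs: Observation) -> Tuple[str, str]:
    """Pick a behavior, then 'load' and run the matching policy."""
    name = select_behavior(obs)
    action = POLICIES[name](obs)
    return name, action
```

For example, `respond(Observation("apple on the table", "Can I have something to eat?"))` selects the hypothetical `hand_over_apple` behavior. The point of the sketch is the separation of concerns: one component turns an open-ended request into a choice of behavior, and a separate low-level policy carries it out.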

The future is here: embodied intelligence moves into deployment

Nvidia founder and CEO Jensen Huang once said: "Embodied intelligence will lead the next wave of AI."

Figure was founded in 2022 and had already made considerable progress in AI before it began working with OpenAI. At that time, Brett Adcock said Figure would spend the next one to two years focused on developing humanoid robots, covering AI systems, low-level control, and other functions.

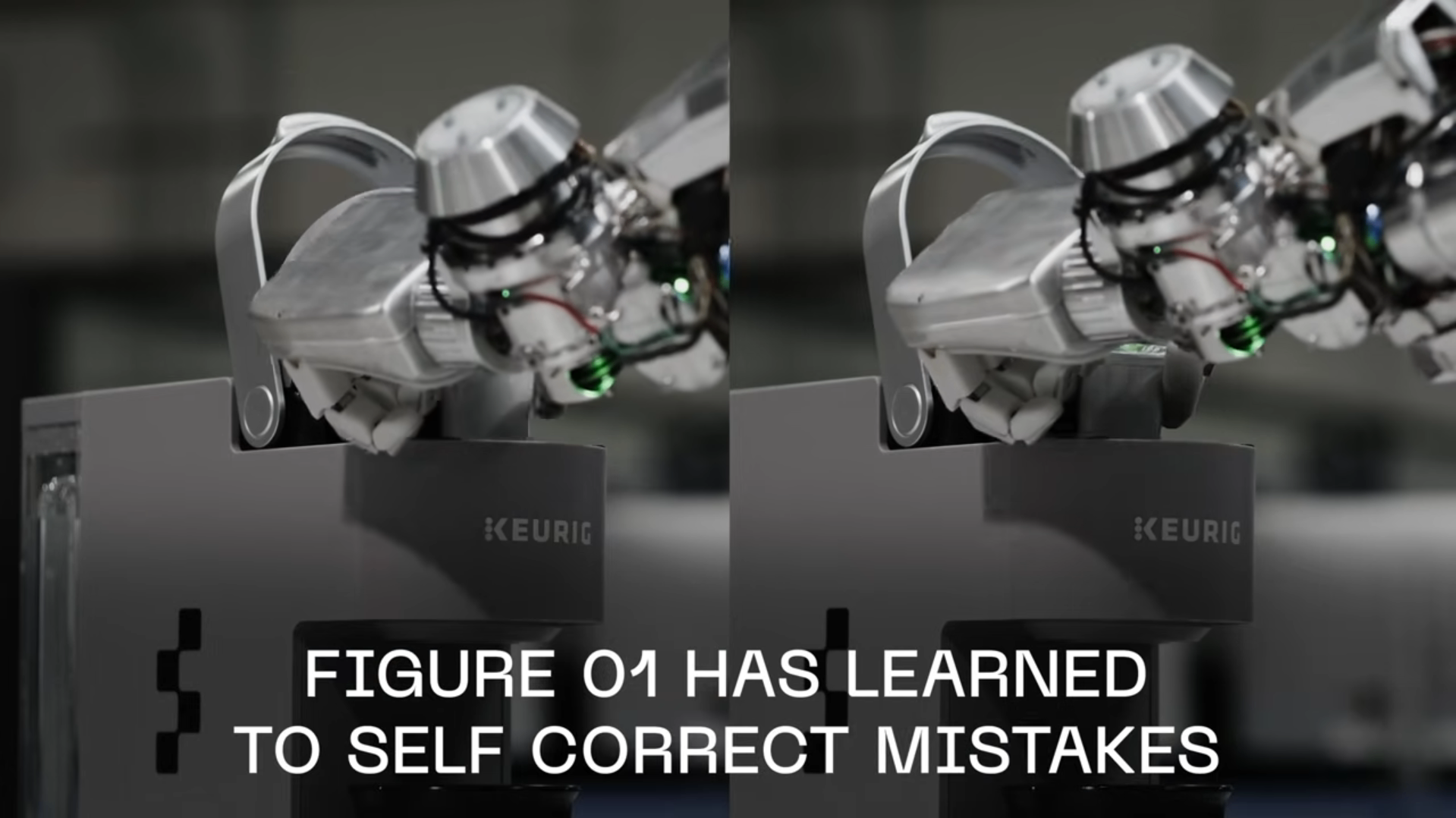

In January 2024, Figure 01 gained the ability to correct its own mistakes through an end-to-end neural network and learned to make coffee after 10 hours of training. By February, Figure 01 was already carrying out handling tasks in a warehouse, with autonomous navigation, object recognition, and the ability to prioritize tasks, though at only 16.7% of human speed.

In addition, Figure is actively moving toward real-world deployment. It recently signed a commercial agreement with BMW to deploy general-purpose robots in automotive manufacturing, and Figure 01 has begun testing at a plant in South Carolina, USA.

Although many AI researchers believe that widespread adoption of general-purpose robots is still decades away, robotics expert Eric Jang cautions: "Don't forget that ChatGPT was born almost overnight."

With OpenAI's large model behind it, Figure 01 may carry a higher price. Figure has not yet disclosed any pricing, although Brett Adcock has expressed optimism that the price of Figure 01 can be brought down.

Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.