MAI-1: Microsoft's Own In-House LLM in the Works

Microsoft is developing a new in-house LLM, MAI-1, with around 500 billion parameters, which may debut this month.

AI has clearly become a top priority for Microsoft. After partnering with OpenAI to develop industry-leading LLMs, Microsoft appears unwilling to stop there.

Microsoft Research has released a series of small models, such as Phi-3, to reduce its reliance on advanced models like GPT-3.5 and GPT-4 while staying competitive with AI startups and open-source projects. Meanwhile, Microsoft has set up the Microsoft AI division, tasked with unifying its consumer AI products, such as Copilot, Bing, and Edge, and strengthening the company's own AI research and development.

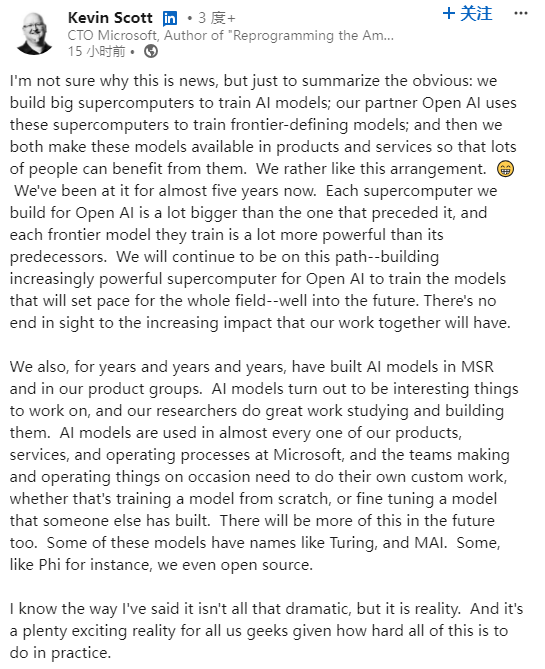

According to reports, Microsoft is developing a new LLM that puts it in a fresh round of competition with tech giants such as Google (Alphabet) and OpenAI. Codenamed MAI-1, the model has roughly 500 billion parameters and could be previewed as early as this month's Build developer conference. Microsoft's Chief Technology Officer, Kevin Scott, subsequently confirmed the news in a post on LinkedIn.

Microsoft's AI development follows a "dual-track" strategy: building small models that run locally on mobile devices while continuing to push ahead with large, cloud-hosted LLMs.

At the end of last month, Microsoft launched Phi-3-mini, a lightweight AI model with 3.8 billion parameters trained on 3.3 trillion tokens. Microsoft claims its performance is comparable to models like GPT-3.5 and that it is small enough to run on mobile devices. Beyond its accuracy, the model's low cost makes it especially attractive to customers.

However, even the largest Phi model has only 14 billion parameters. At approximately 500 billion parameters, MAI-1 is far larger than Microsoft's earlier small open-source models and would compete directly with advanced models such as Google's Gemini, Amazon's Titan, and OpenAI's GPT-4. As a result, it will require more computing power and more training data, making it considerably more expensive to build.
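To put the size gap in concrete terms, the back-of-the-envelope sketch below estimates how much memory the model weights alone would occupy. It assumes 16-bit (2-byte) weights; that precision is an assumption, not a reported figure, and the estimate ignores activations, optimizer state, and serving overhead. The parameter counts are the ones cited in this article.

```python
# Rough weight-memory estimate for the models mentioned above.
# Assumption: weights stored at 16-bit precision (2 bytes per parameter).
# Activations, optimizer state, and KV cache are deliberately ignored.
BYTES_PER_PARAM = 2

# Parameter counts as cited in the article.
MODELS = {
    "Phi-3-mini": 3.8e9,
    "Largest Phi model": 14e9,
    "MAI-1 (reported)": 500e9,
}


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Return memory needed just to hold the weights, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    baseline = MODELS["Phi-3-mini"]
    for name, params in MODELS.items():
        # Size relative to Phi-3-mini, to show the scale gap.
        ratio = params / baseline
        print(f"{name:>18}: {weight_memory_gb(params):8.1f} GB  ({ratio:6.1f}x Phi-3-mini)")
```

Even under these simplified assumptions, MAI-1's weights alone would need on the order of a terabyte of memory, far more than a single GPU holds. This is consistent with the point that the model will be much costlier to train and serve.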

Development of the model is being overseen by Mustafa Suleyman, a co-founder of DeepMind. In 2010, Suleyman co-founded the AI lab DeepMind with partners; Google later acquired it and made it its AI research division. In 2022, Suleyman left Google and co-founded the AI startup Inflection AI.

In addition to hiring Suleyman and key members of Inflection AI, Microsoft paid about $650 million, largely to license Inflection's technology and intellectual property. According to two Microsoft employees, "MAI-1 is different from the models previously released by Inflection, but the training process may involve its training data and technology."

To train the model, Microsoft is aggressively stockpiling computing resources and has already reserved a large number of servers equipped with Nvidia GPUs. It plans to accumulate 1.8 million AI chips by the end of this year and is projected to spend approximately $100 billion on GPUs and data centers by the end of fiscal year 2027.

The company has also been assembling training data for the model, including text generated by GPT-4 and datasets from external sources such as public internet data.


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.