100,000 NVIDIA H100! Musk Supercomputing Cluster Officially Launched

In May of this year, Musk quickly began construction of the cluster. To that end, he spent heavily on a large number of Nvidia "Hopper" H100 GPUs and placed a substantial hardware order with the technology company Supermicro.

Have you seen a training cluster with 100,000 Nvidia H100s?

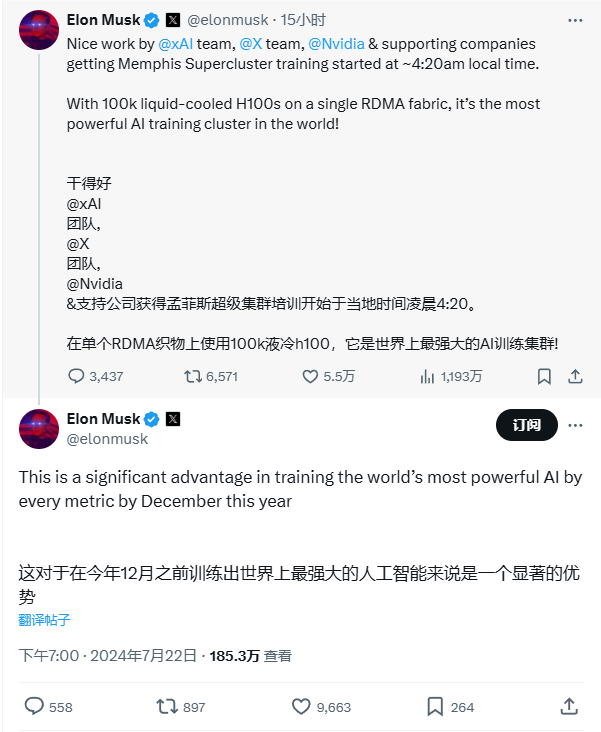

On July 23, Musk announced on the social platform X that the Memphis Supercluster, the dedicated training cluster of his company xAI, has officially gone into operation.

According to Musk, the cluster is equipped with 100,000 Nvidia H100 GPUs, surpassing any supercomputer on the Top500 list, including Frontier, the most powerful system in the world, which has 37,888 AMD GPUs.

The project dates back to March of this year. At that time, Musk wanted a supercomputer cluster to train Grok3, with the goal of making it the world's most powerful artificial intelligence by the end of the year. Memphis was chosen because, as the second most populous city in Tennessee, it can supply abundant electrical power (to run the cluster) and water (to cool it).

The CEO of Memphis Light, Gas and Water estimated that the supercomputer cluster may draw up to 150 megawatts of power, equivalent to the electricity needed by 100,000 households. The cluster is also expected to require at least one million gallons of cooling water per day.
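The figures quoted above can be sanity-checked with a little arithmetic. The following is a minimal sketch in Python that uses only the numbers from the article and treats the 150 megawatts as a peak power draw. The function names are illustrative, and the assumption of a constant full-load draw over 24 hours is ours, so the daily energy figure is an upper bound rather than a measured value.

```python
# Figures quoted in the article.
PEAK_POWER_MW = 150        # estimated peak draw of the cluster, in megawatts
HOUSEHOLDS = 100_000       # number of households cited as equivalent


def average_household_kw(peak_mw: float, households: int) -> float:
    """Average power per household (kW) implied by the comparison."""
    return peak_mw * 1000 / households


def daily_energy_mwh(power_mw: float, hours: float = 24.0) -> float:
    """Energy used over `hours` at a constant draw (MWh).

    Assumption: the cluster runs at the given power for the whole period.
    """
    return power_mw * hours


if __name__ == "__main__":
    print(average_household_kw(PEAK_POWER_MW, HOUSEHOLDS))  # kW per household
    print(daily_energy_mwh(PEAK_POWER_MW))                  # MWh per day at full load
```

Under these assumptions, the comparison implies an average of 1.5 kilowatts per household, and a full day at peak draw would come to 3,600 megawatt-hours.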

Initially, Memphis was concerned that diverting resources on this scale would cause shortages for local residents. In exchange, Musk has verbally promised to improve the city's public infrastructure in support of the data center, including building a new substation and a wastewater treatment facility. Musk has also posted six job openings at the Memphis supercomputing site, including fiber optic foreman, network engineer, and project manager.

Once the agreement was settled, Musk quickly began construction of the cluster in May of this year. To that end, he spent heavily on a large number of Nvidia "Hopper" H100 GPUs and ordered a large amount of hardware from the technology company Supermicro. It is something of a pity: had he not been racing against time, Musk could have waited for Nvidia's upcoming H200, or even the Blackwell-based B100 and B200 GPUs. With those newer chips, the computing power of the Memphis supercomputer cluster would have been far greater still.

Musk's original plan called for the supercomputing factory to be completed before the fall of 2025, so the project has come in nearly a year ahead of schedule. Musk stated that the new Supercluster will train what he calls the world's most powerful artificial intelligence by every measure, and that the improved large language model (Grok) should complete its training phase "before December of this year."

Grok is a generative artificial intelligence product from Musk's xAI. Its distinguishing feature is that it can draw on the X platform for real-time knowledge of the world. It can also answer pointed questions that most other artificial intelligence systems refuse. For now, Grok remains an early test product; once it is ready, it will be offered to Premium Plus users of the X platform in the United States.


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.