OpenAI's spring launch event is here! New GPT-4o model responds to audio about as fast as a human

OpenAI's new GPT-4o model can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is close to human response time in conversation!

On Monday, OpenAI's spring launch event kicked off as scheduled, and as expected, the company brought some blockbuster new products.

GPT-4o makes a stunning debut

The centerpiece of the event was OpenAI's new flagship model, GPT-4o.

According to OpenAI, the "o" in GPT-4o stands for "omni", and the company describes the model as "a step towards more natural human-computer interaction." GPT-4o accepts any combination of text, audio, and image as input and can generate any combination of text, audio, and image as output.

Most striking of all, GPT-4o can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is close to human response time in conversation.

Users could already talk to ChatGPT in Voice Mode, but with GPT-3.5 and GPT-4 this worked as a pipeline: the audio was first transcribed into text, GPT-3.5 or GPT-4 produced a text reply, and that reply was then converted back into audio. This approach not only had long average latencies (2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4) but also lost information along the way. For example, the earlier models could not directly observe tone, multiple speakers, or background noise, and they could not output laughter or singing, or express emotion.

GPT-4o addresses these problems: it is trained end to end across text, vision, and audio, so all inputs and outputs are processed by the same neural network.
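To make the contrast concrete, here is a toy Python sketch of the three-stage voice pipeline described above. Every function is a hypothetical stand-in, not a real OpenAI API, and the per-stage latencies are invented so that they add up to the reported 2.8-second GPT-3.5 average; OpenAI did not publish a per-stage breakdown. The sketch illustrates two points: non-verbal cues are thrown away at the transcription step, and the delays of sequential stages add up.

```python
from dataclasses import dataclass, field


@dataclass
class Audio:
    """Toy audio clip: the spoken words plus non-verbal cues that words alone don't capture."""
    words: str
    cues: dict = field(default_factory=dict)  # e.g. {"tone": "excited", "background": "traffic"}


def speech_to_text(audio: Audio) -> str:
    # Stage 1 (hypothetical transcriber): keeps only the words, so every cue is dropped here.
    return audio.words


def text_llm(prompt: str) -> str:
    # Stage 2 (hypothetical stand-in for GPT-3.5/GPT-4): plain text in, plain text out.
    return f"Answer to: {prompt}"


def text_to_speech(text: str) -> Audio:
    # Stage 3 (hypothetical synthesizer): starts from plain text, so it has no cues to express.
    return Audio(words=text)


# Invented per-stage latencies in seconds, chosen only so they sum to the reported
# 2.8 s GPT-3.5 average; OpenAI did not publish a per-stage breakdown.
STAGE_LATENCY_S = {"speech_to_text": 0.5, "text_llm": 2.0, "text_to_speech": 0.3}


def cascaded_voice_mode(audio: Audio) -> tuple[Audio, float]:
    """Run the three stages in sequence and return the reply plus the total delay."""
    reply = text_to_speech(text_llm(speech_to_text(audio)))
    # The stages run one after another, so their latencies add up.
    total_latency_s = sum(STAGE_LATENCY_S.values())
    return reply, total_latency_s


if __name__ == "__main__":
    question = Audio("what's the weather?", cues={"tone": "excited", "background": "traffic"})
    reply, latency_s = cascaded_voice_mode(question)
    print(reply.words)
    print(reply.cues)  # {}: the question's tone and background noise never reach the reply
    print(latency_s)
```

A single end-to-end model like GPT-4o replaces all three stages with one network, so there is no intermediate text-only step at which tone, speaker identity, or background sound is discarded.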

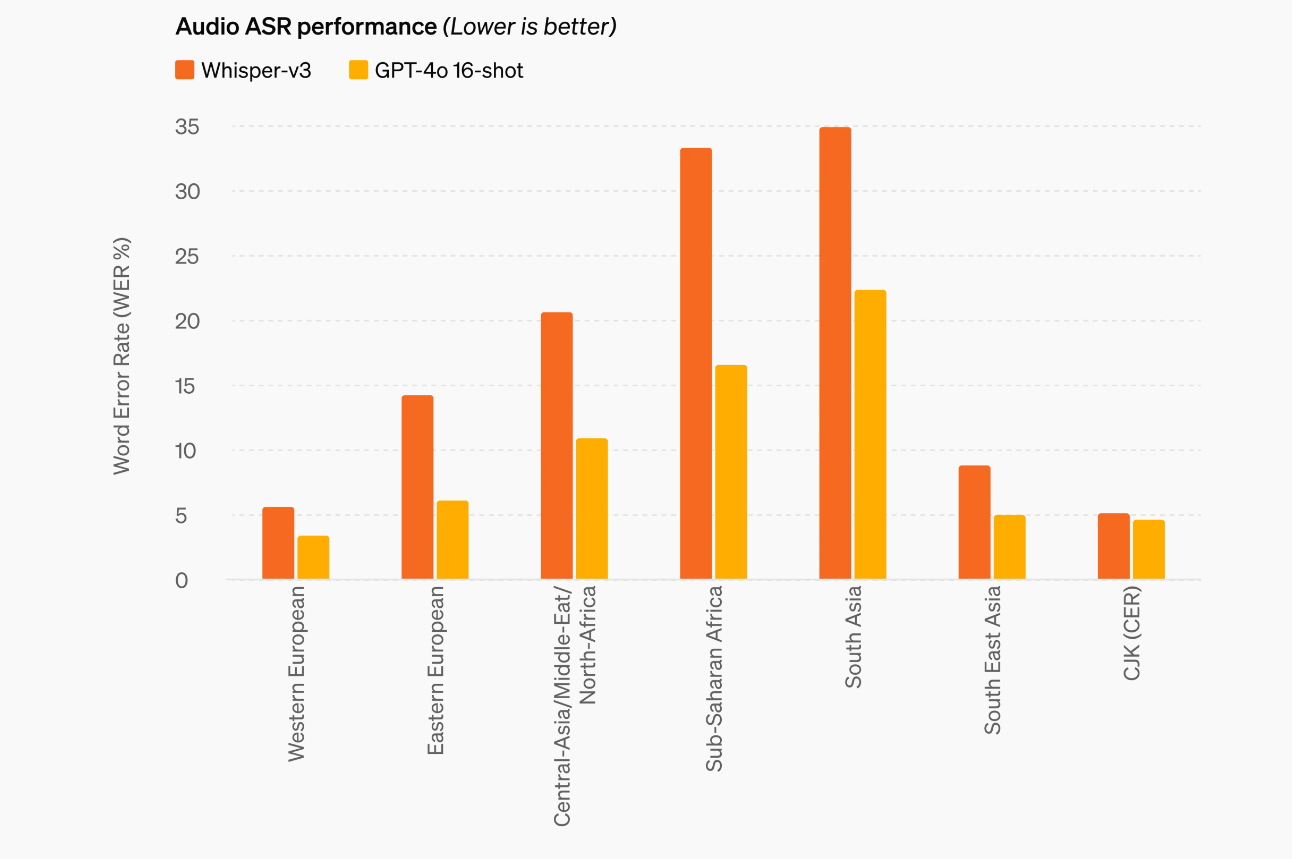

Benchmark results also show how strong GPT-4o's audio processing is.

As measured on traditional benchmarks, GPT-4o significantly improves audio ASR (automatic speech recognition) performance over Whisper-v3 across all languages, especially lower-resource languages. Whisper-v3 is a speech recognition model OpenAI released last year.

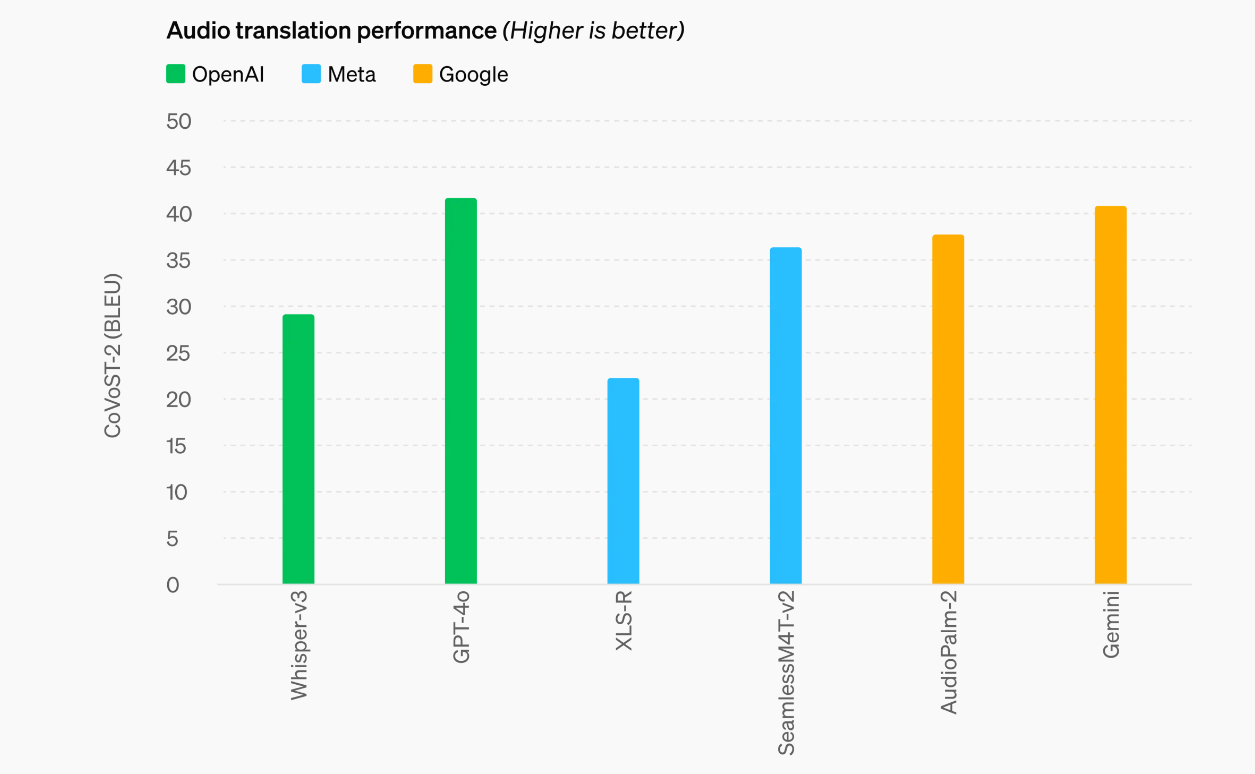
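Speech recognition comparisons like this one are conventionally scored by word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the model's transcript into the reference transcript, divided by the number of words in the reference, so lower is better. The Python sketch below is a generic, textbook WER computation for illustration; it is not OpenAI's evaluation code, and real benchmarks usually normalize text (casing, punctuation) before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of words in the reference."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Word-level Levenshtein distance, keeping one row of the DP table at a time.
    # prev[j] holds the distance between the first i-1 reference words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from the hypothesis
                curr[j - 1] + 1,     # insertion: extra word in the hypothesis
                prev[j - 1] + cost,  # substitution (or a match when cost is 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))  # one deletion: 1/6
```

Dropping one word from a six-word reference gives a WER of 1/6, about 0.167; a model that transcribed every word correctly would score 0.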

On audio translation, GPT-4o sets a new state of the art for speech translation and outperforms Whisper-v3 on the MLS benchmark.

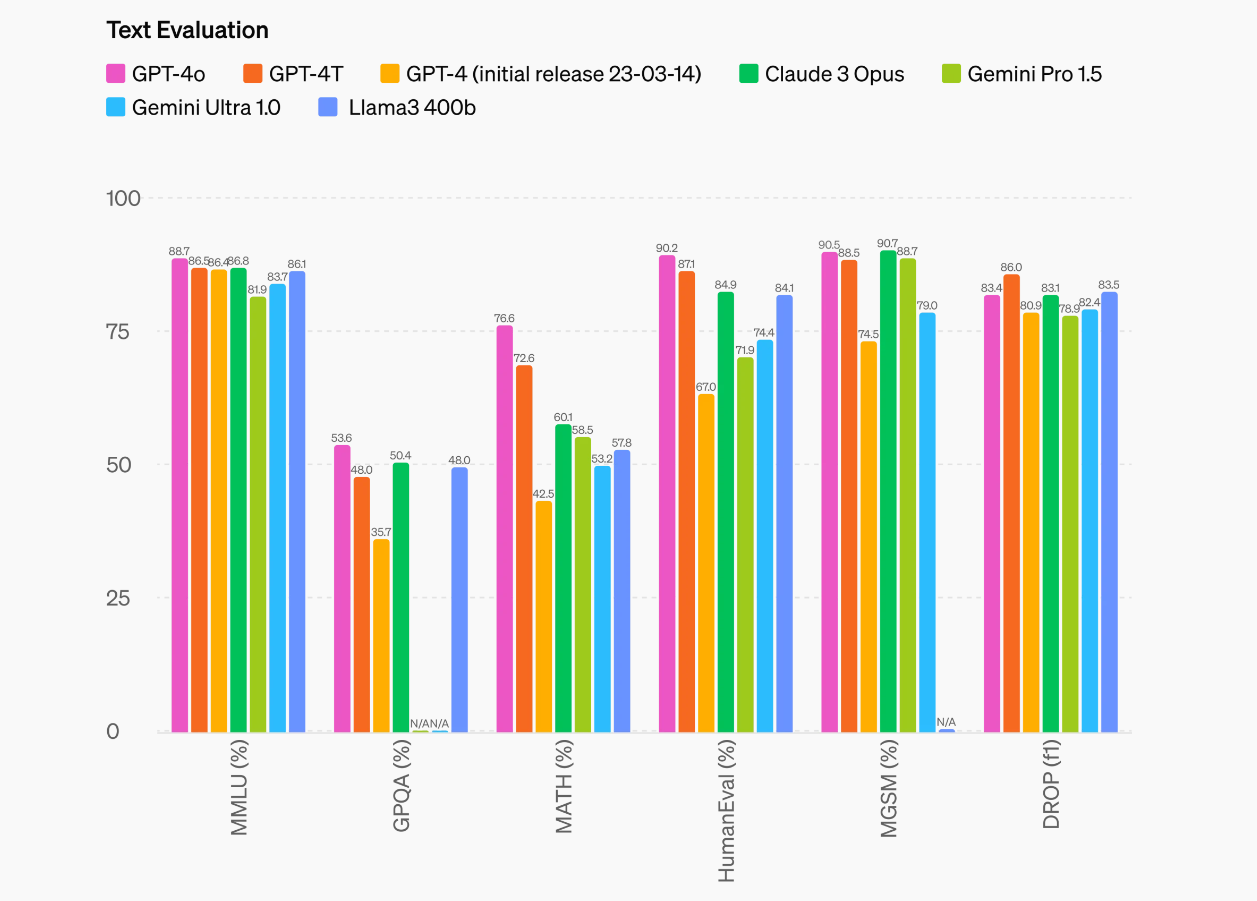

Beyond audio, GPT-4o matches GPT-4 Turbo's performance on English text and code, with a significant improvement on text in non-English languages.

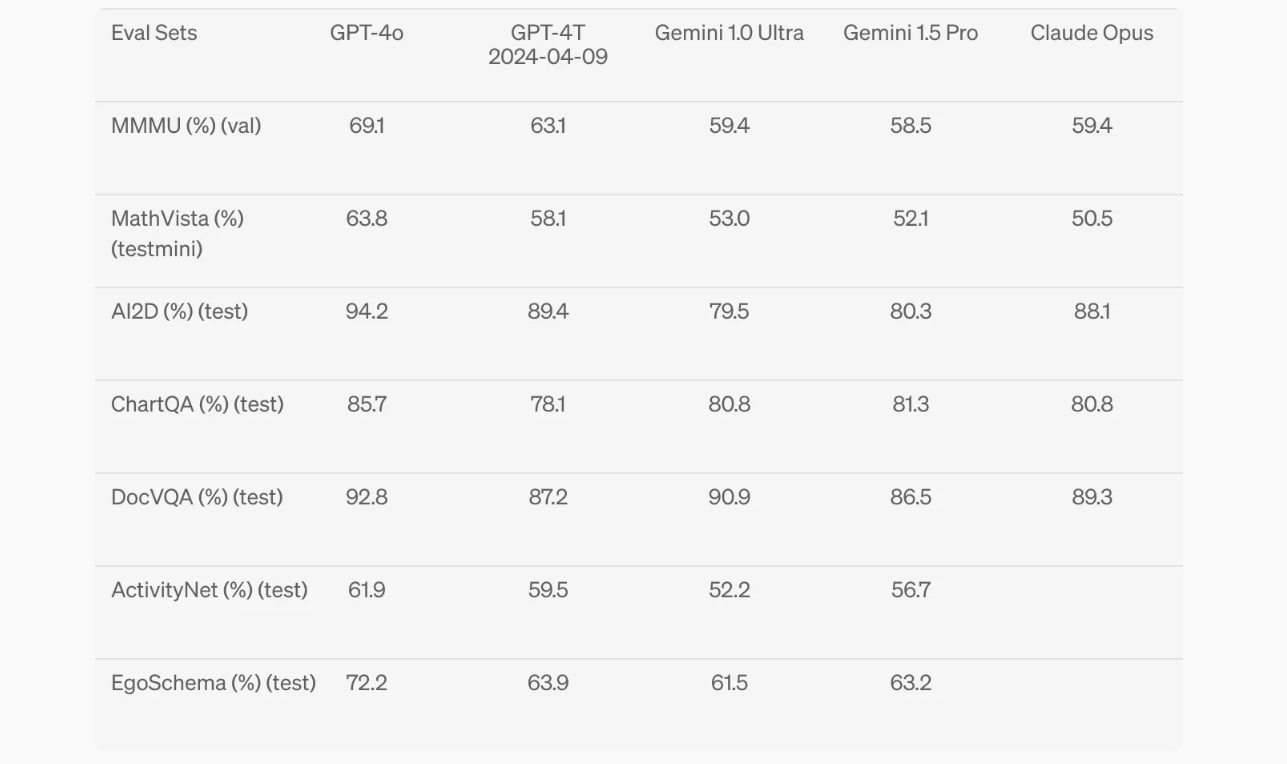

In text evaluations, GPT-4o beats a field of popular models, including Claude 3 Opus, Gemini Pro 1.5, and Llama 3 400B, on almost every measure.

GPT-4o also achieves leading results on visual understanding evaluations.

In its announcement of GPT-4o, OpenAI also emphasized model safety. The company said GPT-4o has safety built in by design across modalities, through techniques such as filtering training data and refining the model's behavior after training. OpenAI has also created new safety systems to provide guardrails on voice output.

In the coming weeks, OpenAI will work on the technical infrastructure, usability through post-training, and safety needed to release the other modalities.

After introducing GPT-4o, OpenAI dropped another major announcement: GPT-4o's text and image capabilities began rolling out in ChatGPT on the day of the event, including to free users! This means both free and paid users can try it out. With this move, OpenAI arguably lives up to the "Open" in its name.

However, OpenAI said free users will have usage limits; once the limit is reached, ChatGPT automatically switches to GPT-3.5. Plus users get a message limit 5 times higher than free users, and Team and Enterprise users get even higher limits.

Developers can now access GPT-4o in the API as a text and vision model. Compared with GPT-4 Turbo, GPT-4o is 2 times faster, half the price, and has 5 times higher rate limits. OpenAI said it plans to launch support for GPT-4o's new audio and video capabilities to a small group of trusted partners in the API in the coming weeks.
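As a rough illustration of what text and vision access in the API looks like, here is a minimal Python sketch, assuming the official `openai` Python package (v1 or later) and its Chat Completions endpoint with the `gpt-4o` model name. The helper `build_vision_request` is hypothetical, and so are the question and the `https://example.com/photo.jpg` URL. It builds one user message that combines a text question with an image. The network call is left commented out so the script runs without an API key. Audio and video input are not shown, since the article says those go to trusted partners first.

```python
import json


def build_vision_request(question: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Hypothetical helper: a Chat Completions request body mixing text and an image in one message."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder inputs for illustration only.
    body = build_vision_request("What is in this photo?", "https://example.com/photo.jpg")
    print(json.dumps(body, indent=2))

    # Sending the request needs the `openai` package (v1+) and an OPENAI_API_KEY
    # environment variable. Uncomment to try:
    # from openai import OpenAI
    # client = OpenAI()
    # response = client.chat.completions.create(**body)
    # print(response.choices[0].message.content)
```

Keeping the request body in a plain dictionary makes it easy to inspect or log before sending. Switching an existing GPT-4 Turbo integration over is then mostly a matter of changing the `model` value.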

More tools for free ChatGPT users

At the event, OpenAI also announced that it is bringing more intelligence and advanced tools to ChatGPT's free users.

With GPT-4o, ChatGPT free users now have access to the following features:

- Experience GPT-4-level intelligence
- Get responses from both the model and the web
- Analyze data and create charts
- Chat about photos you take
- Upload files for help summarizing, writing, or analyzing
- Discover and use GPTs and the GPT Store
- Build a more helpful experience with Memory

For both free and paid users, OpenAI is also launching a new ChatGPT desktop app for macOS, designed to integrate seamlessly into whatever users are doing on their computer. A simple keyboard shortcut (Option + Space) lets users instantly ask ChatGPT a question, and users can also take screenshots and discuss them directly in the app.

Users can also have voice conversations with ChatGPT directly from their computer; clicking the headphone icon in the bottom-right corner of the desktop app starts one.

The macOS app is rolling out to Plus users first and will become more broadly available in the coming weeks. A Windows version is not expected until later this year.


Disclaimer: The views in this article are those of the original creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication, and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.