No mercy for rivals! Nvidia launches "the world's most powerful GPU" - the H200

On Monday (November 13), Nvidia launched its next-generation artificial intelligence chip, the H200, describing it as "the most powerful GPU in the world." The H200 offers more memory and higher speed.

According to the official website, the H200 is based on the NVIDIA Hopper architecture and is the first GPU equipped with HBM3e. With HBM3e, the H200 provides 141GB of memory at 4.8TB per second. Compared with the A100, capacity is almost doubled and bandwidth is 2.4 times higher. Compared with the H100, the H200 has nearly twice the capacity and 1.4 times the memory bandwidth. The H200 is expected to start shipping in the second quarter of 2024.
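The generation-over-generation ratios quoted above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the H200 figures come from this article, while the H100 and A100 figures (80GB at 3.35TB/s and 80GB at 2.039TB/s) are assumptions taken from the public SXM datasheets, not from the article itself.

```python
# Memory capacity (GB) and bandwidth (TB/s) per GPU.
# H200 values are from the article; H100 and A100 values are assumptions
# based on public SXM datasheet figures.
SPECS = {
    "H200": {"capacity_gb": 141, "bandwidth_tbs": 4.8},
    "H100": {"capacity_gb": 80, "bandwidth_tbs": 3.35},   # assumption
    "A100": {"capacity_gb": 80, "bandwidth_tbs": 2.039},  # assumption
}


def ratios(new: str, old: str) -> tuple[float, float]:
    """Return (capacity ratio, bandwidth ratio) of `new` relative to `old`."""
    n, o = SPECS[new], SPECS[old]
    return (
        n["capacity_gb"] / o["capacity_gb"],
        n["bandwidth_tbs"] / o["bandwidth_tbs"],
    )


for old in ("H100", "A100"):
    cap, bw = ratios("H200", old)
    print(f"H200 vs {old}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
```

Under these assumed baselines, the bandwidth ratios round to the 1.4 times and 2.4 times figures cited by Nvidia, and the capacity ratio comes out a little under two.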

Nvidia said the H200's larger and faster memory accelerates generative artificial intelligence and large language models (LLMs), while advancing scientific computing for high-performance computing (HPC) workloads with better energy efficiency and lower total cost of ownership (TCO).

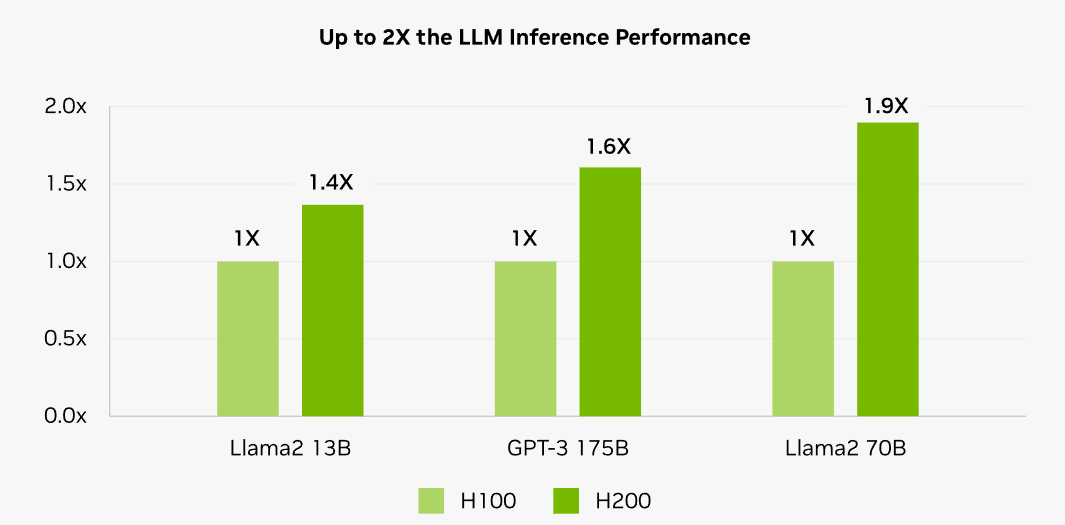

Compared with the H100, the H200 nearly doubles inference speed on Meta's Llama 2 (70 billion parameters) and runs OpenAI's GPT-3 1.6 times faster. Nvidia expects the H200's performance lead to grow further with future software updates.

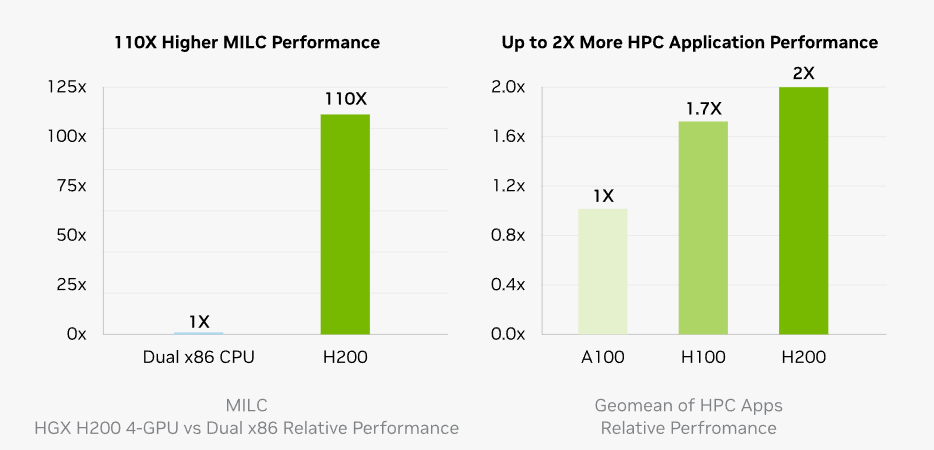

Memory bandwidth is critical for HPC applications because it enables faster data transfer and reduces bottlenecks in complex processing. For memory-intensive HPC applications such as simulation, scientific research and artificial intelligence, the H200's higher memory bandwidth ensures efficient access to and manipulation of data, delivering results up to 110 times faster than CPUs.

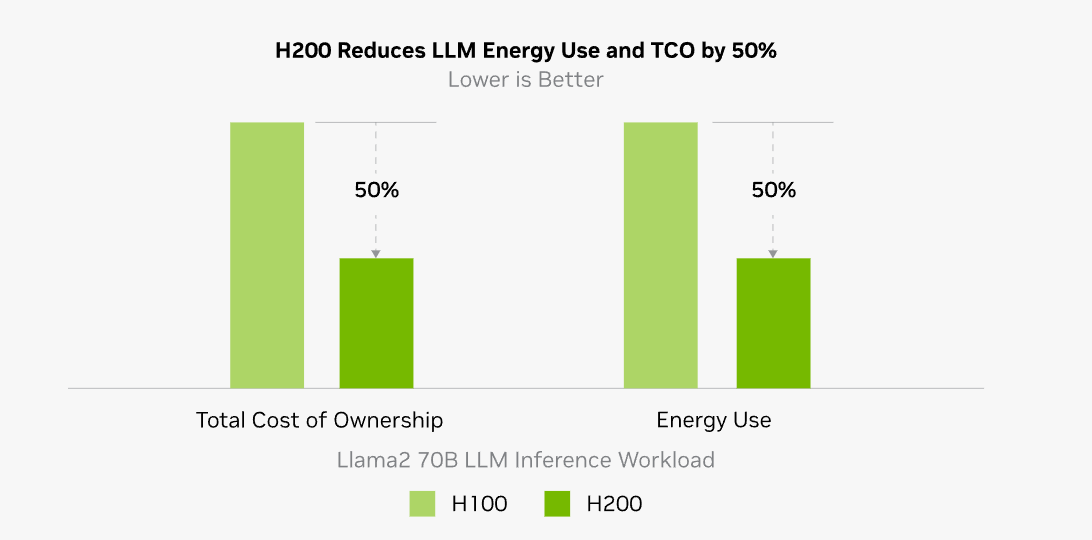
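To make the bandwidth argument concrete, here is a back-of-the-envelope sketch for a purely memory-bound workload, where the time to read a working set once from GPU memory can be no shorter than its size divided by the memory bandwidth. The 100GB working set is a hypothetical value chosen for illustration, and the H100 bandwidth of 3.35TB/s is an assumption from its public datasheet. Real workloads also depend on compute, caching and interconnects, so this is a lower bound, not a benchmark.

```python
def min_pass_time_ms(working_set_gb: float, bandwidth_tbs: float) -> float:
    """Lower bound on the time (ms) to stream a working set once from GPU memory."""
    seconds = (working_set_gb * 1e9) / (bandwidth_tbs * 1e12)
    return seconds * 1e3


WORKING_SET_GB = 100.0  # hypothetical working set, not from the article

# H100 bandwidth is an assumed datasheet value; H200 bandwidth is from the article.
for name, bandwidth_tbs in (("H100", 3.35), ("H200", 4.8)):
    t = min_pass_time_ms(WORKING_SET_GB, bandwidth_tbs)
    print(f"{name}: at least {t:.1f} ms per pass over {WORKING_SET_GB:.0f} GB")
```

In this simplified model, the time per pass shrinks in direct proportion to the bandwidth gain, which is why memory-bound applications benefit from HBM3e even when the compute units are unchanged.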

In addition, compared with the H100, the H200 can cut both total cost of ownership and energy consumption for LLM workloads by 50%.

Nvidia also said the H200 will be available in Nvidia HGX H200 server boards, in configurations compatible with both the hardware and software of HGX H100 systems. This means Nvidia partners such as Asus, Dell, HP, Lenovo and Wistron can update their existing systems with the H200.

Ian Buck, Nvidia's vice president of hyperscale and HPC, said: "To train generative artificial intelligence and HPC applications, you must use high-speed, large-memory GPUs to process large amounts of data. With the H200, the industry-leading AI supercomputing platform can solve some of the world's most important challenges faster."

In addition, Nvidia revealed that starting next year, Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to deploy the H200.

Nvidia's move puts even more pressure on its competitors. AMD's latest chip in development, the MI300, is due to launch officially at the end of the year. AMD previously said the MI300 has more memory than its predecessor, allowing large models to fit on the hardware for inference.

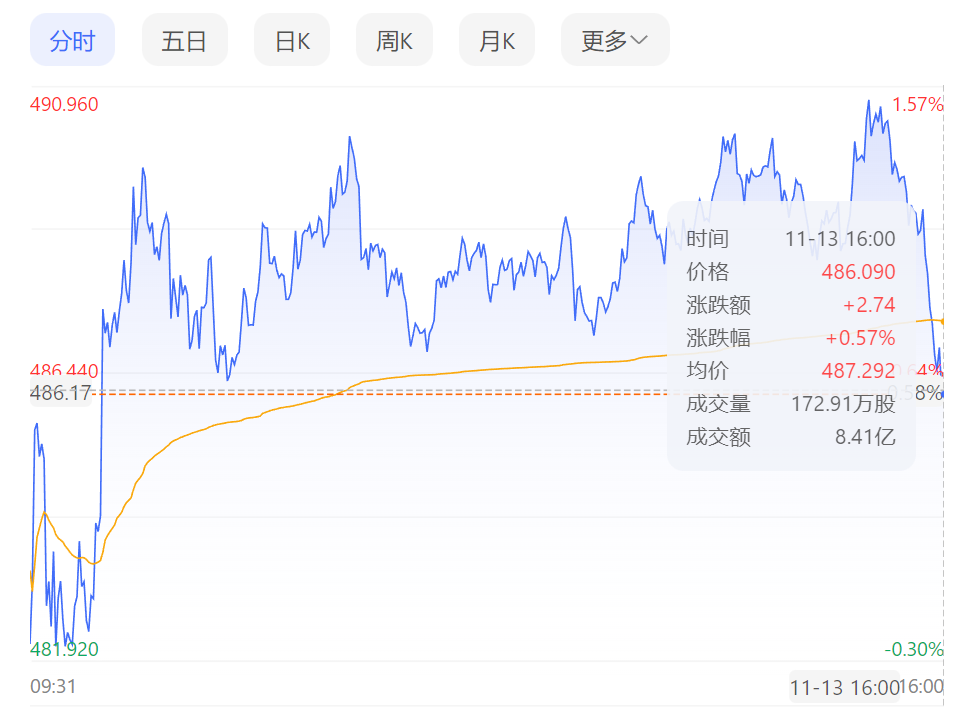

Boosted by the launch of the new-generation chip, Nvidia shares rose as high as $490.96 in U.S. trading on Monday, close to the all-time high of $493.55 set on August 31 this year.

In addition, Nvidia will report its third-quarter earnings on November 21. The market expects its third-quarter revenue to reach about $16 billion, up 170% year-over-year.

Buoyed by multiple positive catalysts, the stock has risen for nine consecutive trading days, a winning streak that has lifted the company's shares nearly 20%. Since the beginning of the year, Nvidia's shares have risen more than 230%, making it one of the best-performing stocks in the S&P 500.

Asked whether the H100 will be phased out as a result, Nvidia spokesperson Kristin Uchiyama said that the launch of the H200 will not affect production of the H100. "You will see our overall supply increase throughout the year and we will continue to supply for the long term."

In August, media reported that Nvidia plans to at least triple H100 production in 2024, raising output from about 500,000 units in 2023 to as many as 2 million next year. Since OpenAI's breakout late last year, enthusiasm for artificial intelligence has only grown and market demand for chips has been very strong, so the goal should not be difficult for Nvidia to achieve.

Beyond Nvidia, the AI boom has emboldened many chipmakers, which hope to capture more market share in this new "blue ocean."

In a note to clients, Chris Caso of Wolfe Research wrote that Nvidia has not typically refreshed its data center processors, and that the release of the H200 is further evidence that Nvidia has accelerated its product cadence in response to the growth and performance requirements of the artificial intelligence market, which will further strengthen its competitive "moat."

In October, Nvidia told investors that the pace of the company's releases would accelerate due to strong demand for its GPUs. The company may release a B100 chip based on the upcoming Blackwell architecture next year.

Nvidia did not disclose the cost or selling price of the H200. Caso believes the company may raise the price given the new version's improved performance.

According to Raymond James estimates, an H100 chip costs between $25,000 and $40,000, and training large models usually takes thousands of chips to achieve good results.
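For a sense of scale, the per-chip estimate above can be multiplied out for a training cluster. This is a rough sketch: the price range is the Raymond James estimate cited in this article, while the cluster sizes are hypothetical examples, and the totals cover GPUs only (no servers, networking, power or cooling).

```python
# Per-chip price range from the Raymond James estimate cited in the article.
PRICE_LOW_USD = 25_000
PRICE_HIGH_USD = 40_000


def cluster_cost_range(num_gpus: int) -> tuple[int, int]:
    """Return the (low, high) GPU-only cost in USD for a cluster of `num_gpus` chips."""
    return num_gpus * PRICE_LOW_USD, num_gpus * PRICE_HIGH_USD


# Hypothetical cluster sizes, chosen only to illustrate "thousands of chips".
for n in (1_000, 5_000):
    low, high = cluster_cost_range(n)
    print(f"{n:,} GPUs: ${low / 1e6:,.0f}M to ${high / 1e6:,.0f}M")
```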

Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.