NVIDIA announces AI Foundry service to build custom super models for customers

On July 24th, chip giant NVIDIA announced the launch of the new NVIDIA AI Foundry service and NVIDIA NIM inference microservices. The key feature of the two services is that enterprises around the world can use them together with Meta's newly released Llama 3.1 models to build "super models" tailored to their own needs.

For instance, medical companies need AI models that understand medical terminology and practices, while financial companies need models with expertise in finance. Such companies can use NVIDIA AI Foundry to build generative AI applications "with domain-specific knowledge and localized characteristics."

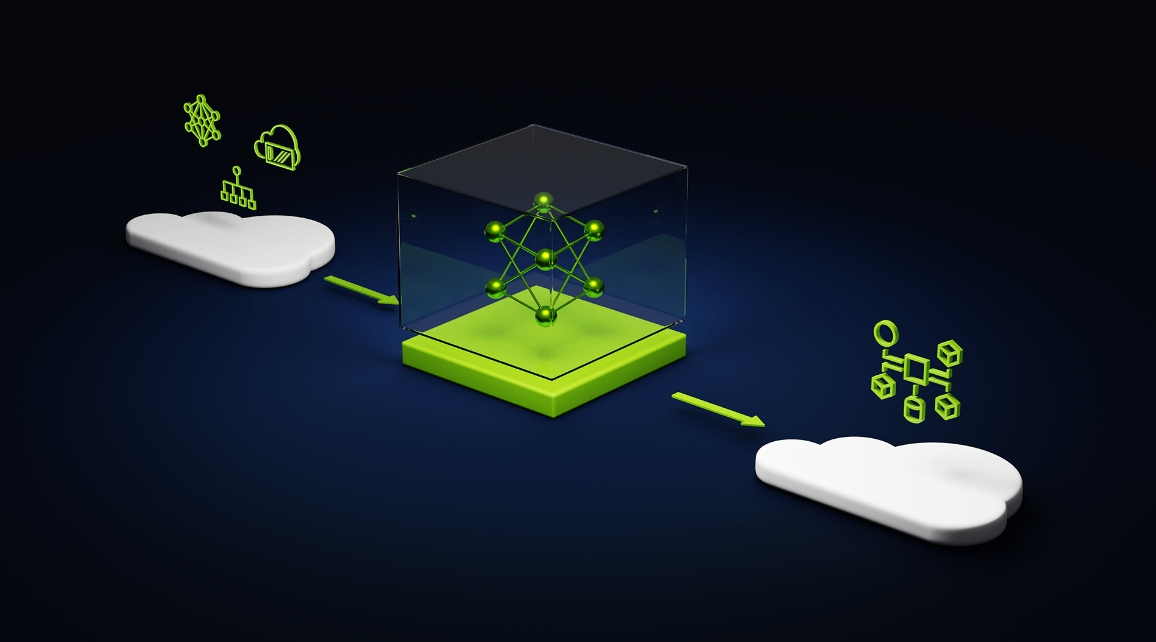

Specifically, the NVIDIA AI Foundry service is powered by the NVIDIA DGX™ Cloud AI platform, which is co-engineered with the world's leading public cloud companies. Its purpose is to give enterprises substantial computing power that scales easily as their AI needs change. In short, the DGX™ Cloud AI platform was built for model customization.

Reportedly, a major challenge of current public cloud infrastructure is that both its software and its hardware were built for web-era applications and services, so they cannot adequately meet the needs of AI workloads (locality across nodes, data, and users). The thinking behind AI Foundry is that the ecosystem's bottleneck is not a shortage of supply but underutilization.

Based on this, the goal of DGX™ Cloud AI is to build a new type of public cloud that makes infrastructure far more convenient: through a coordinated platform, users can access AI computing resources as easily as "turning on a light."

To support "customization," NVIDIA has built its own AI models, such as Nemotron and Edify, into NVIDIA AI Foundry. Together with NVIDIA NeMo™ (software for building custom models) and NVIDIA DGX™ Cloud (which provides cloud capacity), these models form the foundation of AI Foundry and supply the underlying computing architecture for model building.

NVIDIA NIM, for its part, is positioned as an accelerated inference microservice that provides the runtime environment for models built with AI Foundry. After deploying NIM, companies can run their customized models on NVIDIA GPUs anywhere: in the cloud, in data centers, on workstations, and on PCs.

Currently, the NVIDIA NIM service is fully adapted to Meta's newly released Llama 3.1 models. Published figures show that Llama 3.1 running on NIM delivers up to 2.5 times the inference throughput of running without NIM. Experts say that, for the best results, companies can combine the Llama 3.1 NIM microservices with the NVIDIA NeMo Retriever NIM microservices to build state-of-the-art retrieval pipelines.
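For readers wondering what "running a customized model with NIM" looks like from an application's side, here is a minimal sketch. It assumes a Llama 3.1 NIM is already running locally and exposes an OpenAI-compatible chat-completions endpoint. The URL `http://localhost:8000/v1/chat/completions`, the model name `meta/llama-3.1-8b-instruct`, and the helper functions `build_chat_request` and `ask` are illustrative assumptions, not details from this article; check them against NVIDIA's NIM documentation for your own deployment.

```python
import json
import urllib.request

# Assumed endpoint of a locally running Llama 3.1 NIM (adjust for your deployment).
NIM_URL = "http://localhost:8000/v1/chat/completions"
# Assumed model identifier; replace with the model your NIM actually serves.
MODEL = "meta/llama-3.1-8b-instruct"


def build_chat_request(prompt: str, max_tokens: int = 256) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) an OpenAI-style chat request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Hypothetical helper: send the request and return the reply text.

    This only works if a NIM is actually listening at NIM_URL.
    """
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Inspect the request offline; call ask(...) only when a NIM is running.
    req = build_chat_request("Summarize the key terms in this loan agreement.")
    print(req.method, req.full_url)
```

Building the request separately from sending it lets the payload be inspected without a live server. Swapping in a domain-customized model would, under these assumptions, only change the `MODEL` string and possibly the URL.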

Public information shows that Llama 3.1 is a family of generative AI models from Meta. The models are open source, and enterprises and developers can use them to build advanced generative AI applications. Llama 3.1 comes in 8 billion, 70 billion, and 405 billion parameter sizes, was trained on more than 16,000 NVIDIA H100 Tensor Core GPUs, and is optimized for data centers, the cloud, and local devices.

Some large companies have already started using NVIDIA AI Foundry to build their customized models, and Accenture, the global professional services company, is one of them. The company said that generative AI is transforming industries and that enterprises are eager to deploy applications driven by custom models. With NVIDIA AI Foundry, it said, it can help clients quickly create and deploy customized Llama 3.1 models to power transformative AI applications.

NVIDIA founder and CEO Jensen Huang said that Meta's openly available Llama 3.1 models mark a pivotal moment for the adoption of generative AI by enterprises worldwide. Llama 3.1 opens the door for every enterprise and industry to build state-of-the-art generative AI applications, and NVIDIA AI Foundry has fully integrated Llama 3.1 and is ready to help enterprises build and deploy custom Llama super models.

Mark Zuckerberg, founder and CEO of Meta, the company behind Llama 3.1, said: "The new Llama 3.1 models are an extremely important step for open-source AI. With NVIDIA AI Foundry, companies can easily create and customize the state-of-the-art AI services people want and deploy them with NVIDIA NIM. I'm excited to get this into everyone's hands."

Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.