How To Regulate AI? OpenAI Supports Watermarking, Musk Advocates For Enhanced Safety Testing

According to media reports, OpenAI, Adobe and Microsoft, among others, have expressed support for California's AB 3211, which would require “watermarking” of AI-generated content.

As artificial intelligence develops rapidly, the debate over how to regulate it is heating up. California, home to many AI companies, has seen a wave of state-level proposals to regulate AI. However, companies disagree on how strict that regulation should be.

OpenAI, Microsoft and others support “watermarking” of AI content

According to media reports, OpenAI, Adobe and Microsoft, among others, have voiced their support for California's AB 3211, the California Digital Content Provenance Standards.

AB 3211 would require tech companies to add watermarks to the metadata of AI-generated photos, videos and audio clips. Several AI companies already do this, but most people never look at metadata. AB 3211 would therefore also require large online platforms (such as Instagram or X) to label AI-generated content in a way the average viewer can understand.

Starting in 2026, the bill would also require large online platforms to publish an annual transparency report on their review of deceptive synthetic content on their platforms.

OpenAI, Adobe and Microsoft are all members of the Coalition for Content Provenance and Authenticity (C2PA), which developed the C2PA metadata standard that is widely used to tag AI-generated content.

In April, a trade group representing Adobe, Microsoft and the largest U.S. software makers opposed AB 3211, calling the bill "unworkable" and "unduly burdensome" in a letter to California lawmakers. However, amendments to the bill appear to have changed the group's position.

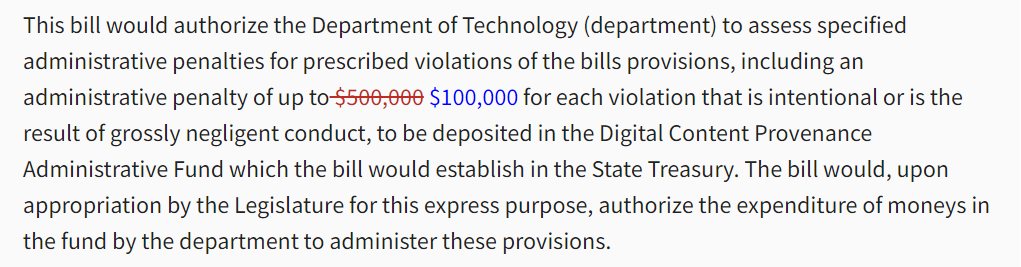

One of the amendments to AB 3211 lowers the administrative penalty. The original bill allowed the department to impose an administrative penalty of up to $500,000 for each willful or grossly negligent violation. The amended version caps that penalty at $100,000.

Musk backs tougher safety tests for AI

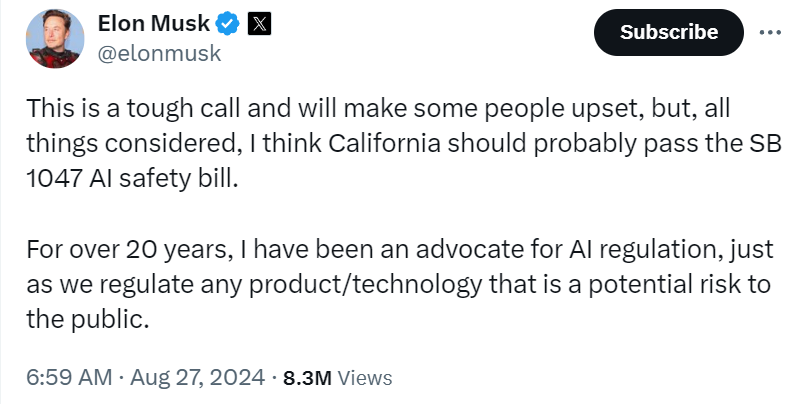

Meanwhile, Musk, founder of the AI company xAI, posted on X in support of another California AI bill: SB 1047, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act.

"This is a tough call and will make some people upset, but, all things considered, I think California should probably pass the SB 1047 AI safety bill," Musk wrote. "For over 20 years, I have been an advocate for AI regulation, just as we regulate any product/technology that is a potential risk to the public."

SB 1047 was introduced in February by California State Senator Scott Wiener and would impose strict safety protocols on AI companies. Wiener has said the bill's goal is to "establish clear, predictable, common-sense safety standards for developers of the most powerful and large-scale artificial intelligence systems," ensuring that such systems are developed safely.

SB 1047 would apply to companies developing models that cost more than $100 million to train or that use high levels of computing power. These companies would have to safety-test new technologies before releasing them to the public, build "complete shutdown" capabilities into their models, and take responsibility for all applications of the technology.

The bill also sets legal standards for AI developers and would allow the California Attorney General to take legal action against companies for "unlawful conduct." In addition, it creates protections for whistleblowers and establishes a commission to set computational thresholds for AI models and issue regulatory guidance.

SB 1047 is one of the most polarizing AI policy proposals in the United States. Tech giants such as Meta and OpenAI have criticized it heavily. They argue that the bill is vague and burdensome and would have a chilling effect on open-source models.

Meta argued that the bill would prevent the release of open-source AI because "it is impossible to open source models without incurring legal liability that cannot be afforded." Meta also said the bill would likely hurt the small-business ecosystem. In its view, small businesses would become less likely to use open-source models to create new jobs, businesses, tools, and services.

According to the California Legislative Database, California lawmakers proposed 65 bills dealing with AI this legislative session. These included measures to ensure that all algorithmic decisions are unbiased and measures to protect the intellectual property of deceased individuals from exploitation by AI companies. Many of these bills, however, have since died.

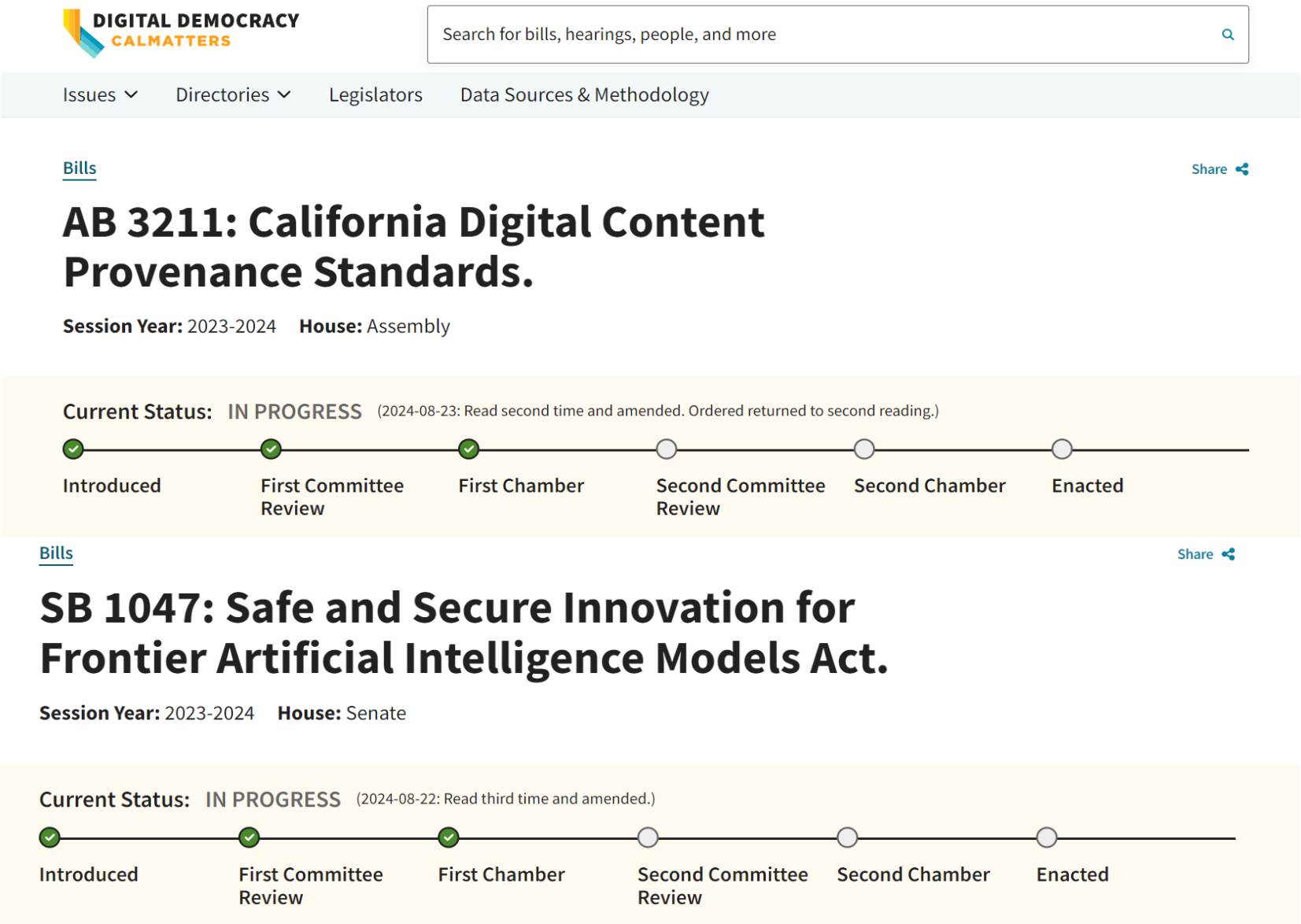

AB 3211 and SB 1047 have drawn more attention because tech giants have taken public positions on them. Both bills are still in progress, and final votes on both are expected in the California State Assembly at the end of this month.


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.