Up to 30 billion parameters! Apple's latest multimodal large model MM1 revealed

Last week, Apple's research team published a paper on multimodal large models on arXiv.org, marking an important breakthrough in Apple's AI research.

Apple, which had been largely "silent" on AI, has suddenly revealed a major advance in artificial intelligence research.

The multimodal large model MM1

The paper, posted to arXiv.org, is titled "MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training".

In the paper's abstract, Apple's research team states: "We demonstrate that for large-scale multimodal pre-training, using a careful mix of image-caption, interleaved image-text, and text-only data is crucial for achieving state-of-the-art few-shot results across multiple benchmarks."
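To make "a careful mix" of data concrete, here is a minimal Python sketch of weighted sampling across the three data types the abstract names. The weights are assumed values chosen for illustration (the article does not state MM1's ratios), and `MIXTURE_WEIGHTS`, `sample_source`, and `sample_batch_sources` are hypothetical names, not part of any Apple code.

```python
import random
from collections import Counter

# Hypothetical mixture weights for the three data types named in the abstract.
# These are illustrative assumptions, not ratios taken from the article.
MIXTURE_WEIGHTS = {
    "image_caption": 0.45,
    "interleaved_image_text": 0.45,
    "text_only": 0.10,
}


def sample_source(rng: random.Random, weights: dict[str, float]) -> str:
    """Pick which data source the next training example is drawn from."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def sample_batch_sources(n: int, seed: int = 0) -> list[str]:
    """Return the source label for each of n examples, reproducibly via the seed."""
    rng = random.Random(seed)
    return [sample_source(rng, MIXTURE_WEIGHTS) for _ in range(n)]


if __name__ == "__main__":
    counts = Counter(sample_batch_sources(10_000))
    # Empirical proportions should land close to the configured weights.
    for name, count in sorted(counts.items()):
        print(f"{name}: {count / 10_000:.3f}")
```

Changing the weights is the knob a "careful mix" refers to: shifting probability mass between captions, interleaved documents, and plain text changes what the model sees during pre-training.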

The abstract also notes: "Thanks to large-scale pre-training, MM1 enjoys appealing properties such as enhanced in-context learning and multi-image reasoning, enabling few-shot chain-of-thought prompting." In other words, the model can perform multi-step reasoning over multiple input images when given only a few chain-of-thought examples, which suggests that large multimodal models have the potential to tackle complex, open-ended problems that require grounded language understanding and generation.

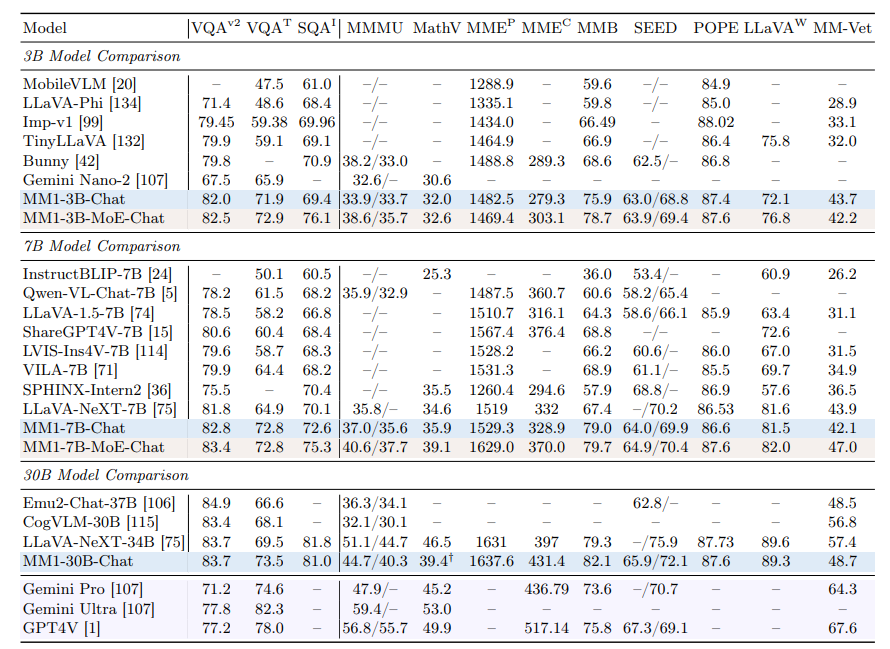

According to the paper, MM1 comes in three sizes: 3 billion, 7 billion, and 30 billion parameters. The researchers used these models to run experiments identifying the key factors that affect performance.

Interestingly, image resolution and the number of image tokens matter more than the design of the vision-language connector, and the choice of pre-training data can significantly affect the model's effectiveness. "We show that the image encoder, together with image resolution and the image token count, has a substantial impact, while the vision-language connector design is of comparatively little importance."
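Resolution and token count are closely linked. In a standard Vision Transformer, a square image with side length H split into p×p patches produces (H/p)² patch tokens, so raising the resolution quickly raises the token count. The sketch below assumes a patch size of 14 and ignores any class token or any connector that later pools tokens down; it illustrates generic ViT arithmetic, not MM1's exact configuration, and `vit_patch_tokens` is a hypothetical helper.

```python
def vit_patch_tokens(image_size: int, patch_size: int = 14) -> int:
    """Number of patch tokens a ViT produces for a square image.

    Assumes image_size is a multiple of patch_size and ignores any
    class token or token pooling applied afterwards.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be a multiple of patch_size")
    per_side = image_size // patch_size
    return per_side * per_side


if __name__ == "__main__":
    for size in (224, 336, 378):
        print(size, vit_patch_tokens(size))
```

With a patch size of 14, going from 224 to 336 pixels per side increases the patch count from 256 to 576, more than doubling the visual tokens the language model must process.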

Alongside dense models, the research team built MM1 variants using a "Mixture of Experts" (MoE) architecture with "Top-2 Gating." This approach not only produces excellent results on pre-training benchmarks but also translates into strong performance on existing multimodal benchmarks, and MM1 remains competitive after fine-tuning for specific tasks.
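In a mixture-of-experts layer with top-2 gating, a learned router scores every expert for each token, only the two highest-scoring experts are run, and their outputs are combined using weights renormalized over those two. The pure-Python sketch below illustrates that general technique on toy vectors; the router vectors, expert functions, and names such as `top2_gate` and `moe_layer` are hypothetical, and this is not MM1's implementation, which the article does not describe. Production MoE layers also add load-balancing losses and expert capacity limits, which are omitted here.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def softmax(xs: Sequence[float]) -> list[float]:
    """Numerically stable softmax (subtracts the max before exponentiating)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top2_gate(logits: Sequence[float]) -> list[tuple[int, float]]:
    """Return (expert_index, weight) for the two highest-scoring experts.

    Weights are a softmax over just the two selected logits, which equals
    renormalizing a full softmax over the chosen pair.
    """
    if len(logits) < 2:
        raise ValueError("top-2 gating needs at least two experts")
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:2]
    weights = softmax([logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(
    token: Vector,
    router_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
) -> Vector:
    """Route one token to its top-2 experts and mix their outputs."""
    # Router logit per expert: dot product of the token with that expert's routing vector.
    logits = [sum(t * w for t, w in zip(token, rw)) for rw in router_weights]
    out = [0.0] * len(token)
    for idx, weight in top2_gate(logits):
        expert_out = experts[idx](token)  # only the selected experts are evaluated
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out


if __name__ == "__main__":
    # Toy setup: expert k simply scales its input by (k + 1).
    experts = [lambda v, k=k: [(k + 1) * x for x in v] for k in range(4)]
    router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    print(moe_layer([2.0, 1.0], router, experts))
```

For the token `[2.0, 1.0]`, the router logits are `[2, 1, -2, -1]`, so only experts 0 and 1 run; the other two are skipped entirely, which is why MoE models can grow total parameter count without a proportional increase in per-token compute.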

"By scaling up the presented recipe, we build MM1, a family of multimodal models with up to 30 billion parameters, including both dense models and mixture-of-experts (MoE) variants, that are state-of-the-art in pre-training metrics and achieve competitive performance after supervised fine-tuning on a range of established multimodal benchmarks."

Tests show that the MM1-3B-Chat and MM1-7B-Chat models outperform most competitors of similar size. They perform especially well on benchmarks such as VQAv2 (visual question answering about images), TextVQA (questions about text that appears in images), and ScienceQA (science question answering).

However, MM1's overall performance does not yet surpass top models such as Google's Gemini or OpenAI's GPT-4. While MM1 may not be the outright leader, it is still a major leap forward for Apple in AI.

As Apple's researchers put it, MLLMs (multimodal large language models) are emerging as "the next frontier in foundation models" after traditional LLMs (large language models), and they "achieve superior capabilities."

Apple "struggling to catch up"

The MM1 study comes as Apple ramps up its investment in artificial intelligence to catch up with tech players such as Google, Microsoft, and Amazon. These companies have already taken the lead in integrating generative AI capabilities into their products, while Apple has been slower to deliver competitive results.

Sources say Apple is working on a large language model framework called "Ajax," as well as a chatbot known internally as "Apple GPT." The goal is to integrate these technologies into Siri, Messages, Apple Music, and other apps and services. For example, AI could automatically generate personalized playlists, help developers write code, or hold open-ended conversations and complete tasks.

"We see AI and machine learning as foundational technologies that are an integral part of almost every product we launch," Apple CEO Tim Cook told analysts on a recent earnings call. "I'm not going to go into detail about what it is... but certainly we're going to invest, we're going to put a fair amount of money into it, we're going to do it responsibly, and over time you're going to see advances in the product, and those technologies are at the heart of the product."

He added: "We are excited to share details of our ongoing AI work later this year." As a result, many have speculated that Apple is likely to unveil new AI features and developer tools at its Worldwide Developers Conference in June.

At the same time, smaller AI advances from Apple's research labs, such as the Keyframer animation tool and various performance improvements, also suggest that Apple has been making quiet progress.


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.