Baidu's Hou Zhenyu: Large Models Are Reconstructing Cloud Computing as a Key Year for the AI Ecosystem Approaches

Large AI models are driving innovation and change in the cloud computing industry, and the infrastructure that supports them urgently needs to be rebuilt to support the development of AI-native applications.

As the year draws to a close, large artificial intelligence (AI) models remain a hot topic.

On December 20, the 2023 Baidu Cloud Intelligence Conference - Smart Computing Conference was held in Beijing under the theme "Reconstructing Cloud Computing - Cloud for AI," focusing in depth on the changes that large models are bringing to cloud computing. At the conference, Hou Zhenyu, Vice President of Baidu Group, delivered a speech titled "Large Models Reconstructing Cloud Computing."

Because large models are driving innovation and change across the cloud computing industry, the infrastructure built for them needs to be comprehensively rebuilt to support the development of AI-native applications.

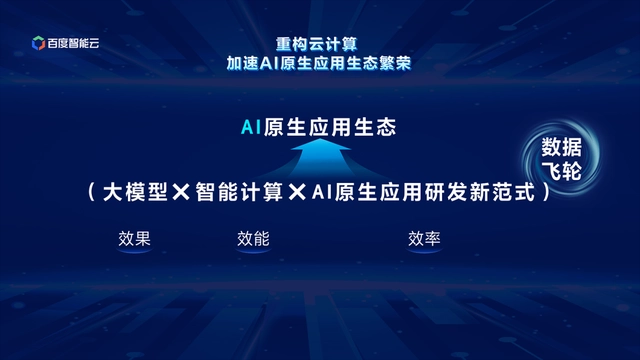

In general, building a thriving AI-native application ecosystem requires three complementary elements: large models, intelligent computing power, and a new paradigm for AI-native application development. The large model is the "brain" of AI-native applications, intelligent computing provides solid support for running them, and the new R&D paradigm helps developers build applications efficiently on top of the large model's capabilities.

In addition, the data flywheel is a necessary and sufficient condition for successful AI-native applications: it lets large model capabilities iterate at high speed and the product experience keep improving.

In the AI era, the three parallel development lines of application technology, AI technology, and IT infrastructure are finally converging. Hou Zhenyu said: "Large models are reconstructing cloud computing at three levels: the AI-native cloud will change the landscape of cloud computing, model as a service (MaaS) will become a new basic service, and AI-native applications will give rise to a new R&D paradigm."

First, the computing layer: a significant jump in computing efficiency.

The transformation of cloud computing in the AI era centers on how to use models more efficiently as a core capability. At the underlying cloud infrastructure layer, Internet and mobile Internet applications were built on central processing unit (CPU) computing chips. AI applications have sharply increased demand for graphics processing unit (GPU) and heterogeneous computing, and the underlying computing power of the cloud market has begun to migrate to GPUs.

According to the data, Nvidia's revenue surpassed Intel's in the third quarter of 2023, Nvidia's latest market capitalization exceeds Intel's by $1 trillion, and future GPU growth is expected to far outpace CPU growth.

Against this trend, Baidu released the Baige Heterogeneous Computing Platform 3.0, an intelligent computing network platform, and the Baidu cloud-native database GaiaDB 4.0.

Among them, Baige Heterogeneous Computing Platform 3.0 raises the effective training time of a cluster to 98% and the effective utilization of network bandwidth to 95%, supporting ultra-large-scale AI cluster computing at the level of ten thousand cards. The intelligent computing network platform supports flexible allocation of computing resources across Baidu's own and third-party intelligent computing nodes. GaiaDB 4.0 mainly implements cross-machine querying of data and, for different workloads, introduces a column-store index (for efficient retrieval of small-scale data) and a column-store engine (for complex analysis of up to PB-level data), which improves query speed across data of different scales.
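To make the column-store idea concrete, here is a minimal Python sketch of why a columnar layout helps analytical queries. It is an illustration only and not GaiaDB's implementation: the table, the column names, and the helper functions `to_columns` and `total_by_region` are all hypothetical.

```python
# Hypothetical order table in a row-oriented layout: one dict per record.
ROWS = [
    {"order_id": 1, "region": "north", "amount": 120.0},
    {"order_id": 2, "region": "south", "amount": 50.0},
    {"order_id": 3, "region": "north", "amount": 80.0},
]


def to_columns(rows):
    """Pivot row dicts into a column-oriented layout: one list per column."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def total_by_region(columns):
    """Aggregate amount per region, reading only the two columns involved."""
    totals = {}
    for region, amount in zip(columns["region"], columns["amount"]):
        totals[region] = totals.get(region, 0.0) + amount
    return totals


if __name__ == "__main__":
    print(total_by_region(to_columns(ROWS)))
```

The aggregation never touches `order_id`, which is the property that makes columnar layouts attractive for analysis over wide tables, while a row layout remains convenient for fetching a whole record at once.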

Second, the model layer: the rise of general-purpose MaaS.

MaaS (Model as a Service) will significantly lower the threshold for deploying AI and make AI truly inclusive, and the new IT infrastructure it relies on will further disrupt the existing cloud computing market landscape from the bottom up.

To meet the needs of deploying large models, Baidu is reconstructing its cloud computing services based on its "cloud intelligence integration" strategy. It has completed an end-to-end upgrade and reconstruction that spans the underlying infrastructure, large model development and application, and AI-native application development.

Baidu has also upgraded the Qianfan large model platform: the number of preset foundation and industry models has increased to 54; functions such as statistical analysis of data and data quality inspection have been released; a dual model evaluation mechanism combining automated and manual evaluation has been introduced; and flexible pricing options such as batch computing have been added.

Since Wenxin Yiyan (ERNIE Bot) was fully opened to the public on August 31, daily calls to the Qianfan API (application programming interface) have grown tenfold, with customers coming mainly from the Internet, education, e-commerce, marketing, mobile phone, automobile, and other industries.

Third, the application layer: the development paradigm is completely overturned.

Hou Zhenyu said that the unique abilities of large models in understanding, generation, logic, and memory will give rise to a new paradigm of AI-native application development, changing the entire application technology stack, data flow, and business flow. Enterprises can use scenario data to fine-tune their own large models directly on top of foundation models, and then use those model capabilities to design AI-native applications without training from scratch. As their business expands, they gradually accumulate more competitive scenario data, feed it back into the model, and improve application results.

Baidu Intelligent Cloud has long taken "cloud intelligence integration" as its core strategy, comprehensively reconstructing its cloud computing products and technology system, and then accelerating the prosperity of the AI-native application ecosystem by feeding the capabilities of the whole of Baidu Intelligent Cloud back into it. Hou Zhenyu said that only when more AI-native applications are deployed in customers' real industry scenarios can a genuine application ecosystem be built and the value of large models be fully realized.

Baidu has now opened the AI-native application development workbench "Qianfan AppBuilder" to all users.

AppBuilder's services consist mainly of a component layer and a framework layer. At the component layer, AppBuilder provides multi-modal AI capability components (e.g., text recognition and text-to-image generation), capability components based on large language models, and infrastructure components (e.g., vector databases and object storage). At the framework layer, AppBuilder provides AI-native application frameworks such as retrieval-augmented generation (RAG), Agent, and intelligent data analysis (GBI).
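For readers unfamiliar with RAG, the pattern can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not AppBuilder's API: the function names (`retrieve`, `build_prompt`) are hypothetical, a bag-of-words cosine similarity stands in for the vector database, and the call to a large language model is left out entirely.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=2):
    """Return up to top_k documents that share vocabulary with the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Assemble the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = [
        "GaiaDB is a cloud native database.",
        "AppBuilder provides RAG and agent frameworks.",
        "The conference was held in Beijing.",
    ]
    question = "Which frameworks does AppBuilder provide?"
    # The resulting prompt would be sent to a large language model.
    print(build_prompt(question, retrieve(question, docs, top_k=1)))
```

In a production system the word-count vectors would typically be replaced by learned embeddings stored in a vector database, which is the role the infrastructure components described above would play.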

For developers with different technical backgrounds, AppBuilder offers two product forms: low-code and code-based. The low-code form suits developers without a technical background and those with general development needs; users can customize and launch AI applications with a few clicks. For users with deeper development needs, AppBuilder also provides a range of code-based development kits and application components, including SDKs, development environments, debugging tools, and sample code.

Conclusion

For Hou Zhenyu, 2024 is a key year for the development of the AI ecosystem. He describes 2023 as the first year of model training, with AI-enabled applications to be rolled out gradually next year. He expects an explosion of AI-native applications next year, so optimizing the computing and inference layer and the model training toolchain will be the focus of the year.

Robin Li, Baidu's founder, chairman and CEO, believes that a thriving AI-native application ecosystem can drive economic growth. In October this year, Baidu Intelligent Cloud launched the first full-link ecosystem support system for large models in China, providing partners with all-round support that includes enablement training, AI-native application incubation, sales opportunities, and marketing, and it is committed to the prosperity of the AI-native application ecosystem.

In the future, Baidu Intelligent Cloud will continue to launch competitive products and solutions and work with partners to go deeper into customer application scenarios, so that more AI-native application innovations emerge.


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.