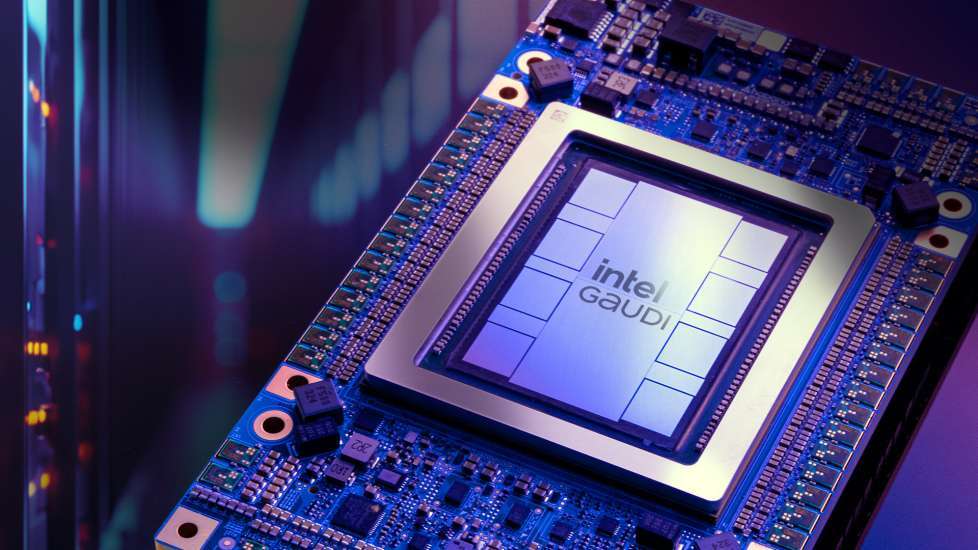

Gaudi 3: Intel's new weapon against Nvidia?

On 9 April, Intel officially unveiled its third-generation AI accelerator, Gaudi 3, claiming on average 50% better inference performance and 40% better energy efficiency than Nvidia's H100.

Intel unveiled Gaudi 3 at its Intel Vision 2024 conference. The chip is expected to ship to server makers such as Supermicro and Hewlett Packard Enterprise in Q2 2024, with general availability in Q3.

Intel presents Gaudi 3 as a way to help generative AI (AIGC) move from experimentation to real-world deployment. It emphasizes the chip's performance, practicality, energy efficiency, and fast deployment, and says it meets the complexity, cost-effectiveness, and compliance demands of AI applications.

Intel CEO Pat Gelsinger acknowledged that earlier Gaudi generations fell short of Intel's market-share expectations, but said the new version could have a greater impact. Gaudi 3 is positioned directly against Nvidia's H100 and H200, although Intel did not disclose specific pricing.

Is Gaudi 3 the outperformer?

Gaudi 3 is broadly similar to Gaudi 2 but brings improvements in architecture, platform, and other areas, delivering two to four times the AI compute performance of its predecessor.

Specifically, Gaudi 3 is built on TSMC's 5nm process and carries 128GB of HBM2e memory. Compared with the previous generation, it offers four times the BF16 AI compute, 1.5 times the memory bandwidth, and twice the network bandwidth.

In terms of performance, the chip targets two key areas: training AI models and running trained models (inference).

Gaudi 3 has so far been tested on Llama and can be used to help train or deploy large AI models, including Stable Diffusion and OpenAI's Whisper speech recognition model.

On compute performance, Intel says Gaudi 3 delivers on average 50% faster inference than Nvidia's H100 on Llama 2 models, with on average 40% better energy efficiency, and runs AI models about 1.5 times as fast, which Intel positions as coming at a fraction of the H100's cost. Against Nvidia's newer H200, Gaudi 3 is claimed to achieve roughly 30% faster inference.

Over the past year, Nvidia has held roughly 80% of the AI chip market, with its GPUs the high-end chips of choice for AI companies. Last month, at its GTC conference, Nvidia introduced the H100's successors, the B200 and GB200.

For now, Nvidia remains the leader in the AI chip market. However, as cloud providers and enterprises build out infrastructure for deploying AI software, more competitors are entering the market, and more companies are looking for lower-cost suppliers, so Nvidia's position is not unassailable.

With Gaudi 3 entering the race, the market now pits Nvidia's B200 against AMD's MI300 and Intel's Gaudi 3.

Das Kamhout, Vice President and Senior Chief Engineer of Intel's Cloud and Enterprise Solutions Division, said on a conference call: "We expect Gaudi 3 to be highly competitive with Nvidia's latest chips. With its competitive pricing, unique open integrated on-chip networking, and industry-standard Ethernet, it is undoubtedly a powerful product."

Collaboration strengthens the AI ecosystem

Nvidia's success is undeniably tied to its CUDA ecosystem, which gives AI developers access to all of the GPU's hardware features. In response, Intel has pursued a strategy of open, scalable AI systems designed to meet enterprises' specific generative AI (AIGC) requirements.

Intel has shared its Gaudi accelerator solutions with enterprise customers and partners across many industries to pave the way for new generative AI applications. Companies including NAVER, Bosch, IBM, Seekr, Landing AI, Roboflow, and Infosys have partnered with Intel on AI applications for mutual benefit.

Intel is also working with Anyscale, Articul8, DataStax, Domino, Hugging Face, KX Systems, MariaDB, MinIO, Qdrant, Red Hat, Redis, SAP, VMware, Yellowbrick, and Zilliz to develop open, non-proprietary software that makes AI applications as easy as possible to deploy using retrieval-augmented generation (RAG). With RAG, enterprises can combine open LLMs with the large volumes of proprietary data they already hold on standard cloud infrastructure.
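RAG works by first retrieving the passages from an organization's own documents that are most relevant to a question, then placing them in the prompt sent to the language model. The sketch below is a toy illustration of that retrieval step only, not Intel's or any partner's software: it assumes simple word-count cosine similarity in place of a real embedding model and vector database, omits the LLM call entirely, and uses hypothetical names (`DOCUMENTS`, `retrieve`, `build_prompt`).

```python
import math
import re
from collections import Counter

# Hypothetical in-house documents standing in for an enterprise's proprietary data.
DOCUMENTS = [
    "Q3 maintenance schedule for the Berlin plant and its assembly lines.",
    "Refund policy: customers may return hardware within 30 days.",
    "Onboarding guide for new data engineers on the analytics team.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts (a toy stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Prepend retrieved context to the question; in a real system this goes to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    print(build_prompt("What is the refund policy for hardware?", DOCUMENTS))
```

For the sample question, the refund-policy document shares the most words with the query and is the one placed in the prompt. Production RAG systems replace the word counts with learned embeddings and an indexed vector store, but the retrieve-then-prompt flow is the same.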

Sachin Katti, Senior Vice President of Intel's Network and Edge Group, said: "We are working with the software ecosystem to build open-source software and modules, so users can integrate the solutions they need without having to buy new ones."


Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.