"Text-to-3D" Gets a Newcomer: Meta's 3DGen Leads the Way

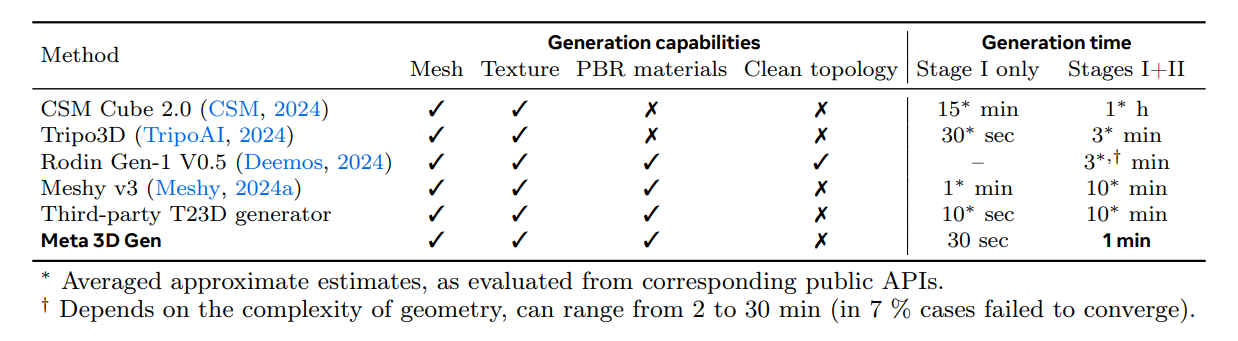

Meta's latest AI model, 3DGen, can generate high-quality 3D content from a text prompt in under 1 minute.

On July 2, Meta released a research paper unveiling its latest AI model, Meta 3D Gen (3DGen).

According to Meta, 3DGen can generate 3D content with high-resolution textures and material maps in less than 1 minute, 3 to 10 times faster than existing systems. It can also quickly iterate on existing 3D assets, regenerating their textures from new text prompts within 20 seconds.

PBR Makes the Difference

According to the paper, 3DGen has three major technical highlights: fast generation, high fidelity, and support for physically based rendering (PBR), with PBR being the key focus.

PBR can simulate the physical behavior of light on the surface of objects. It considers the effects of lighting, material properties, and environmental factors on the appearance of objects. Based on different surface characteristics (roughness, metallic properties, etc.), it calculates the reflection, scattering, and absorption of light rays, thus achieving more realistic and accurate rendering effects.
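To make the description above concrete, here is a minimal sketch of one widely used PBR shading model: a Cook-Torrance microfacet BRDF in the metallic-roughness workflow common in game engines. The article does not say which shading model 3DGen's assets target, so the specific choices here (GGX distribution, Schlick's Fresnel approximation, Schlick-GGX geometry term) and the function name `shade` are assumptions for illustration only.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def shade(n, v, l, base_color, metallic, roughness, light_color=(1.0, 1.0, 1.0)):
    """Reflected RGB radiance for one directional light, using a Cook-Torrance
    BRDF (GGX distribution, Schlick Fresnel, Smith/Schlick-GGX geometry).
    Illustrative sketch only; not taken from the 3DGen paper."""
    n, v, l = normalize(n), normalize(v), normalize(l)
    n_dot_l = dot(n, l)
    if n_dot_l <= 0.0:
        return (0.0, 0.0, 0.0)  # light arrives from behind the surface
    h = normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_dot_v = max(dot(n, v), 1e-4)
    n_dot_h = max(dot(n, h), 0.0)
    v_dot_h = max(dot(v, h), 0.0)

    # Roughness widens or narrows the specular highlight (GGX distribution).
    alpha = max(roughness * roughness, 1e-3)
    a2 = alpha * alpha
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    d = a2 / (math.pi * denom * denom)

    # Microfacet self-shadowing and masking (Schlick-GGX, k = (r + 1)^2 / 8).
    k = (roughness + 1.0) ** 2 / 8.0
    g = (n_dot_v / (n_dot_v * (1.0 - k) + k)) * (n_dot_l / (n_dot_l * (1.0 - k) + k))

    out = []
    for c, lc in zip(base_color, light_color):
        # Dielectrics reflect about 4% at normal incidence; metals use their base color.
        f0 = 0.04 * (1.0 - metallic) + c * metallic
        f = f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5  # Schlick's Fresnel approximation
        specular = d * g * f / (4.0 * n_dot_v * n_dot_l)
        # Light not reflected specularly scatters diffusely; metals absorb it instead.
        diffuse = (1.0 - f) * (1.0 - metallic) * c / math.pi
        out.append((diffuse + specular) * lc * n_dot_l)
    return tuple(out)

# Same lighting and viewing setup, two different surface materials.
up = (0.0, 0.0, 1.0)
glossy_gold = shade(up, up, (0.3, 0.0, 1.0), (1.0, 0.78, 0.34), metallic=1.0, roughness=0.2)
matte_plastic = shade(up, up, (0.3, 0.0, 1.0), (0.2, 0.4, 0.8), metallic=0.0, roughness=0.8)
print(glossy_gold, matte_plastic)
```

The point of the example is that the same light and geometry produce very different results once roughness and metalness change, which is exactly the per-surface information that PBR material maps supply.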

Previously, AI-generated 3D assets often lacked realistic lighting and material properties, limiting their practicality in applications such as game development, VR/AR, and special effects in movies.

Because 3DGen generates complete 3D meshes with PBR materials, its assets can be relit and rendered realistically in real modeling and rendering applications. 3DGen also separates the underlying mesh from its textures, so users can change the texture style without modifying the mesh itself.
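One way to picture this mesh/texture separation is as two independent pieces of data: geometry (vertex positions, triangles, and per-vertex UV coordinates) and a PBR material made of image maps looked up through those UVs. The sketch below is a hypothetical data layout, not 3DGen's actual output format; the class names, field names, and texture file names are all illustrative assumptions.

```python
from dataclasses import dataclass, replace
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]

@dataclass(frozen=True)
class Mesh:
    """Geometry only: where the surface is and how it maps into texture space."""
    positions: Tuple[Vec3, ...]              # vertex positions
    uvs: Tuple[Vec2, ...]                    # per-vertex (u, v) texture coordinates
    faces: Tuple[Tuple[int, int, int], ...]  # triangles as vertex indices

@dataclass(frozen=True)
class PBRMaterial:
    """Appearance only: image maps sampled through the mesh's UVs."""
    base_color_map: str  # hypothetical texture file names
    roughness_map: str
    metallic_map: str

@dataclass(frozen=True)
class Asset:
    mesh: Mesh
    material: PBRMaterial

def retexture(asset: Asset, new_material: PBRMaterial) -> Asset:
    """Return a new asset with a different material and the very same mesh."""
    return replace(asset, material=new_material)

quad = Mesh(
    positions=((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)),
    uvs=((0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)),
    faces=((0, 1, 2), (0, 2, 3)),
)
wood = PBRMaterial("wood_albedo.png", "wood_rough.png", "wood_metal.png")
rust = PBRMaterial("rust_albedo.png", "rust_rough.png", "rust_metal.png")

crate = Asset(mesh=quad, material=wood)
rusty_crate = retexture(crate, rust)  # new look, identical geometry
```

Keeping the two parts separate is what makes fast retexturing cheap: only the material changes, so the shape never has to be regenerated.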

PBR support is therefore likely to help bridge the longstanding gap between AI-generated content and professional 3D pipelines, allowing AI-created assets to fit into existing workflows.

From Text to 3D?

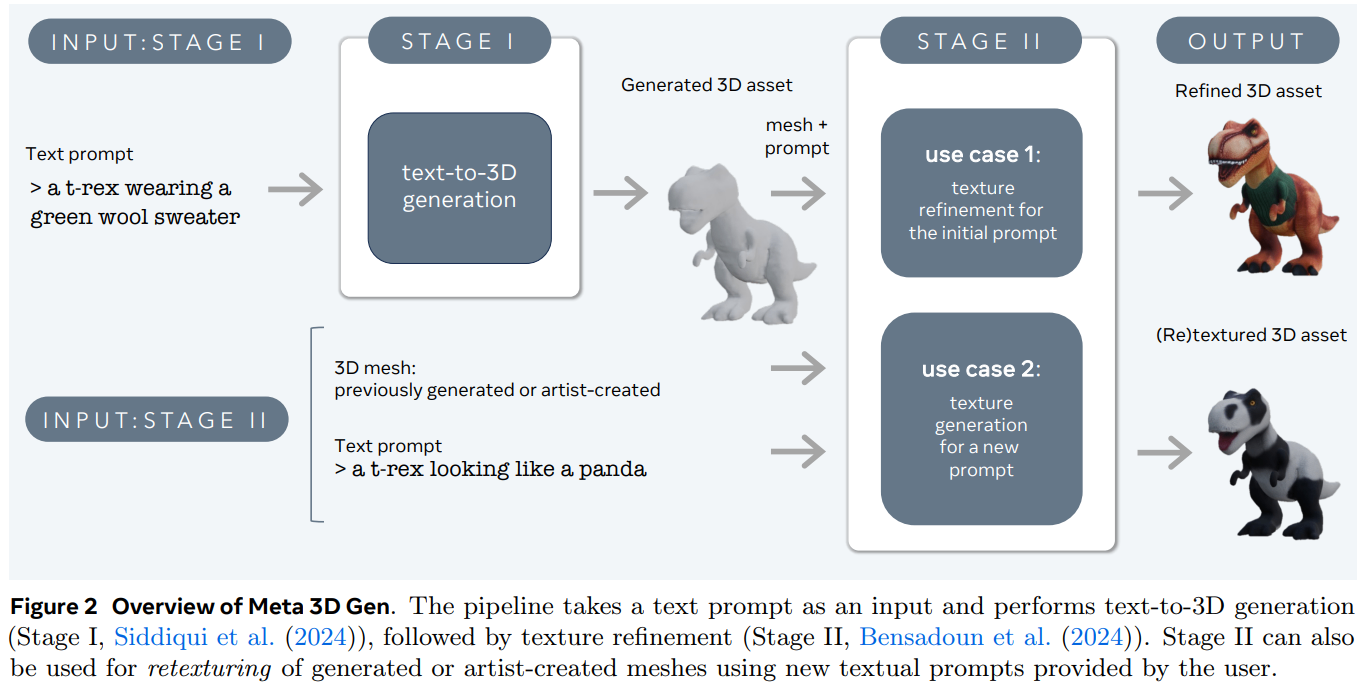

In the paper, Meta describes in detail how 3DGen works.

3DGen consists of two key components: AssetGen, a "text-to-3D" generator, and TextureGen, a "text-to-texture" generator. They work in two stages:

- Stage 1, text to 3D: AssetGen creates the initial 3D asset, a 3D mesh with a rough texture and PBR material maps. This stage takes only 30 seconds.

First, a multi-view, multi-channel text-to-image generator produces several consistent views of the object.

Then, a reconstruction network recovers an initial version of the object in volumetric space and extracts a mesh, establishing its 3D shape and initial texture.

- Stage 2, texture refinement: TextureGen refines the texture for higher quality or regenerates it in a different style. This stage takes only 20 seconds.

TextureGen combines a 2D text-to-image model with 3D semantic conditioning and generates in both view space and UV space. The result is a high-resolution UV texture map for the initial asset that improves texture quality while staying faithful to the prompt.

In summary, AssetGen and TextureGen complement each other: together they operate in view space (images of the object), volumetric space (3D shape and appearance), and UV space (textures), which significantly improves the quality of 3D generation.
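"UV space" refers to the 2D coordinate system of the texture image: each mesh vertex stores a (u, v) coordinate, and a renderer finds the color at any point on a triangle by interpolating those coordinates and reading the texture there. The sketch below shows that generic lookup (barycentric UV interpolation followed by bilinear filtering). It illustrates the concept only and is not TextureGen's internal method; the function names and the convention that v = 0 is the first texture row are assumptions (many tools flip v).

```python
import math
from typing import Sequence, Tuple

Color = Tuple[float, float, float]
Vec2 = Tuple[float, float]

def interpolate_uv(bary: Tuple[float, float, float], uv0: Vec2, uv1: Vec2, uv2: Vec2) -> Vec2:
    """UV coordinate of a point inside a triangle, given its barycentric weights."""
    w0, w1, w2 = bary
    return (w0 * uv0[0] + w1 * uv1[0] + w2 * uv2[0],
            w0 * uv0[1] + w1 * uv1[1] + w2 * uv2[1])

def sample_bilinear(texture: Sequence[Sequence[Color]], u: float, v: float) -> Color:
    """Bilinearly filtered color lookup; texture[row][col], v = 0 is row 0."""
    height, width = len(texture), len(texture[0])
    u = min(max(u, 0.0), 1.0)  # clamp-to-edge addressing
    v = min(max(v, 0.0), 1.0)
    # Continuous texel coordinates, with texel centers at integer + 0.5.
    x = u * width - 0.5
    y = v * height - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def texel(ix: int, iy: int) -> Color:
        ix = min(max(ix, 0), width - 1)
        iy = min(max(iy, 0), height - 1)
        return texture[iy][ix]

    c00, c10 = texel(x0, y0), texel(x0 + 1, y0)
    c01, c11 = texel(x0, y0 + 1), texel(x0 + 1, y0 + 1)
    # Weighted blend of the four nearest texels.
    return tuple(
        (1 - fx) * (1 - fy) * a + fx * (1 - fy) * b + (1 - fx) * fy * c + fx * fy * d
        for a, b, c, d in zip(c00, c10, c01, c11)
    )

checker = [
    [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)],
    [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)],
]
uv = interpolate_uv((1 / 3, 1 / 3, 1 / 3), (0.0, 0.0), (1.0, 0.0), (0.0, 1.0))
print(uv, sample_bilinear(checker, *uv))
```

Because every surface point resolves to a UV coordinate, a higher-resolution texture map can sharpen an asset's appearance without any change to its geometry.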

For evaluation, Meta asked both professional artists and general users to rate the quality of the generated outputs, comparing 3DGen against several publicly accessible 3D generation models.

3DGen ultimately outscored the other models on fidelity for both 3D shape generation and texture generation. Broken down by object type, 3DGen ranked first in fidelity for objects and composite scenes but slightly lower for human figures. Professional artists also noted that 3DGen's speed advantage varied widely across the compared systems, reaching up to 60 times faster.

Conclusion

3D generation technology is already being applied across various industries. For instance, the 3D generation startup Meshy AI has released Meshy, a free 3D generation model that has been widely adopted by independent game developers.

Although Meta's way of combining AssetGen and TextureGen is relatively simple, the work points to two promising research directions: generation in both view space and UV space, and end-to-end iteration over texture and shape generation.

The rapid advancement of AI-driven 3D generation holds significant implications for how the 3D modeling industry evolves, and Meta is once again leading the way.


Disclaimer: The views in this article are from the original creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.