Nvidia had an undeniably brilliant 2024.

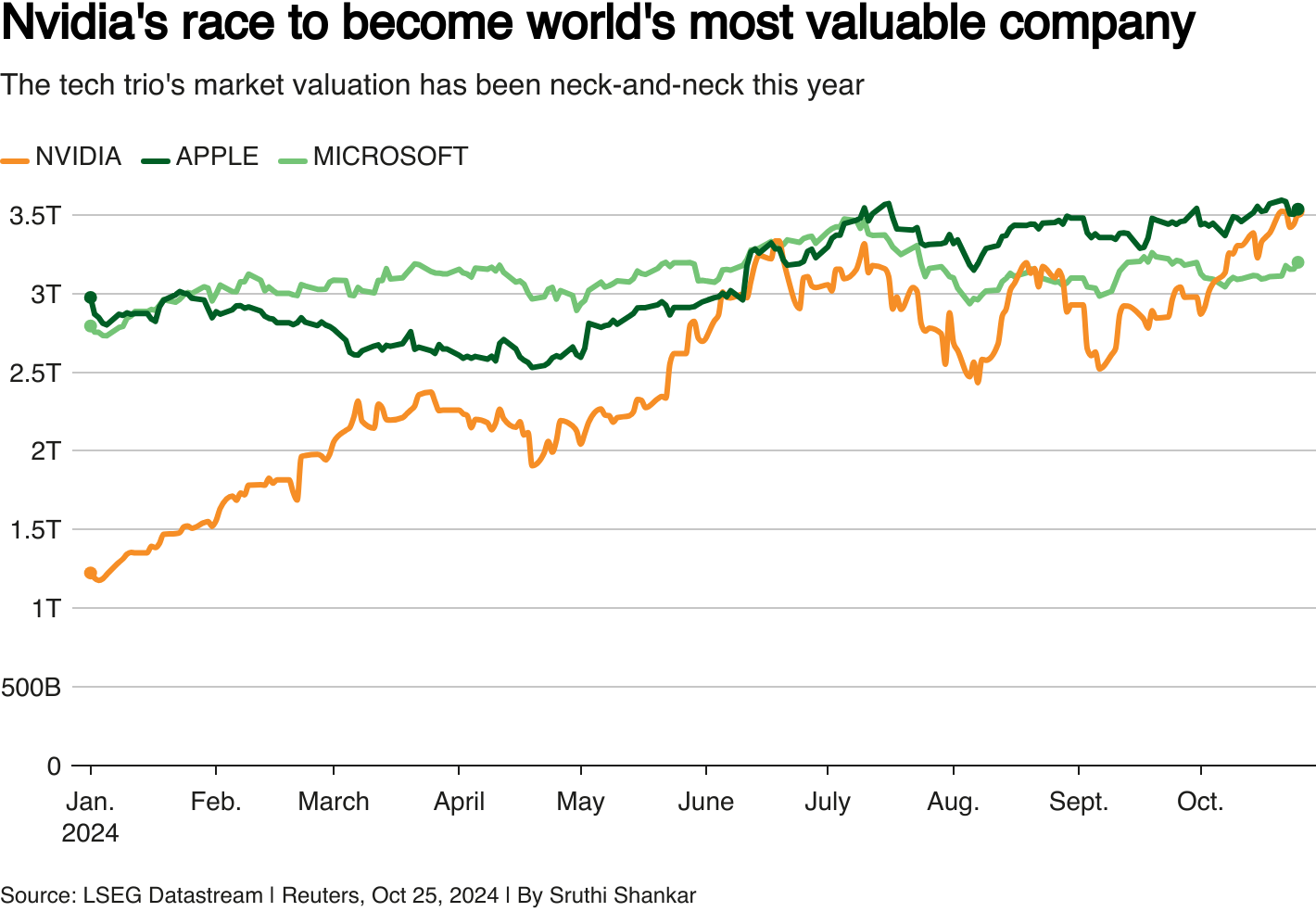

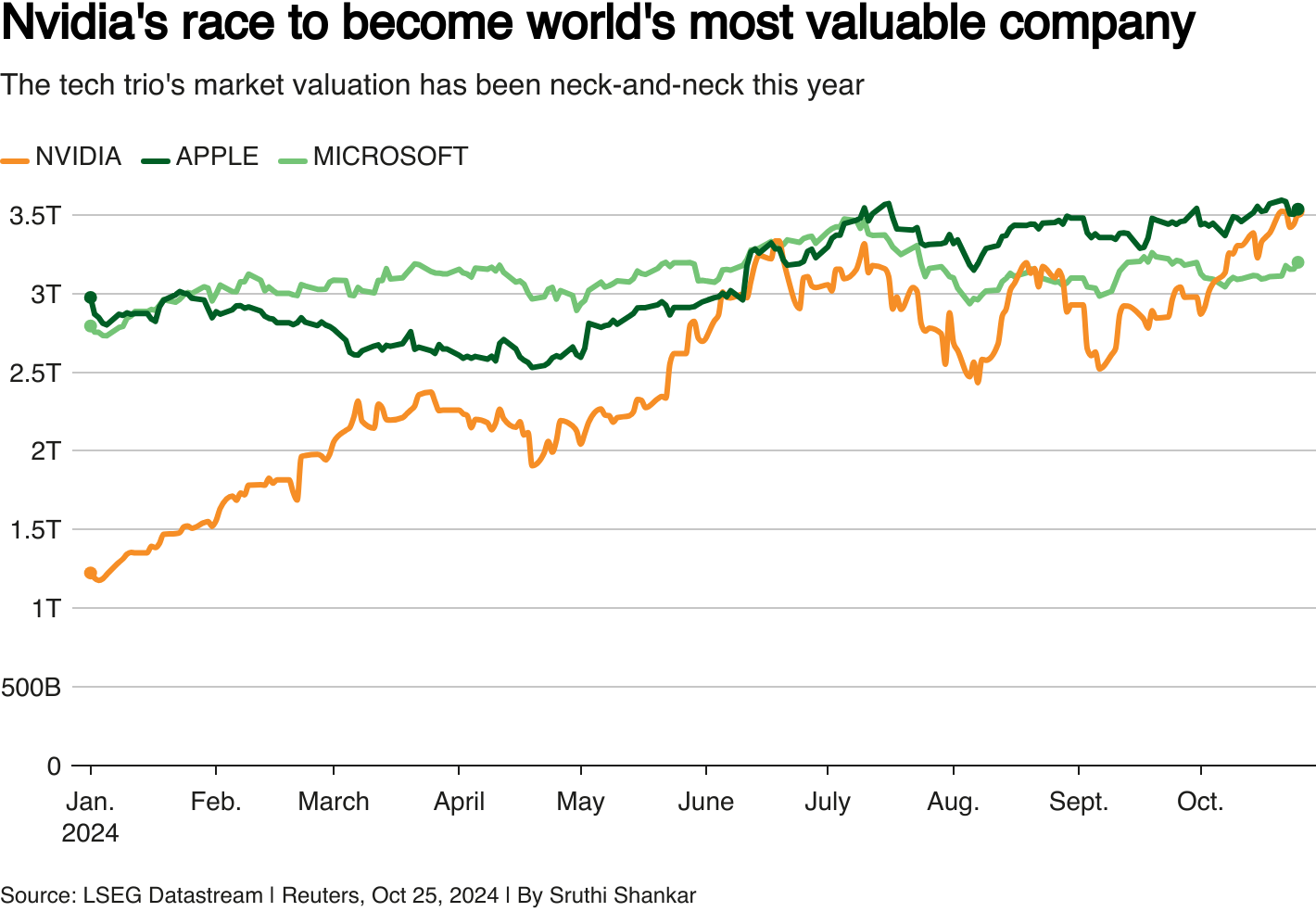

Thanks to its early bet on artificial intelligence, Nvidia's share price and revenue have soared this year. The company has repeatedly traded places with Apple as the world's largest publicly traded company by market value, surpassing the US$3 trillion mark. Nvidia CEO Jensen Huang has also become one of Silicon Valley's most sought-after executives, meeting frequently with business leaders and politicians.

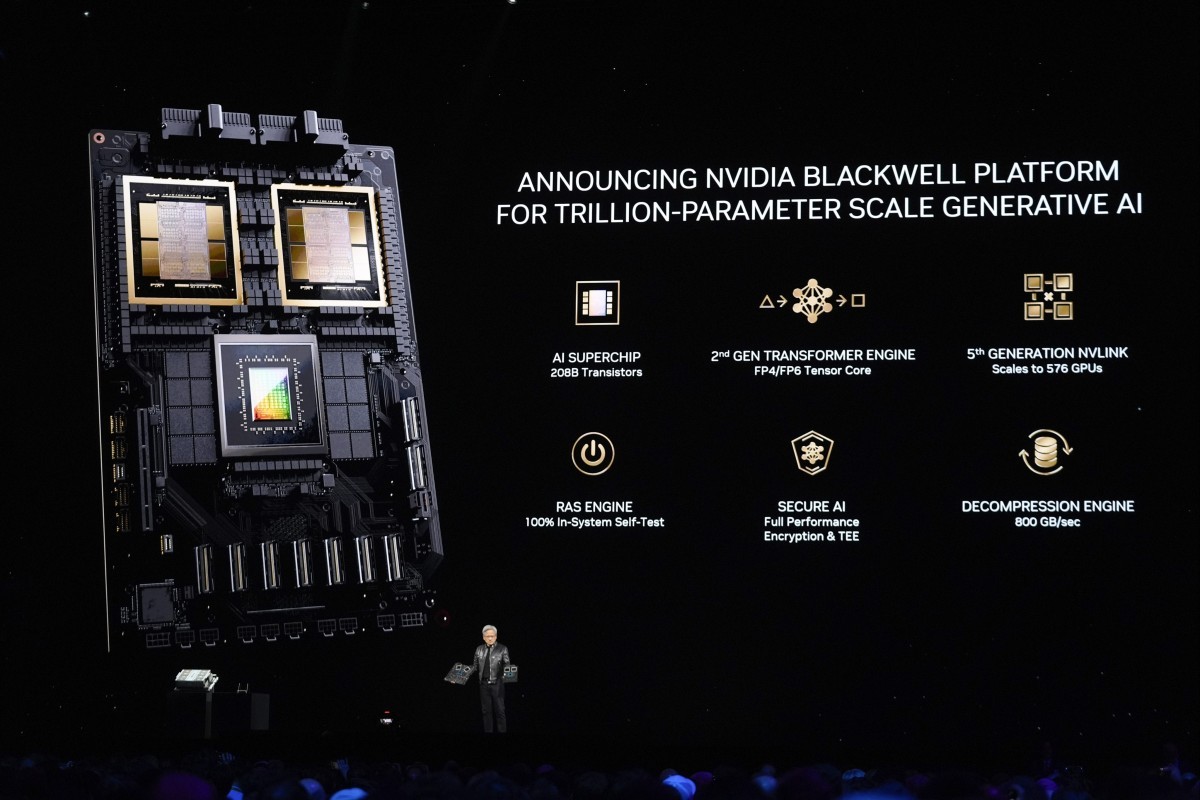

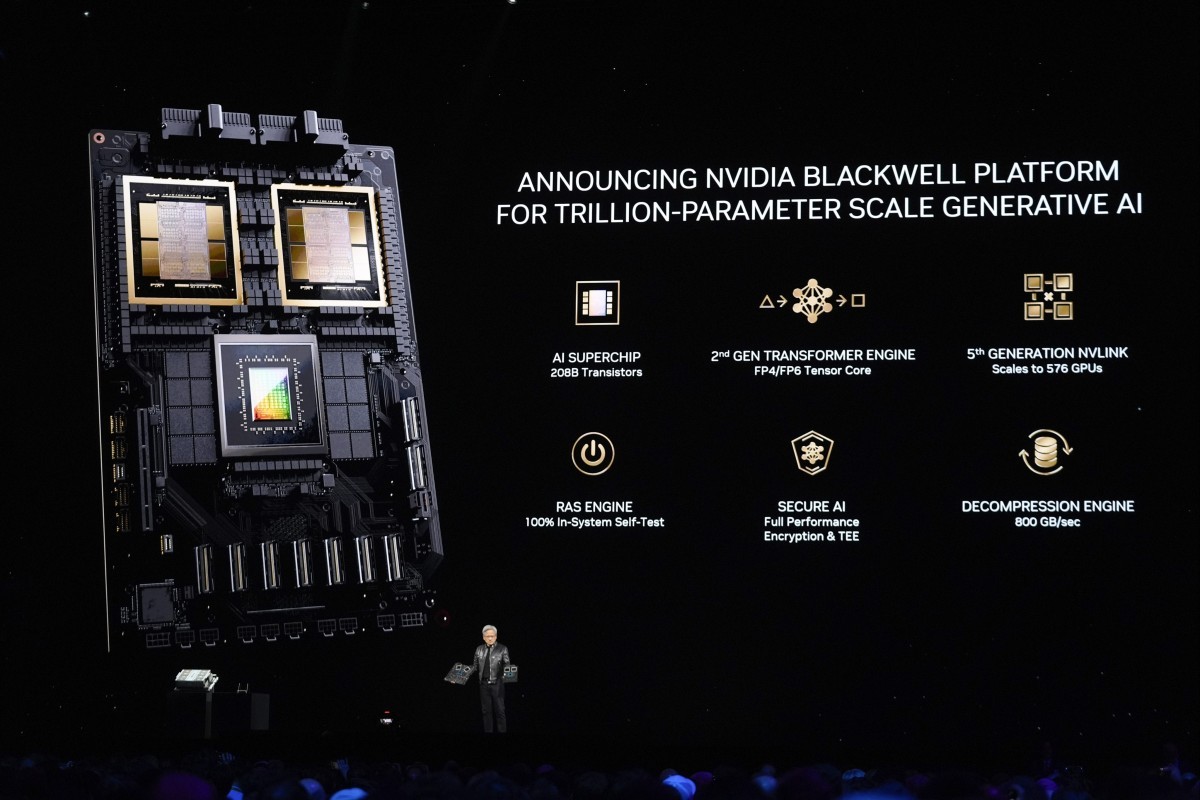

Looking ahead, Nvidia is ramping up production of its high-powered Blackwell chips, which are used for artificial intelligence applications. The company expects to ship billions of dollars' worth of this hardware in the fourth quarter alone, and more in the coming year.

Daniel Newman, CEO of Futurum Group, said Nvidia has hardware and software suited to the era of AI computing. Its products are connected both inside and outside the server rack, and its software is widely adopted by the developer community.

But competitors will not sit still, and 2025 may be a critical year in which Nvidia faces real challenges.

On the hardware side, companies like AMD are trying to poach Nvidia's customers and erode its estimated 80% to 90% market share. Even Nvidia's own customers are developing chips to reduce their reliance on the graphics giant's semiconductors.

In addition, Broadcom's share price has risen 113% this year. Its CEO, Hock Tan, said AI alone could represent a $60 billion to $90 billion opportunity for the company in 2027. Broadcom is also considered a potential competitor to Nvidia.

Still, analysts say challenging Nvidia will be a difficult task for any company. Dethroning it as the king of AI is almost impossible, at least in 2025.

Nvidia: the overlord of the AI market

Nvidia gained a head start in the AI market through its early investments in AI software, which unlocked the use of its graphics chips as high-performance processors. It has maintained its lead thanks to continued improvements in its hardware and in its CUDA software, which lets developers build applications for its chips.

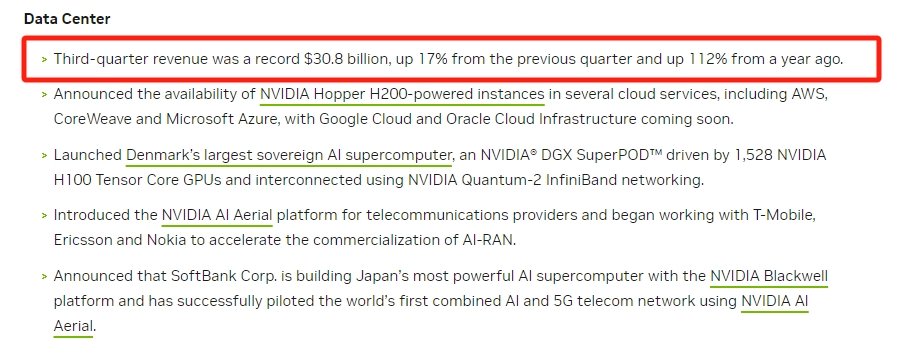

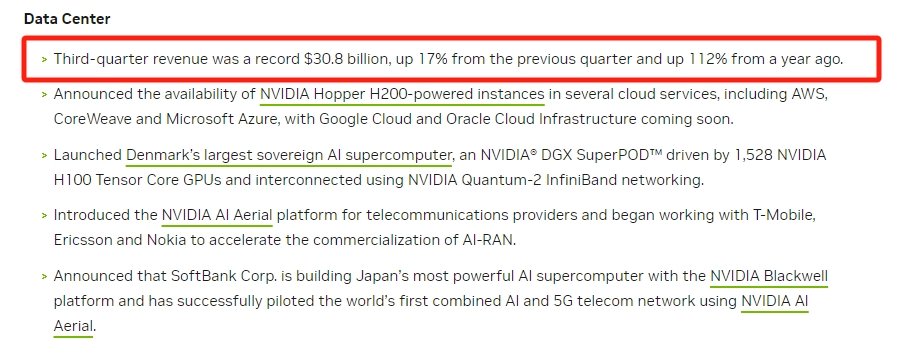

As a result, the so-called hyperscale cloud service providers, including Microsoft (MSFT), Alphabet's Google, Amazon (AMZN), and Meta (META), continue to spend heavily to buy as many Nvidia chips as possible. In its most recent quarter, Nvidia reported total revenue of $35.1 billion. Of this, $30.8 billion, or about 88%, came from its data center business.

"Everyone wants to build and train these huge models, and the most effective way is to use CUDA software and Nvidia hardware," said Bob O'Donnell, president and principal analyst of TECHnalysis Research.

Nvidia is also expected to continue to power much of the AI industry in 2025. The company's Blackwell chips, the successor to the Hopper family of processors, are currently in production, and customers such as Amazon are already adding new cooling capacity to their data centers to cope with the enormous heat the processors generate. The build-out of AI infrastructure continues.

"I don't know the current backlog of Nvidia chip orders, but it's close to a year, if not a year," O'Donnell said. "So most of the products they may produce next year are almost sold out."

With hyperscale cloud service providers signaling capital expenditures in 2025 at least on par with 2024, some of that spending can be expected to go toward Blackwell chips.

Risks facing Nvidia

Although Nvidia is expected to keep the AI crown, there is no shortage of challengers who want to seize its throne. AMD and Intel (INTC) are the top contenders among chipmakers, and both have products on the market. AMD's MI300X series of chips is designed to compete with Nvidia's H100 Hopper chips, while Intel has its Gaudi 3 processor.

AMD is better positioned to take market share from Nvidia, as Intel continues to struggle with its turnaround and is searching for a new CEO. But even AMD will find it hard to break Nvidia's lead.

"What AMD needs to do is make the software truly usable and build systems that are more in demand... together with developers, ultimately, this could create more sales," Newman said. "Because these cloud service providers will sell the products their customers require."

It's not just AMD and Intel. Nvidia's customers are increasingly developing and promoting their own AI chips. Google has its Broadcom-based tensor processing units (TPUs), Amazon (AMZN) has its Trainium 2 processor, and Microsoft (MSFT) has its Maia 100 accelerator.

There are also concerns that the shift toward AI inference will reduce the need for high-performance Nvidia chips.

Technology companies develop AI models by feeding them large amounts of data, a process known as training. Training requires very powerful chips and a lot of energy. Inference, the process of actually using these trained models, consumes fewer resources and less energy. The worry is that as inference becomes a larger share of AI workloads, companies will no longer need to buy as many Nvidia chips.

Jensen Huang has said he is ready for this, explaining at various events that Nvidia's chips are as good at inference as they are at training.

Even if Nvidia's market share declines, it doesn't necessarily mean its business will be worse than before.

"This is definitely a case of a rising tide lifting all ships," Newman said. "So even if they face more competition, which I think they definitely will, that doesn't mean they will fail. It's just that people are making a bigger pie."