After the U.S. stock market closed last Wednesday, Nvidia delivered a seemingly eye-catching report card: revenue in the fourth quarter of fiscal 2025 rose 78% year-on-year to US$39.3 billion, and annual revenue exceeded US$100 billion for the first time, reaching US$130.5 billion, up 114% year-on-year.

However, this financial report, which analysts called "textbook growth," failed to prevent the share price from plummeting 8.4% the following trading day. Some US$274 billion in market value evaporated, and the company fell below the psychological threshold of US$3 trillion. This dramatic reversal reflects the market's sober reassessment of the investment frenzy in AI, and it also reveals Nvidia's complicated position under simultaneous pressure from technology iteration, the competitive landscape and capital-market expectations.
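As a rough consistency check on the figures quoted above, the percentage drop and the dollar loss together imply a market value before and after the sell-off. The sketch below assumes that both figures describe the same one-day move and ignores rounding in the reported numbers; the function name is purely illustrative.

```python
# Figures quoted in the article.
DROP_PCT = 8.4         # one-day share price decline, in percent
VALUE_LOST_BN = 274.0  # market value erased, in US$ billions


def implied_market_caps(drop_pct, value_lost_bn):
    """Return (before, after) market value in US$ billions.

    Assumes value_lost = before * drop_pct / 100, i.e. both inputs
    describe the same price move.
    """
    before = value_lost_bn / (drop_pct / 100.0)
    after = before - value_lost_bn
    return before, after


before_bn, after_bn = implied_market_caps(DROP_PCT, VALUE_LOST_BN)
print(f"Implied value before the drop: US${before_bn / 1000:.2f} trillion")
print(f"Implied value after the drop:  US${after_bn / 1000:.2f} trillion")
```

Under these assumptions the implied value after the drop lands just under US$3 trillion, which is consistent with the article's statement that the company slipped below that threshold.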

The market's "high expectation fatigue" for Nvidia was the core trigger of the sell-off.

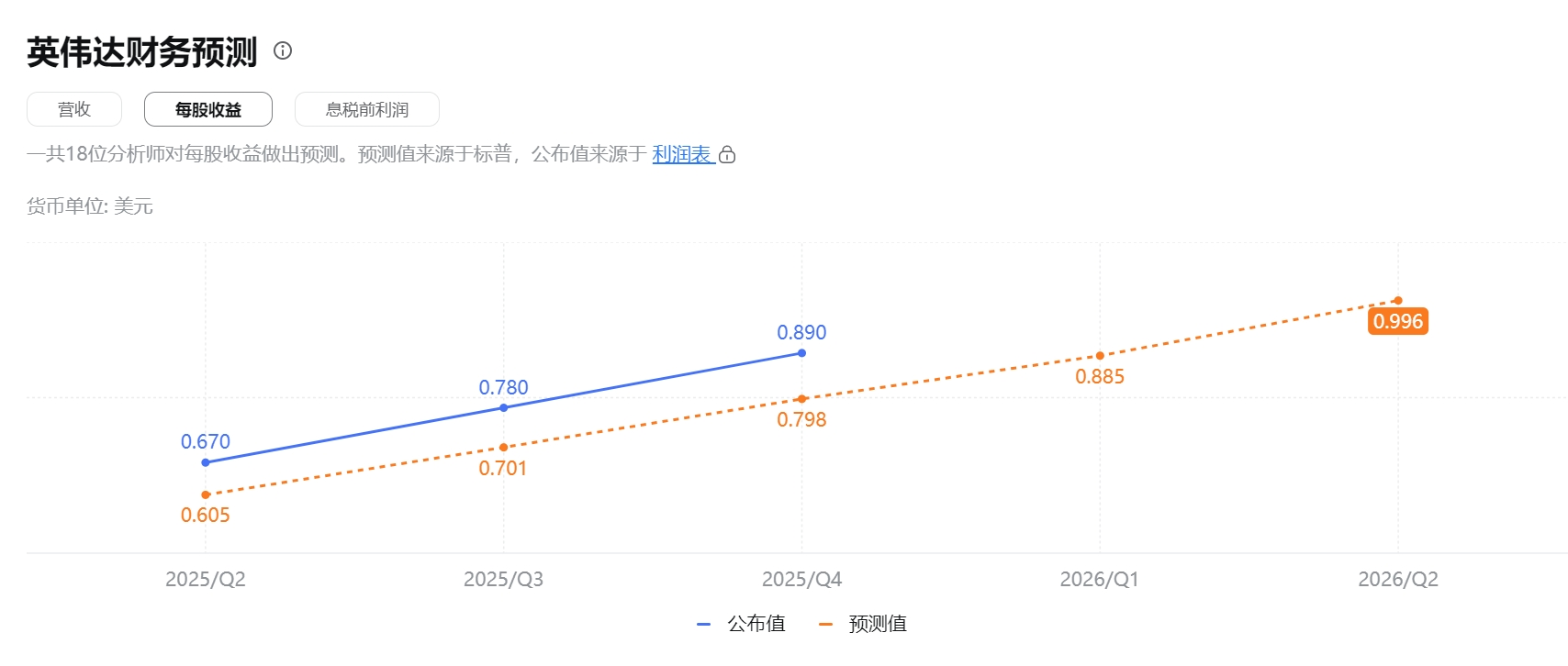

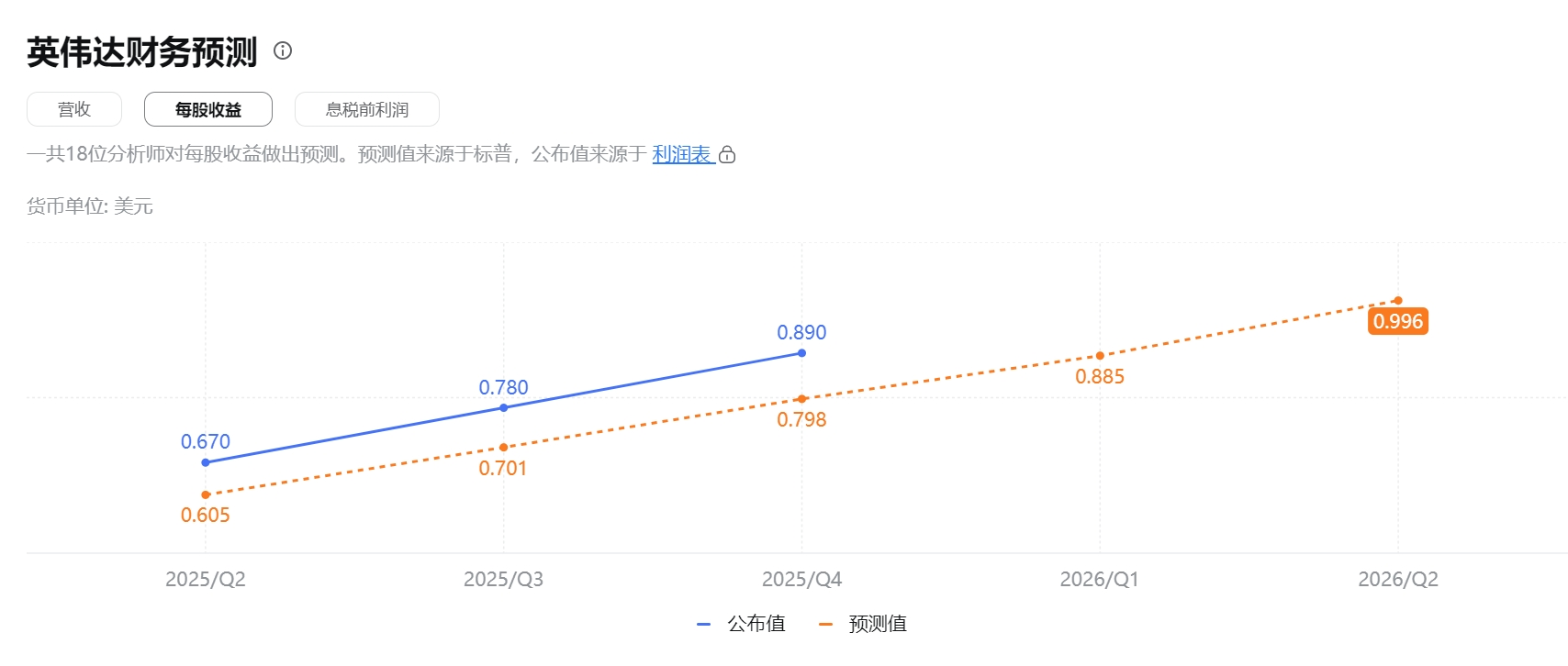

Although data center revenue in the fourth quarter rose 93% year-on-year to US$35.6 billion, far exceeding market expectations, investors have become accustomed to treating "exceeding expectations" as the norm for Nvidia. When management gave revenue guidance of US$43 billion for the first quarter of fiscal 2026 (only 2% above the market consensus), this "mediocre beat" was read as a negative.

More importantly, the gross margin forecast dropped from 73% to 71%, exposing pressure from rising supply-chain expediting fees and cost control, and touching a sensitive nerve in the market about a narrowing profit moat. JPMorgan analyst Harlan Sur pointed out that Nvidia is accelerating the delivery of Blackwell chips by sacrificing some of its profit. Although this strategy of trading price for volume can consolidate market share, it may weaken the company's premium-pricing aura.

Deeper market anxiety stems from the potential disruption of technological paradigms.

The open-source reasoning model R1, launched by the Chinese artificial intelligence company DeepSeek, achieves comparable performance at one-tenth of the computing cost, directly challenging Nvidia's business model of "computing power is power." Although CEO Jensen Huang emphasized on the earnings call that "reasoning itself is still a computation-intensive task" and pointed to Blackwell chips' US$11 billion in quarterly sales as proof of the company's technical advantage, the market has begun to reassess the technical threshold and competitive landscape at the inference stage. The emergence of R1 not only reduces the reliance of AI inference on high-end GPUs, but may also push the industry toward a new balance of "low-cost computing power + algorithm optimization," which poses a long-term threat to Nvidia, whose growth depends on hardware sales.

Faced with doubts, institutional bulls led by Bank of America still showed firm confidence.

Bank of America analyst Vivek Arya reiterated his "buy" rating, raised his year-end price target from US$190 to US$200, and predicted that Nvidia will generate US$200 billion in free cash flow over the next two years, a figure comparable to Apple's cash generation.

The logic is as follows. First, Nvidia's 80%-85% share of the US$400 billion AI chip market will be difficult to shake in the short term. Second, giants such as Meta and Microsoft have not reduced AI capital expenditures but have continued to expand data center construction, providing Nvidia with dependable demand. More importantly, the explosive growth of Blackwell chips (sales of US$11 billion in the fourth quarter) has confirmed their irreplaceability in the wave of generative AI. This three-in-one advantage of "hardware + software + ecosystem" keeps Nvidia at least one step ahead of its competitors in customer stickiness and the speed of technology iteration.

However, Nvidia's long-term concerns are emerging.

First, founder Jensen Huang's personal influence is deeply bound to the company's fate, and his eventual retirement could trigger a succession gap in management. Historical experience shows that generational handovers at technology giants are full of uncertainty: Microsoft's transition from Ballmer to Nadella turned out well, while Intel, under professional managers, missed the mobile revolution. Second, Chinese chip companies are catching up faster than expected. Although Nvidia relies on the CUDA ecosystem and process technology to build barriers, the continued breakthroughs of SMIC and other manufacturers in mature processes, together with the spread of courses on domestic GPUs in Chinese universities, may gradually erode its ecosystem advantage over the next 5-10 years. Third, geopolitical risks have intensified. U.S. chip export controls and potential tariff policies have forced Nvidia to walk a tightrope between compliance and market expansion, while the rise of alternatives in the Chinese market may weaken its global pricing power.

The essence of the current stock price fluctuations is the widening of the market's differences in AI investment logic.

Bears believe that DeepSeek's technological breakthrough heralds diminishing marginal returns in the computing power arms race. Coupled with rumors that customers such as Microsoft may cut back on data center leases, the risk of overheated investment in AI infrastructure is building. Bulls, by contrast, see that generative AI applications have only touched the tip of the iceberg in consumer electronics, medical care, autonomous driving and other fields, and that Nvidia's parallel computing capabilities still have huge room to be put to use. This divergence is particularly evident in the options market: after the earnings report was released, some traders made a large bet that Nvidia's share price would fall to US$115 before March 7, and the number of bearish contracts surged by 300,000, in sharp contrast to the firm positions of institutional bulls.

Standing at the crossroads of industrial transformation, Nvidia's response will determine whether it can weather the cycle. In the short term, accelerating mass production of Blackwell chips and deepening solution partnerships with companies such as Accenture and ServiceNow are the keys to maintaining its growth momentum. In the medium and long term, it needs to deepen its ecosystem moat: binding developers through continuous iteration of the CUDA platform, while exploring AI software services and subscription models to gradually reduce its reliance on hardware sales as a single source of revenue. As company insiders put it: "As long as the speed of innovation exceeds the speed of market questioning, the stock price will eventually return to value." This US$3 trillion bet may have only just entered deep water.

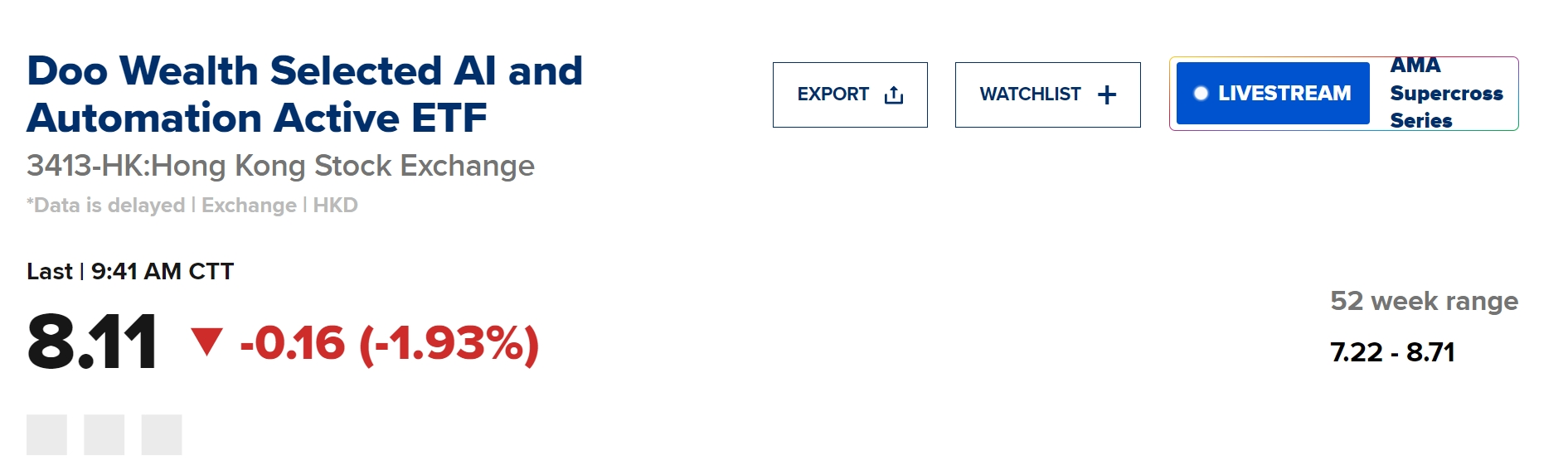

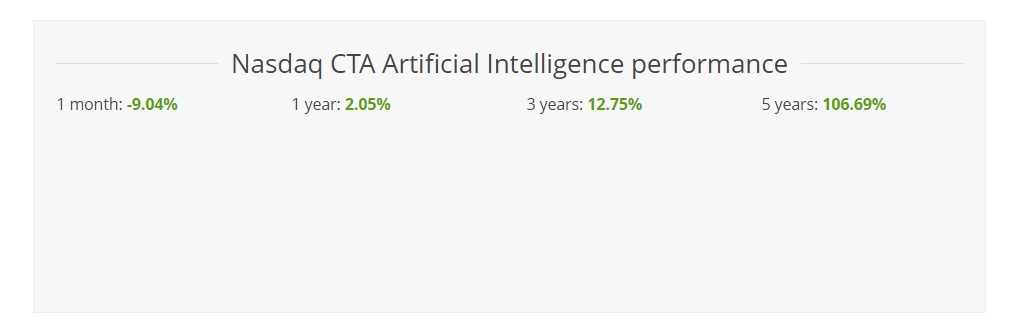

Faced with the surging wave of AI, how should we ordinary people invest?

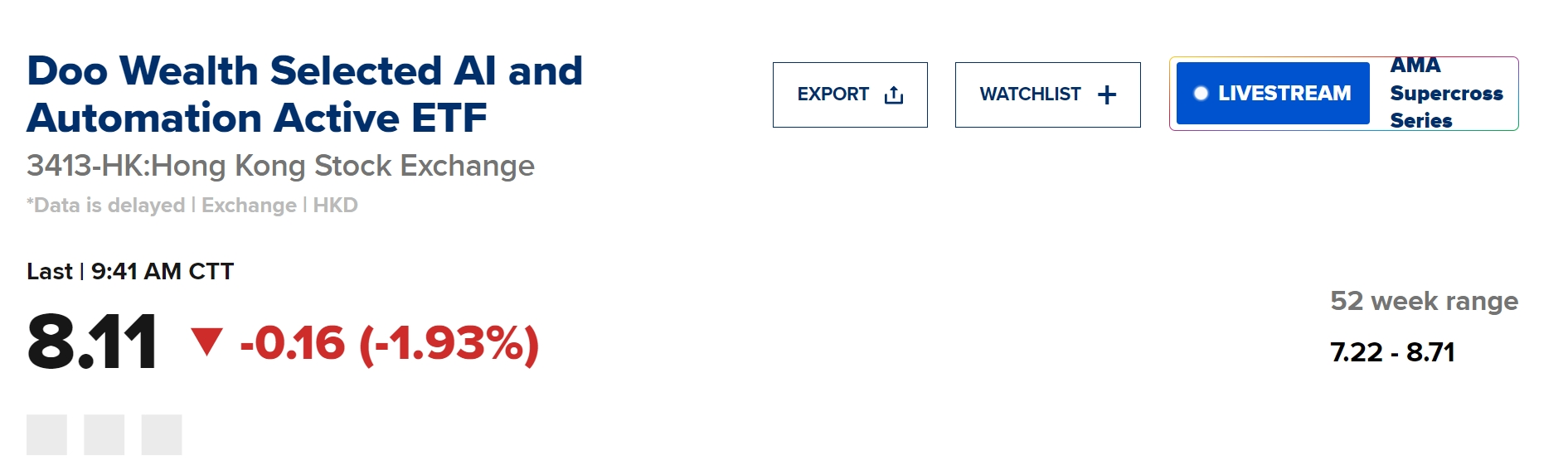

The share prices of companies tied to the artificial intelligence theme, such as Nvidia, Oracle, Google, Microsoft and Meta, are generally high, so the capital required for ordinary investors to hold several of these stocks is considerable. In contrast, artificial intelligence-related ETFs have a low entry barrier, generally costing only a little over US$100 for one lot (100 units).

ETFs offer a wide selection of products covering upstream and downstream companies in the artificial intelligence industry chain. Investors can diversify risk and share in the industry's growth without in-depth research on individual stocks. In addition, ETFs are much less exposed to trading suspensions or delisting than individual stocks and can trade normally even in a bear market, giving investors the opportunity to cut their losses. With their low entry barrier, transparent trading, wide selection, relative stability and support for exchange trading, ETFs have become an ideal way for ordinary and novice investors to participate in the artificial intelligence market.

Initially, the emergence of DeepSeek raised concerns about the market's demand for computing power. DeepSeek caused panic because it demonstrated that AI models could be trained at a fraction of the cost of other models, which could reduce the need for data centers and expensive, advanced chips. In fact, however, DeepSeek has pushed the AI industry toward reasoning models that require more resources, which means computing infrastructure is still necessary.

Looking ahead, forecasts suggest that even if training AI models comes to require only a small fraction of today's computing power, the increased demand from reasoning models answering user questions may make up for it. As companies discover that new AI models are more powerful, they are calling on them more and more. This shifts the need for computing power from training models to using them, known in the AI industry as "inference."

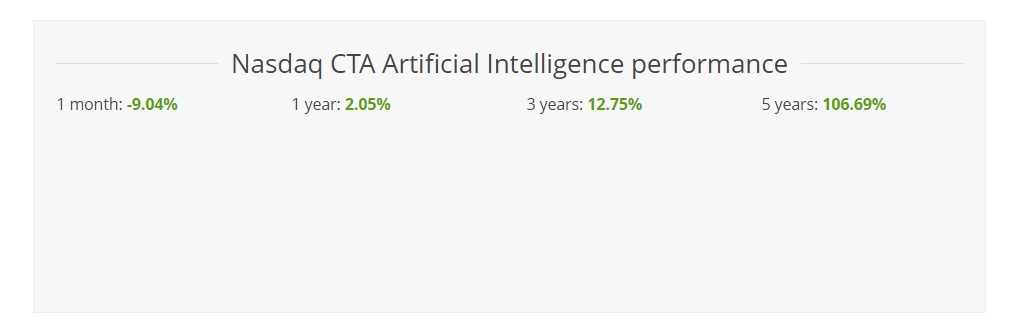

The following are some popular artificial intelligence ETF products on the market, given as examples only and not as recommendations:

Venture capitalist Tomasz Tunguz said investors and large technology companies are betting that demand for AI models could increase by a factor of a trillion or more over the next decade, driven by the rapid spread of reasoning models and AI.

This suggests that the era of large-scale artificial intelligence infrastructure build-out is continuing.