A Quick Rundown of the Five Highlights of the 2024 Google I/O Developer Conference

All signs indicate that AI models have become Google's top priority.

Recently, Google and Microsoft have engaged in fierce competition in the field of artificial intelligence.

The day before, OpenAI released GPT-4o, a major update that upended the earlier voice interaction mode, flooded feeds across the internet, and put pressure on Google. The next day, May 15, Google lived up to expectations and released more than a dozen product updates at the 2024 I/O Developer Conference, defending its status as the "industry leader" in artificial intelligence.

Over those two days, this feast for the artificial intelligence industry broke with the tradition of previous technology events, as the internet giants fought a close battle on the AI track. During the I/O opening keynote, which lasted less than two hours, Google CEO Sundar Pichai and a group of Google executives mentioned "AI" 121 times. Demis Hassabis, co-founder and CEO of Google DeepMind, gave his first speech at an I/O conference, second only to Pichai. All signs indicate that AI models have become Google's top priority.

Let's take a look at the five highlights of the 2024 I/O Developer Conference.

The first highlight: Google released Gemini Live, a voice-conversation AI assistant that lets users talk to Gemini in the mobile app.

The app has already been updated on iOS and Android, and Gemini will offer a full-screen experience with audio waveform effects. Users can speak at their own pace, and Google adapts to their rhythm. They can also interrupt Gemini at any point in its reply to add new information or ask for an explanation, which makes the feature quite powerful.

This time, Google offers Gemini users 10 different voice options. As part of "Project Astra," the feature will soon be upgraded to support video conversations. Google also played a video demonstration in which its AI agent "Project Astra" identified objects shown to the camera and understood code displayed on a computer screen, among other tasks.

The second highlight: Google also released generative AI tools for images, video, and music, named Imagen 3, Veo, and Music AI Sandbox, respectively.

The most eye-catching of these is undoubtedly Veo.

As a brand-new video generation model, Veo can help users create and edit high-quality 1080p videos of more than 70 seconds, in a range of visual styles, from a single text, image, or video prompt. Moreover, unlike Sora, it lets users customize a variety of style modes and extend the duration so that Veo-generated videos run longer than one minute. Google says some of Veo's features will be opened to select creators on labs.google.

Imagen 3 should not be underestimated either. Google says Imagen 3 is favored over other large models that generate images from text alone. Pichai called Imagen 3 "the best model to render text to date." Users can sign up for Imagen 3 on labs.google, and developers and enterprise customers will be able to use it later.

Music AI Sandbox is a collaboration between Google and a group of artists from YouTube, combining technology with musical expertise. Musicians say the AI is like a friend who encourages them to try one thing and then another, which frees their creativity and lets them make music more efficiently.

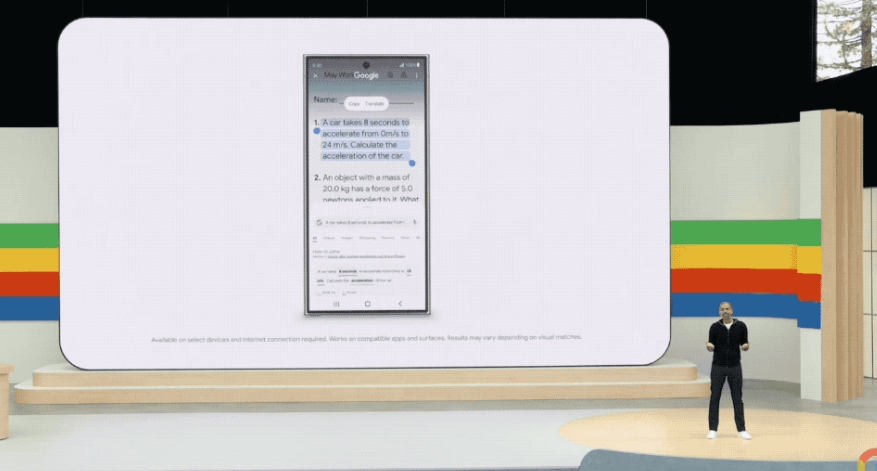

The third highlight: Google is about to introduce Gemini-powered AI Overview features in Google Search.

The new features will enable search to support multi-step reasoning, break down complex problems, compress research that would otherwise take minutes or even hours into seconds, and let users ask questions about videos in search.

AI Overview will bring a completely different experience to Google Search. The tool aggregates search content at the top of the results page and draws on other native Google apps (such as Google Maps) to answer users' questions and respond to video input. Pichai announced that Google will begin rolling out the improved Gemini-based search experience to every U.S. user today and will open it to more countries this week. Liz Reid, head of Google Search, stressed that Google's AI search overview has three unique advantages: real-time information, ranking and quality systems, and the capabilities of the Gemini model.

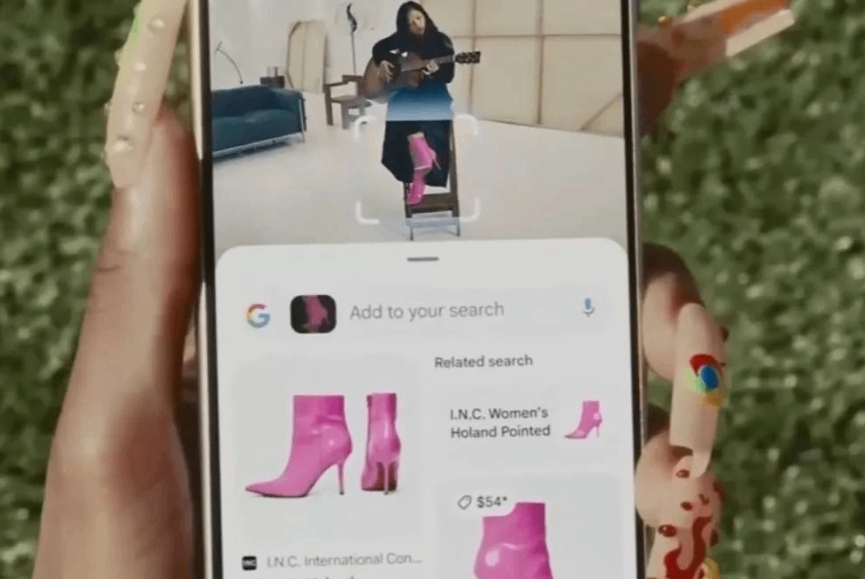

The fourth highlight: Google will gradually integrate artificial intelligence into Android devices.

At this conference, Google announced that its artificial intelligence technology will be integrated into Android devices through Gemini Nano (the smallest Gemini model) to run artificial intelligence locally.

The company said that later this year, Pixel phones will gain multimodal AI capabilities through Gemini Nano. A Google employee explained at the conference: "This means that your phone can understand the world in a way you understand," adding, "With Gemini Nano, devices can react to text, visual and audio input."

The model uses context collected from the user's phone and runs the workload locally on the device, which can reduce some privacy concerns. Running AI locally also minimizes the latency that can occur when it runs on a remote server, and because all the work is done on the device, it can function without an internet connection.

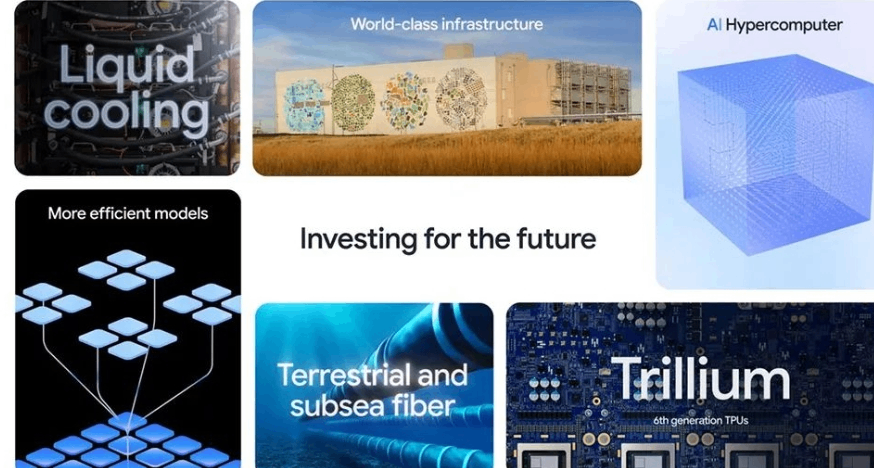

The fifth highlight: Google announced improvements to its Gemini 1.5 Pro model, the launch of the new Gemini 1.5 Flash model and two new Gemma models, and the release of a new tensor processing unit (TPU).

First, the changes to Gemini 1.5 Pro include quality improvements in translation, coding, reasoning, and other uses. The new Gemini 1.5 Flash is a smaller model, optimized for narrower tasks where speed comes first. Gemini 1.5 Pro and Gemini 1.5 Flash are available in preview from this Tuesday and will be generally available in June.

Second, Google has also introduced two new models for Gemma, its series of "lightweight open models": PaliGemma and Gemma 2. PaliGemma, an open vision-language model that the company says is the first of its kind, will launch Tuesday. Gemma 2, the next generation of Gemma, will launch in June.

Finally, Google released its sixth-generation TPU, Trillium, which the company said improves computing performance per chip by 4.7 times compared to the previous generation. The company also reiterated that it will be one of the first cloud providers to offer Nvidia Blackwell GPUs in early 2025.

Pichai said Google is still in the early stages of transforming its AI platform, and "we see tremendous opportunities for creators, developers, startups and everyone. Helping drive these opportunities is what our Gemini era is all about."

Disclaimer: The views in this article are from the original Creator and do not represent the views or position of Hawk Insight. The content of the article is for reference, communication and learning only, and does not constitute investment advice. If it involves copyright issues, please contact us for deletion.