Apple AI “Off the Hook”: OpenELM's Freedom on Mobile Devices

On April 24, Apple released OpenELM, an open-source AI model that can run directly on mobile phones, laptops, and other devices.

Since the emergence of ChatGPT, AI has become a key competitive arena for major technology companies. Consumer electronics (3C) manufacturers such as Samsung and Xiaomi have begun building large language models (LLMs) into their smartphones, tablets, and other end devices, fueling the popularity of AI-enhanced photo, text, and video features. For a long time, however, Apple appeared to have made little progress in generative AI, rarely disclosing the AI capabilities of its products and preferring to rely on third-party tools.

During the earnings call in February this year, Apple CEO Tim Cook revealed the company's generative AI plans for the first time, announcing that it would integrate AI technology into its software platforms (iOS, iPadOS, and macOS) within the year. He said Apple has tremendous opportunities in generative AI and in AI more broadly, that the company will continue to invest in AI research, and that it plans to share details of its work in this field later this year.

On April 24, Apple released its first efficient language model, OpenELM, on HuggingFace, with capabilities including text generation, code generation, translation, and summarization. Ahead of WWDC 2024, Apple also open-sourced the model's weights and inference code, along with details of the training datasets and the training logs, and made its neural network library, CoreNet, available to the public.

According to reports, OpenELM is positioned as a family of very small models in four sizes (270M, 450M, 1.1B, and 3B parameters), each available in a pretrained and an instruction-tuned version. Compared to Microsoft's Phi-3 Mini at 3.8B parameters and Google's Gemma at 2B, the smaller OpenELM variants have lower operating costs and can run on devices such as smartphones and laptops without relying on cloud servers.
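To give a feel for why models of this size fit on end devices, the following is a rough back-of-the-envelope sketch, not a measurement. It multiplies the nominal parameter counts quoted above by an assumed number of bytes per parameter. The precision labels and the helper name `weight_memory_gib` are illustrative assumptions, and the estimate covers weights only, ignoring activations and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts are the nominal sizes quoted in the article.
PARAM_COUNTS = {
    "OpenELM-270M": 270e6,
    "OpenELM-450M": 450e6,
    "OpenELM-1.1B": 1.1e9,
    "OpenELM-3B": 3e9,
}

# Assumed storage formats (illustrative, not Apple's published configurations).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Return the approximate size of the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    for name, num_params in PARAM_COUNTS.items():
        row = ", ".join(
            f"{precision}: {weight_memory_gib(num_params, precision):.2f} GiB"
            for precision in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

Under these assumptions, even the largest variant stays within a few GiB at half precision, which is consistent with the claim that the models can run without a cloud server.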

The OpenELM series is built on the "decoder-only Transformer architecture," a type of neural network that also underlies Microsoft's Phi-3 Mini and many other LLMs. (Apple's OpenELM shares its name with an unrelated open-source library from CarperAI, which aims to enable evolutionary search with language models in code and natural language.) In this architecture, an LLM is composed of interconnected components known as "layers." The first layer receives and processes the user's input and passes the result to the next layer; this repeats layer by layer until the final layer produces the response.

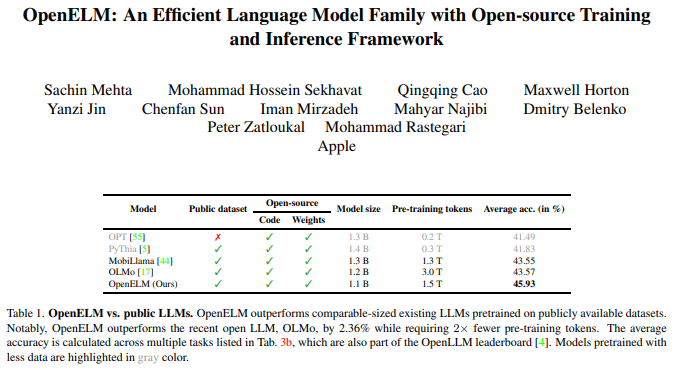

By employing a layer-wise scaling strategy, OpenELM allocates parameters non-uniformly across the layers of the Transformer model instead of giving every layer the same size, thereby improving accuracy. Compared to the OLMo open LLM released in February of this year, OpenELM achieves a 2.36% improvement in accuracy at a parameter scale of around 1B, while requiring half as many pretraining tokens.
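The idea of layer-wise scaling can be sketched in a few lines. The example below is a simplified illustration based on the description in the OpenELM paper, not Apple's CoreNet implementation: it linearly interpolates two multipliers across the depth of the network, one controlling the number of attention heads and one controlling the feed-forward width. The function name `layerwise_scaling`, the default ranges, and the plain rounding are assumptions made for illustration.

```python
def layerwise_scaling(num_layers, d_model, head_dim,
                      alpha=(0.5, 1.0), beta=(0.5, 4.0)):
    """Sketch of per-layer sizing via linear interpolation.

    alpha scales the attention width (number of heads) and beta scales the
    feed-forward hidden size. Both grow linearly from the first layer to
    the last. The default ranges are illustrative assumptions.
    """
    if num_layers < 2:
        raise ValueError("need at least two layers to interpolate")

    configs = []
    for i in range(num_layers):
        t = i / (num_layers - 1)  # 0.0 at the first layer, 1.0 at the last
        a = alpha[0] + (alpha[1] - alpha[0]) * t
        b = beta[0] + (beta[1] - beta[0]) * t
        configs.append({
            "layer": i,
            # Simple rounding; a real implementation may round to
            # hardware-friendly multiples instead.
            "num_heads": max(1, round(a * d_model / head_dim)),
            "ffn_dim": round(b * d_model),
        })
    return configs


if __name__ == "__main__":
    for cfg in layerwise_scaling(num_layers=4, d_model=1024, head_dim=64):
        print(cfg)
```

In this sketch, early layers are narrower and later layers are wider, so the same overall parameter budget is spent unevenly rather than split equally across layers.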

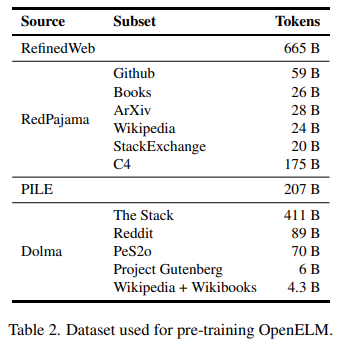

For training, Apple used CoreNet as the framework and trained for 350,000 iterations with the AdamW optimizer. Unlike earlier releases that provided only model weights and inference code and were pretrained on private datasets, OpenELM was trained on public datasets. According to information on the HuggingFace website, the pretraining dataset includes RefinedWeb, deduplicated PILE, a subset of RedPajama, and a subset of Dolma v1.6, totaling approximately 1.8 trillion tokens.

According to the paper released by the project's researchers, the reproducibility and transparency of LLMs are crucial for advancing open research, ensuring the trustworthiness of results, and investigating data and model biases as well as potential risks. To that end, Apple has also released code to convert the models to MLX, its machine learning framework for Apple silicon, for inference and fine-tuning on Apple devices.

The research team of OpenELM stated, "The comprehensive release aims to enhance and consolidate the open research community, paving the way for future open research endeavors."

Shahar Chen, CEO and Co-founder of AI service company Aquant, remarked, "Apple's release of OpenELM marks a significant breakthrough in the AI domain, providing more efficient AI processing capabilities and serving as an ideal choice for mobile or IoT devices with limited computing power. Consequently, this model enables rapid decision-making from smartphones to smart home devices, successfully tapping into the technological potential of AI in everyday life."

